2019

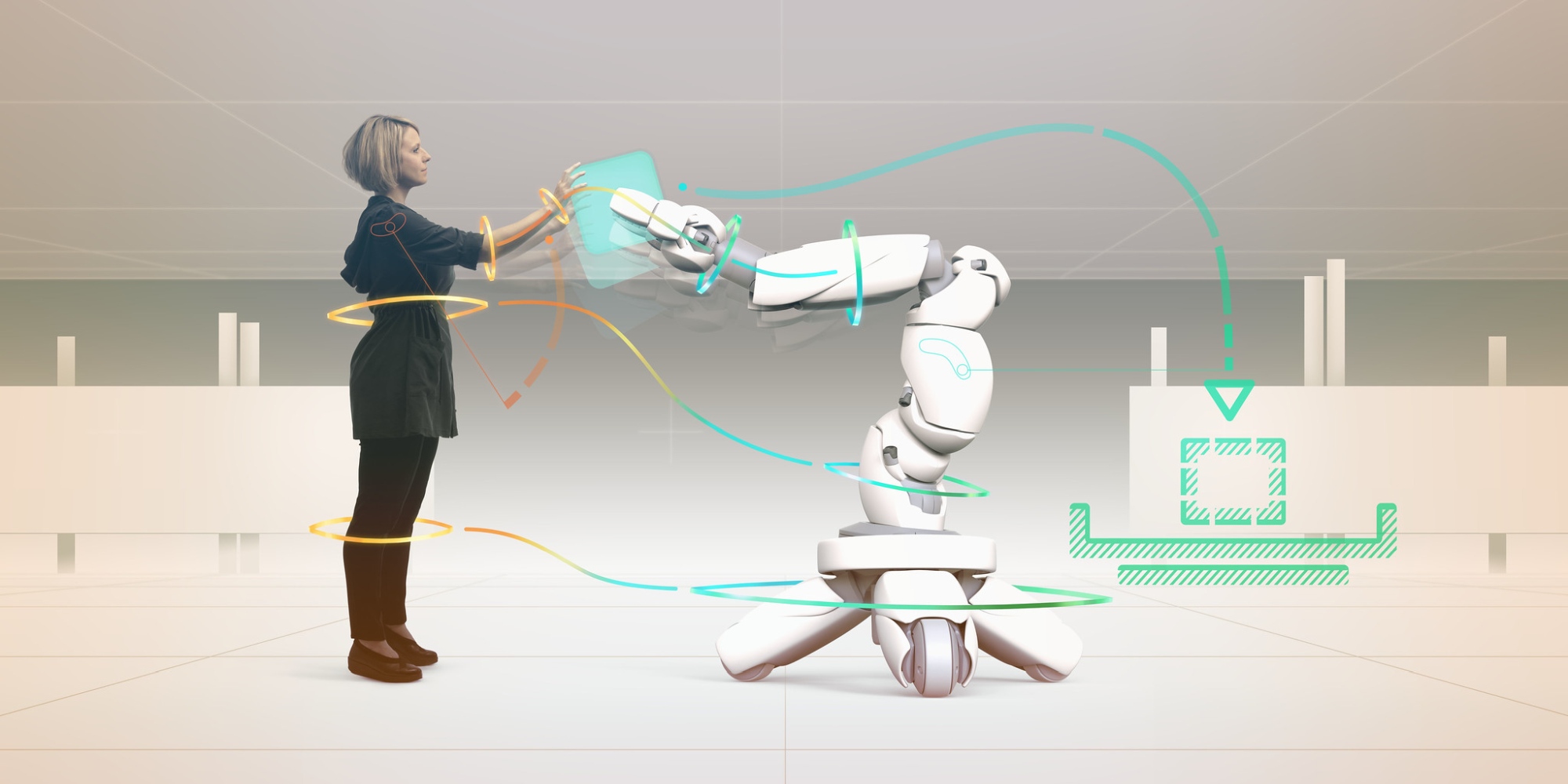

CoBot Studio

The project focuses on developing an immersive extended reality simulation in which communicative collaboration with mobile robots can be playfully tried out and studied under controlled conditions. With access to the technical infrastructure of Deep Space 8K as well as other VR and AR technologies, participants take part in collaborative games with robots in which tasks such as assembling or organizing small objects must be completed together.