What can we understand by the term Artificial Intelligence and what do we want to understand by it? What do we actually understand about human intelligence and what does it have to do with attempts at modelling it?

Questions about artificial intelligence (AI) open up room for speculation about the future, not least because several old and new questions remain unanswered: for example, what intelligence describes, what counts as artificial, and how to distinguish between what is not artificial and what is less artificial. What is certain, however, is that despite the many unresolved meanings of the term, AI technologies are already profoundly changing our everyday lives, our global connections, our cultures and our politics. This development raises questions about old concepts and new contexts, about how our social practices work, and about how we want to apply new technological means to them.

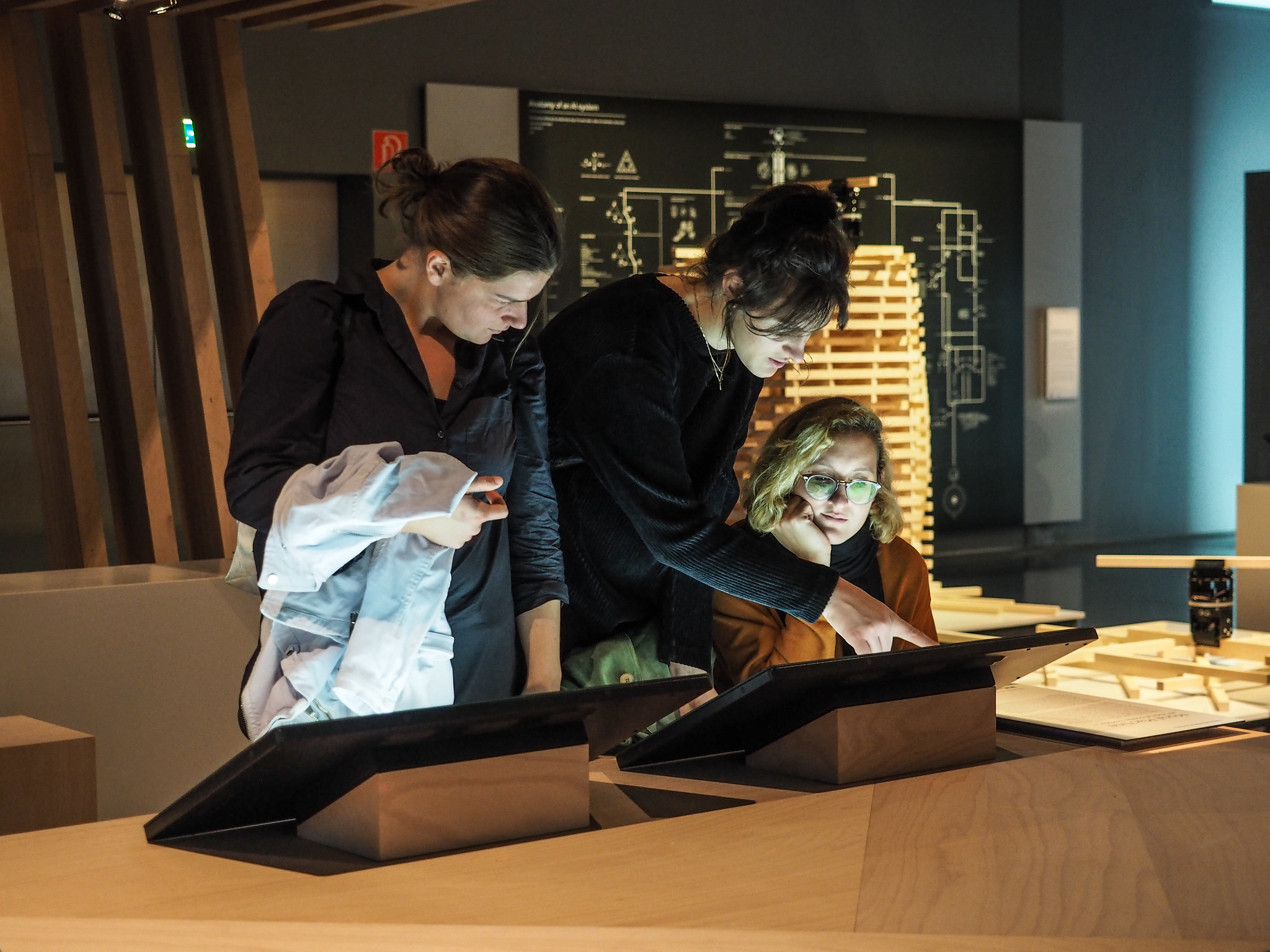

The Ars Electronica Center has dedicated a large part of its new permanent exhibition to these technologies, with the aim of providing an orientation aid, a compass for everything new that comes along. Under the title Understanding AI, important technical aspects and concrete examples of application are placed in social, ecological, political and ethical contexts, critically examined, and creatively expanded. The exhibition does not aim to find a universal answer or a single solution to the questions raised by AI. Rather, visitors should have the opportunity to acquire basic knowledge, establish their own points of reference, and work out for themselves where they stand on AI technologies.

The Ars Electronica Futurelab developed a series of educational stations on the technological content of the new exhibition. At its center is an attempt to illustrate the fundamental building blocks of various AI technologies and how they work. Using simplified examples, Neural Network Training shows how long chains of mathematical functions operate as so-called artificial neural networks. Named after and inspired by the nerve cells (neurons) that conduct signals in nervous systems, these networks turn input data into tendencies or probabilities and use them to categorize it. At the Ars Electronica Center, visitors can both train various networks and observe them in use.
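To make the idea of a "chain of mathematical functions" concrete, here is a minimal sketch of a tiny network's forward pass in plain Python. It is not the code behind Neural Network Training; the layer sizes and the hand-picked weights in `params` are hypothetical, and in a real network training would adjust those values. The final softmax step turns raw scores into probabilities, and the category with the highest probability is the network's answer.

```python
import math

def dense(inputs, weights, biases):
    # One layer: each output neuron computes a weighted sum of all inputs plus a bias
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    # Non-linear activation: negative values are set to zero
    return [max(0.0, v) for v in values]

def softmax(values):
    # Turns raw scores into probabilities that sum to 1
    m = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, params):
    # The whole network is a chain of functions: dense -> relu -> dense -> softmax
    hidden = relu(dense(x, params["w1"], params["b1"]))
    return softmax(dense(hidden, params["w2"], params["b2"]))

# Hypothetical, hand-picked parameters; training would adjust these values
params = {
    "w1": [[1.0, -1.0], [0.5, 0.5]], "b1": [0.0, 0.0],
    "w2": [[1.0, 0.0], [0.0, 1.0]], "b2": [0.0, 0.0],
}
probs = forward([2.0, 1.0], params)
category = probs.index(max(probs))  # index of the most probable category
print(probs, category)
```

Each function is simple on its own; the behavior of the network comes from composing them and from the values of the weights.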

At the end of the educational series, the Ars Electronica Futurelab illustrates the successive steps by which a Convolutional Neural Network (CNN), one of the basic building blocks of AI technology and deep learning, categorizes an image. During the training phase, the network learns different image features in several filter layers, from simple lines to curves to complex structures. It arranges these features independently, and they form the basis for categorizing images. These successive filters are visualized on eleven large displays in the Ars Electronica Center. At the end, a percentage diagram shows the AI system’s assessment of one of the objects shown to the built-in camera.
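The two ends of this pipeline can be sketched in a few lines, assuming a single filter and made-up class scores. The vertical-edge `kernel` below is hand-set for illustration (a trained CNN learns its filters itself and stacks many layers of them), and the class names and scores passed to `percentages` are hypothetical; the source does not say which classes or network the installation uses. The filter step is a "valid" 2D cross-correlation, which is what convolutional layers compute in practice, and the percentage step is a softmax scaled to 100.

```python
import math

def convolve2d(image, kernel):
    # "Valid" 2D cross-correlation, the operation a convolutional layer computes
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

def relu_map(feature_map):
    # Keep only positive filter responses
    return [[max(0, v) for v in row] for row in feature_map]

def percentages(scores):
    # Softmax over class scores, expressed as percentages
    m = max(scores.values())
    exps = {k: math.exp(v - m) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: 100 * e / total for k, e in exps.items()}

# A tiny 4x4 grayscale image: dark on the left, bright on the right
image = [[0, 0, 1, 1] for _ in range(4)]
# Hand-set vertical-edge filter; a trained CNN would learn such kernels
kernel = [[-1, 1],
          [-1, 1]]
edges = relu_map(convolve2d(image, kernel))
print(edges)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]: strongest where dark meets bright

# Hypothetical final-layer scores for three object classes
result = percentages({"cup": 2.0, "apple": 1.0, "phone": 0.1})
print(result)
```

Deeper layers apply the same operation to the feature maps of earlier layers, which is how simple edges can combine into curves and more complex structures.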

Vector Space relates all entries to the Prix Ars Electronica since 1986 to one another via different parameters. The model uses several AI technologies from text and image analysis to organize the archive into a three-dimensional representation. Image properties, text properties, or a combination of both determine how similar the entries are and position them in relation to one another.

Comment AI uses a public project of the Austrian Research Institute for Artificial Intelligence (OFAI) to train a future AI application at the Ars Electronica Center. Visitors classify collected comments from the online forums of various Austrian newspapers and thereby teach the application different categories, ranging from neutral to hate speech.
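The source does not say which model Comment AI trains. As one possible illustration of how labelled examples can teach a program categories, here is a minimal multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The comments, the labels `neutral` and `hate_speech`, and the whitespace `tokenize` helper are all hypothetical stand-ins for the visitor-labelled newspaper comments.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    # Hypothetical, deliberately naive tokenizer: lowercase and split on whitespace
    return text.lower().split()

def train(labelled):
    # labelled: list of (comment, category) pairs, e.g. as classified by visitors
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    vocab = set()
    for text, label in labelled:
        words = tokenize(text)
        class_counts[label] += 1
        word_counts[label].update(words)
        vocab.update(words)
    return word_counts, class_counts, vocab

def classify(text, model):
    word_counts, class_counts, vocab = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in class_counts:
        # Log prior plus the sum of smoothed log likelihoods of each word
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for w in tokenize(text):
            score += math.log((word_counts[label][w] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical training data
labelled = [
    ("thanks for the interesting article", "neutral"),
    ("good point well explained", "neutral"),
    ("you are all idiots", "hate_speech"),
    ("idiots like you should leave", "hate_speech"),
]
model = train(labelled)
print(classify("what an interesting article", model))
print(classify("you idiots", model))
```

Every additional comment a visitor labels adds to these word counts, which is why collecting many classifications matters more than any single one.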

In addition, the Ars Electronica Futurelab developed playful stations that let visitors experience AI technologies through direct interaction. In the style of the work Learning to See: Gloomy Sunday by the artist Memo Akten, who is also represented in the exhibition, two stations examine AI technology from the perspective of our tendency toward cognitive self-affirmation. Addressing phenomena such as prejudice, repression, and the interpretation of situations according to past experiences and prior convictions, these interactive stations trace such thought patterns in the structures of AI technology.

Pix2Pix: GANgadse converts visitors’ drawings into pictures of cats. Its Conditional Generative Adversarial Network (cGAN) has learned exclusively from images of cats and therefore interprets every input as a cat. ShadowGAN renders the outlines of visitors captured by a camera as landscapes, because its network was trained exclusively on landscape images.

With its questions about the relationship between human and technical reality, the exhibition aims to demystify the techniques and applications behind the concepts of artificial intelligence. As buzzwords, these concepts have for some time opened up vague spaces for discussion that resembled an advertising landscape to some and a chamber of horrors to others, rather than an informed debate. Instead of technocratic fantasies of power and creation or technophobic takeover scenarios, the Ars Electronica Futurelab’s didactic contributions to the exhibition aim to convey basic knowledge of AI technologies and approaches to using them independently.

Thanks to its accessibility and popularity, the exhibition at the Ars Electronica Center has since found an offshoot: the Ars Electronica Futurelab has designed two experience rooms for the Mission KI exhibition at the Deutsches Museum Bonn.

Read more about the Futurelab’s contribution to the Understanding AI exhibition in the interview with Ali Nikrang, Peter Freudling and Stefan Mittlböck-Jungwirth-Fohringer on the Ars Electronica Blog.

In Understanding AI – Episode 1, Ali Nikrang, Peter Freudling and Stefan Mittlböck-Jungwirth-Fohringer of Ars Electronica Futurelab explain how specially designed installations allow people to experience AI.

Credits

Ars Electronica Futurelab: Peter Freudling, Stefan Mittlböck-Jungwirth-Fohringer, Ali Nikrang, Arno Deutschbauer, Horst Hörtner, Gerfried Stocker, Erwin Reitböck, Susanne Teufelauer