False Positive investigates an interesting tension. On the one hand, it seems to be common understanding that states and big companies know everything about us anyway. At the same time, their knowledge about our lives is pieced together from a very selective and messy dataset: a part of our digital, online traces. The scale at which metadata can be gathered and analysed these days is unprecedented, but this can easily hide the fact that a lot of the knowledge these undoubtedly powerful institutions have about us is actually highly speculative in nature.

Machine learning algorithms attempt to generalize from observations. The concrete outcomes, however, are subject to data quality (garbage in, garbage out), parameter tuning and optimization of various competing objectives (such as recall and precision). In addition, the purpose of the classification system at hand (e.g. delivering ads or catching terrorists) will always introduce hidden biases, assumptions and reward systems which will taint the “reality” perceived by the machine, from the very moment of measurement on.

It is scary to think that machines know everything about us. It is even more concerning to think that they think they know, but actually don’t. That is the conclusion of Moritz Stefaner, Mark Shepard and Julian Oliver about big companies spying on us while we surf the World Wide Web. Here they explain the motives behind their venture Candygram, which was exhibited at the Ars Electronica Festival in 2015.
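The trade-off between recall and precision mentioned above can be made concrete with a small sketch. The scores and labels below are invented for illustration and do not come from the project; the function name `precision_recall` is likewise hypothetical. The sketch only shows how moving a decision threshold changes how many people are wrongly flagged (the false positives).

```python
def precision_recall(scores, labels, threshold):
    """Flag every score >= threshold and return (precision, recall, false_positives)."""
    flagged = [s >= threshold for s in scores]
    tp = sum(1 for f, y in zip(flagged, labels) if f and y)       # correctly flagged
    fp = sum(1 for f, y in zip(flagged, labels) if f and not y)   # wrongly flagged
    fn = sum(1 for f, y in zip(flagged, labels) if not f and y)   # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, fp


# Hypothetical classifier scores and ground-truth labels (assumed data).
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, False, True, False, False, False, False]

for threshold in (0.75, 0.5, 0.25):
    p, r, fp = precision_recall(scores, labels, threshold)
    print(f"threshold={threshold}: precision={p:.2f} recall={r:.2f} false positives={fp}")
```

In this toy data, lowering the threshold catches every true case but flags more and more people who do not belong in the category, which is the kind of error the project's title points at.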

The Candygram program exposes the speculative nature of profiling via tracing personal data…

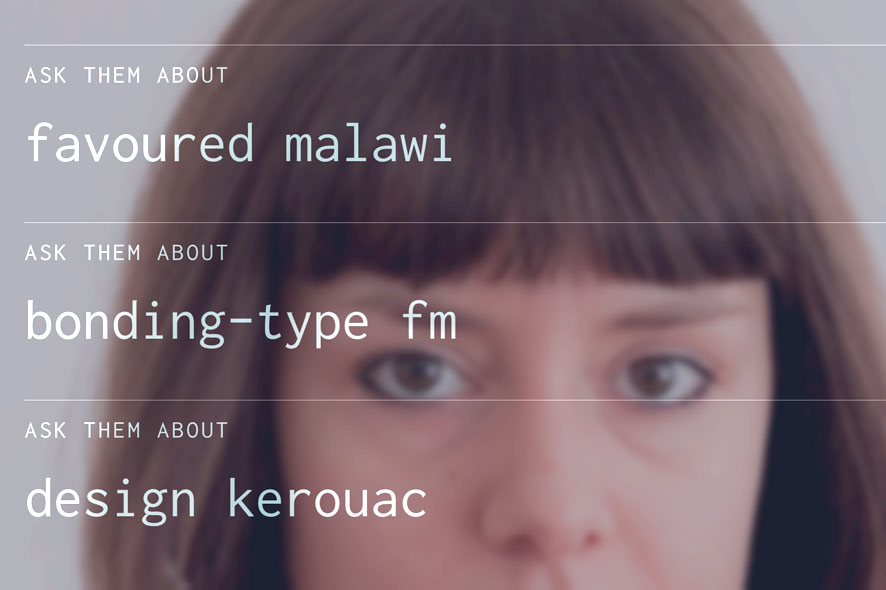

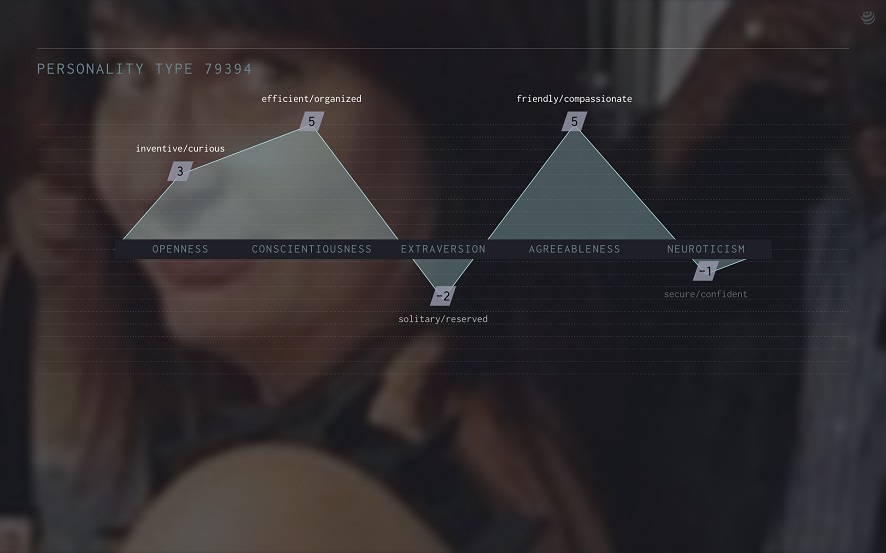

Moritz Stefaner: Right, the design of the Candygram corporate identity and the presentations we show to users (their data profiles) reflect the above-mentioned tension. The presentation moves from simple, straightforward facts (such as the name, profession, and home town of a person), to an associative view of related image materials and word combinations that characterize the semantic context around a person’s profile, to a highly speculative personality profile which assigns the person numeric scores along the “big five” personality traits. These trigger the cognitive dissonance we are looking for, ranging from “how come they know all this about me?” through “do they really think they can judge my personality based on so little data?” to “this is straight up wrong, I feel misrepresented”. The progression of materials from well-grounded to speculative information about oneself makes the process of extrapolation based on incomplete information tangible and personally relevant.

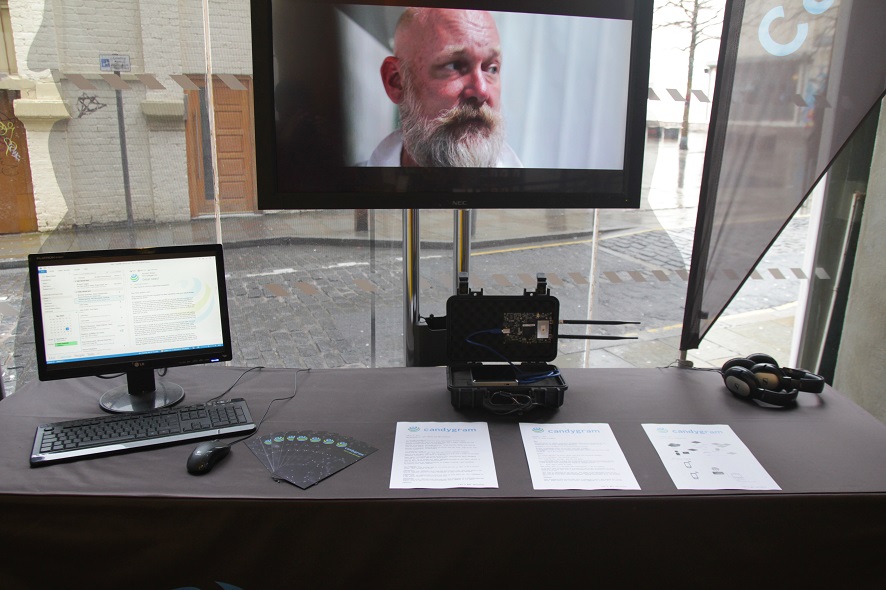

Mark Shepard in conversation and on the screen. Credit: Mark Shepard

What can data provided by mobile networks reveal about us and what are the limitations of profiling in that way?

Mark Shepard: On the most basic level, our mobile phones produce digital traces of where we’ve been, called location histories. From this data, it is possible to infer how frequently we’ve been there and where we are likely to go next. It is trivial to map these locations to specific venues, and make assumptions about what we are doing there. When location histories of large subscriber sets are aggregated, it becomes possible to infer with whom we’ve been, how frequently we’ve been together, etc. These spatial associations, however, are based on a mobile handset containing a SIM card, not necessarily a specific individual. In some parts of the world it is common to share a mobile phone between multiple people. In other situations we simply leave our phones behind.

So an indexical relation between the location histories and specific individuals is not possible. Other information that our phones reveal about us (such as metadata associated with whom we call or text, the duration of the call, etc.) can be used to establish a graph of an individual’s social network. These social associations can in turn be quite revealing. For example, a study at MIT by two undergraduates showed how you could reasonably predict the sexual orientation of someone by analysing their friendship associations on Facebook.
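The aggregation of location histories described in this answer can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the method used in the project: the record format `(sim_id, venue, hour)`, the one-hour time bucket, and all identifiers are hypothetical. Note that the keys are SIM cards, not people, which is exactly the limitation raised above.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical location records: (sim_id, venue, hour of day). Assumed data.
records = [
    ("sim_a", "cafe", 9),
    ("sim_b", "cafe", 9),
    ("sim_a", "office", 10),
    ("sim_c", "office", 10),
    ("sim_a", "cafe", 18),
    ("sim_b", "cafe", 18),
]


def co_location_counts(records):
    """Count how often each pair of SIM cards shows up at the same venue in the same hour."""
    present = defaultdict(set)
    for sim_id, venue, hour in records:
        present[(venue, hour)].add(sim_id)

    counts = defaultdict(int)
    for sims in present.values():
        # Every pair of handsets seen together counts as one co-location.
        for pair in combinations(sorted(sims), 2):
            counts[pair] += 1
    return dict(counts)


pairs = co_location_counts(records)
for (first, second), n in sorted(pairs.items()):
    print(f"{first} and {second} were co-located {n} time(s)")
```

A count like this says only that two handsets were in the same place at the same time. A shared phone, or one left at home, produces the same record as a meeting between two people.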

The audience of the Ars Electronica Festival 2015 got a glimpse of what their online behavior reveals about them. Credit: Mark Shepard

Is there really a disclosure of facts we are not aware of?

Julian Oliver: There most certainly is, although there are many initiatives seeking to raise awareness of what is being disclosed. The documents made public by Edward Snowden are the most widely known in this regard. What’s not discussed as much, however, is how discrete data points, seemingly banal in and of themselves, can be operated upon algorithmically to generate insight and knowledge that is not readily apparent from the data points alone.

Especially since Snowden uncovered the NSA’s activities, the public should be aware that their internet activities are being spied on. Where does the climate of accepting disclosure come from? Are we living in an age of narcissism? Or is it fatalism that makes people careless? What have your experiences been talking with participants after they took part in your project?

Mark Shepard: I think there are at least two ways to address this. First, we are increasingly willing to trade bits of personal information for free access to online services. When you submit an email address to get online at an airport, you are doing just that. Here it’s less a question of narcissism than of convenience. Second, there is the rationale that we’ve all heard before: “I’ve got nothing to hide, so I’m not worried.” Unfortunately that position doesn’t take into account the fact that information collected about us is stored, often for many years. So while you may have nothing to hide now, should things change (new laws, changing cultural perspectives), information that seems relatively benign now may have different implications in the future.

A personality profile as a screenshot. Credit: Mark Shepard

Does participants’ knowledge of how their data is retrieved on the net change their minds about their future behavior?

Moritz Stefaner: It does. People are generally very interested in finding out how they can have more control over the information about them that is available online. Very few have responded with indifference. At the close of the consultations, we offer participants a brochure that lists a series of online resources for learning best practices for digital security. We have also been organizing workshops where we introduce tools and techniques for secure web browsing, email, chat, etc.

Read more about further projects that were presented within the Connecting Cities framework on www.connectingcities.net.