You were involved in developing the “Understanding AI” exhibit from the beginning. What were the objectives?

Ali Nikrang: Artificial intelligence was the set topic from the beginning. It’s a big field that you can break into subdivisions, but the first thing we wanted to do was show how artificial neural networks function and what they can do, since they are the foundational technology for deep learning. That should help visitors get their bearings, so that everyone can get something out of it.

The exhibition team met almost every week from May 2018 onwards to discuss the possibilities in detail, implement them and sometimes reject them. After all, what’s brand new in 2018 could be yesterday’s news by 2019.

First we divided our plans into two major areas: one was for didactic work that explains the basic functions. How does a neuron function? What is a network? What is a convolutional neural network? The other big area was applications. How can artificial intelligence be communicated? What is the best way to show something so complex? I think we found a good way to represent this fascinating technology.

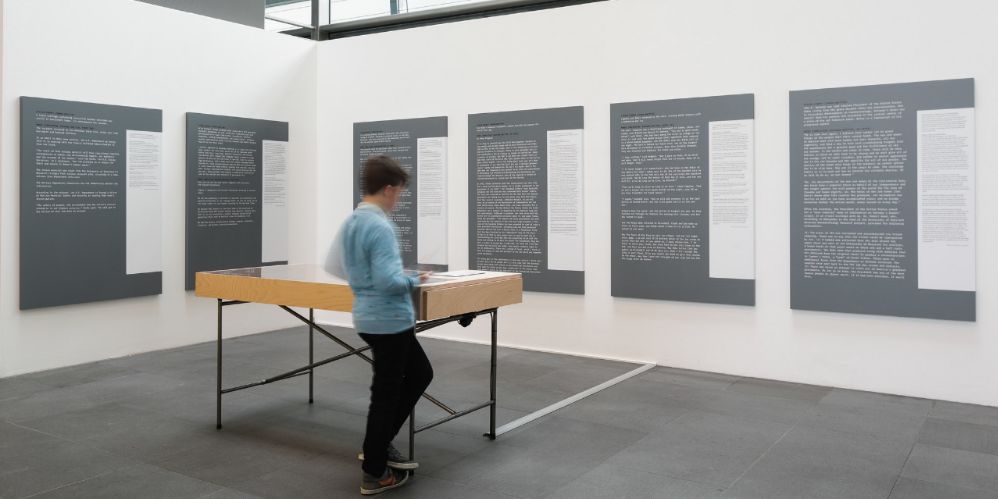

Credit: Ars Electronica / Robert Bauernhansl

What was the biggest challenge?

Ali Nikrang: Keeping up to date is a big challenge. In the field of deep learning in particular, so much is happening, but the exhibition can’t keep up with every development. In spite of that, it has to tell people something new, offer insight into the complex issues, soothe the fears they may have of the unknown, and put them in a position to contribute to the conversation.

In the didactic area, staying up to date isn’t such a big problem because the basic functions remain the same. But when it comes to applications, things can start to seem out of date really fast, because so much is going on. Some installations we had spent a lot of time planning were discarded only a few weeks ago, while the last application came in three days before opening.

Credit: vog.photo

The Understanding AI exhibition is designed to show visitors the similarities and differences in how humans and machines see the world. How do machines “learn”?

Ali Nikrang: Learning in a neural network functions through statistical data. For object recognition, for example, a convolutional neural network is fed a large number of images and has to, say, recognize certain objects in those images. Even if the network is almost always wrong to start with, it can use a technique called backpropagation to learn from the examples. So the machine learns from confirmation or failure, changing its parameters in such a way that the success rate increases. A model like that can have, for example, 138 million trainable parameters that it can adjust. In computer science we speak of “weights” (if you like, you can see an analogy here to the synapses in the brain).

So the learning process is a matter of doing quantitative training until the weights are adjusted in such a way that the right answer comes out. This means the machine learns on its own which visual features are necessary for recognizing objects. When a training phase is completed, the learning is over. That’s not how it is with the human brain.
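To make the idea of adjusting weights concrete, here is a minimal sketch rather than anything taken from the exhibition. It trains a single artificial neuron by gradient descent, which is the one-layer special case of backpropagation. The toy task (logical OR), the learning rate, the number of epochs, and all function names are assumptions chosen purely for illustration.

```python
import math

def sigmoid(z):
    """Squash a number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, inputs):
    """Output of one neuron: weighted sum of the inputs, then sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def mean_loss(weights, bias, data):
    """Average cross-entropy loss: lower means better predictions."""
    total = 0.0
    for inputs, target in data:
        p = predict(weights, bias, inputs)
        total -= target * math.log(p) + (1 - target) * math.log(1 - p)
    return total / len(data)

def train(data, epochs=1000, learning_rate=0.5):
    """Nudge the weights after every example so the error shrinks."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            # For sigmoid plus cross-entropy, the gradient with respect to
            # the weighted sum is simply (prediction - target).
            error = predict(weights, bias, inputs) - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

# Hypothetical toy data set: the logical OR of two inputs.
DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

loss_before = mean_loss([0.0, 0.0], 0.0, DATA)
weights, bias = train(DATA)
loss_after = mean_loss(weights, bias, DATA)
print(f"loss before: {loss_before:.3f}, loss after: {loss_after:.3f}")
```

The neuron starts out guessing 0.5 for every input and ends up with weights that give the right answer for all four examples. A real convolutional network applies the same principle, propagating the error backwards through many layers and millions of weights.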

The brain’s neural plasticity (in the sense of continual learning) stands in stark contrast to how machines work. But there are also similarities. Convolutional neural networks are inspired by the visual cortex in the brain and the way we perceive our visible surroundings.

For textual work, words are represented as vectors in a multidimensional space, which also allows the semantics of the words to be learned. Similar words get similar vectors or positions in the space. This multidimensional space can have 300 dimensions, for example, which you can’t picture visually, only as a row of numbers. Certain numbers together describe a word. The computer learns that there is more similarity between, say, the words sweater and t-shirt than between sweater and Eiffel Tower, because sweater and t-shirt often appear in the same context. Entire sentences are then produced from these word vectors.
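The notion of “similar vectors” can be sketched in a few lines. The example below compares word vectors using cosine similarity, a common measure for this purpose (the interview does not name a specific one). The three-dimensional vectors are hand-made, hypothetical values for illustration only; real embeddings are learned from text and have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy vectors, NOT learned from data.
WORD_VECTORS = {
    "sweater":      [0.9, 0.8, 0.1],
    "t-shirt":      [0.8, 0.9, 0.2],
    "Eiffel Tower": [0.1, 0.2, 0.9],
}

sim_clothes = cosine_similarity(WORD_VECTORS["sweater"], WORD_VECTORS["t-shirt"])
sim_tower = cosine_similarity(WORD_VECTORS["sweater"], WORD_VECTORS["Eiffel Tower"])
print(f"sweater vs t-shirt:      {sim_clothes:.2f}")
print(f"sweater vs Eiffel Tower: {sim_tower:.2f}")
```

With these made-up numbers, sweater and t-shirt point in almost the same direction, while sweater and Eiffel Tower do not. In a trained model that pattern emerges from how often words share contexts, not from values typed in by hand.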

So computers learn the statistics of data. People also learn statistically, to a certain extent.

Credit: vog.photo

Does this mean people and machines learn the same way? Statistically?

Ali Nikrang: From an evolutionary point of view, information processing is a question of survival. A species that can deal with information from the environment faster and more efficiently has an advantage over others. It can recognize danger faster and search for food in a more efficient way.

Nature has found a way here to gather lots of information with few resources. And statistics helps because not every little piece of information needs to be given a lot of significance. You learn which combinations in the real world occur more often as a unit and make sense together. Our perception is trained on the basis of concepts, so to speak, that are relevant for our life.

Credit: Ars Electronica / Robert Bauernhansl

What aspect of artificial intelligence are you personally interested in? Tell us something about your background!

Ali Nikrang: I studied classical music at the Mozarteum in Salzburg and computer science at Johannes Kepler University in Linz. I’m equally fascinated by both areas. It’s unbelievable how much progress has been made in classical music over the last few centuries and in computer science over the last few decades, and of course these build on the mathematical advances of recent centuries. There is still much to be done in connecting these two areas. Musical data are completely different from image or text data, and so are the challenges. There’s no description for what music is and how exactly it’s perceived by people, which is why it’s also distinctly harder to “explain” it to an AI.

As hard as that may sound, it’s also tremendously exciting. Our next step will be to open another section of the exhibition that explores this very topic: AI x Music.

Credit: vog.photo

How important is it to take a variety of disciplines into account in the complex of issues around artificial intelligence?

Ali Nikrang: Most of the achievements in AI come from information technology and mathematics, but cognitive psychology and neurology also have their share, because a lot of it is about perception – which also gives us a point of intersection with art. We are after all trying to imitate how people see the world and for that it’s indispensable to know something about how the brain works. That way you learn about the machine and about yourself. In (human) brain research they’re trying to profit from research into neural networks and understand the brain better that way.

So, many specialized fields come together to explain the world, and perhaps the statistics of data becomes the nature of data.

Ali Nikrang is a Senior Researcher & Artist at the Ars Electronica Futurelab, where he plays a key role in the domain of “Virtual Environments”. He studied computer science at Johannes Kepler University in Linz and composition with a focus on new media at the Mozarteum University in Salzburg. He also obtained a diploma in piano performance at the same university.

Before he joined Ars Electronica in 2011, he worked as a researcher at the Austrian Research Institute for Artificial Intelligence in Vienna, where he gained experience in the field of serious games and simulated worlds. Ali has additional skills in music and new media, including the development of computer-based methods of music visualization, analysis and composition, as well as methods of sound analysis and sound processing.

His research interests include the development of computer-based models for simulating the human sentiments that arise in the acoustic and visual perception of an artwork. Together with the Institute of Computational Perception at Johannes Kepler University, he published a new method to simulate listeners’ perception of harmonic tension in a piece of music. He also developed a computer model for the automatic composition of polyphonic music. Some creations of this model have already been used as soundtracks in commercial projects (e.g. at the Global Brand Conference of Silhouette in Deep Space 8K at the Ars Electronica Center (2017)).