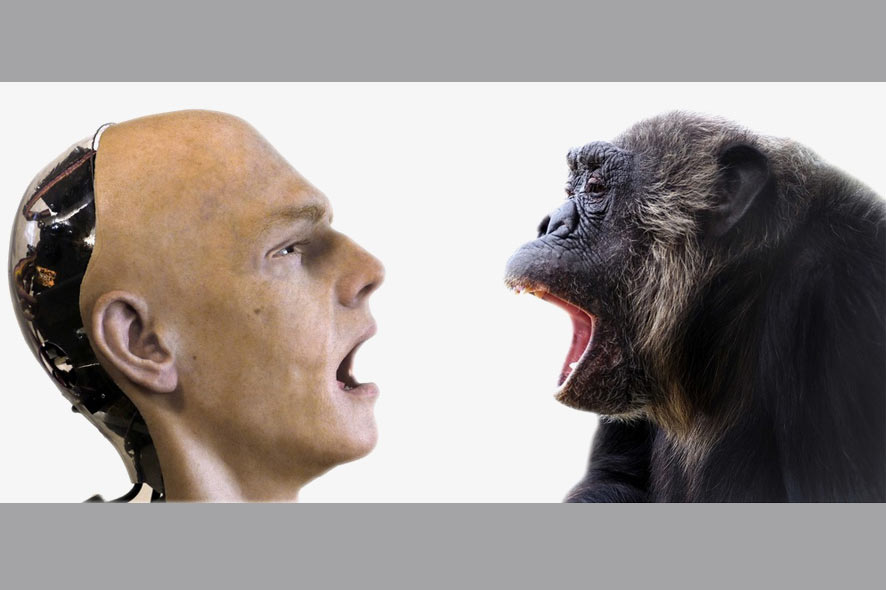

Anyone walking or riding through Linz these days can’t help but notice this picture: a humanoid robot and a chimpanzee facing one another, their mouths agape. The story behind the motif, which embodies the theme of the 2017 Ars Electronica Festival, staged September 7-11, 2017 in Linz, comes from the “Beyond Humans” project supervised by Ramiro Joly-Mascheroni, a scholar at City University of London. In this recent interview, he told us what his project is all about and what he thinks about artificial intelligence.

What’s the Beyond Humans project about?

Ramiro Joly-Mascheroni: Through Art, Aesthetics, Artificial Intelligence and Technology, we are exploring Social Interaction and the Multi-Sensory Integration of events around us. Scientific research in Neuroscience, Evolutionary Psychology and Philosophy is focusing on artificial agents and human interaction. During this era of intense technological advances, we should explore further the brain mechanisms underlying how we perceive actions, how we understand others’ actions, and why we may sometimes attribute human virtues and imperfections to non-human agents and machines.

The Beyond Humans project is part of my PhD research in the Cognitive Neuroscience Research Unit at City University of London, carried out in collaboration with the Mona Chimpanzee Sanctuary. Using an android and neuropsychological methods, such as behavioural and imaging techniques, we explore the neural mechanisms and motor processes involved in the perception, interpretation, and portrayal of facial gestures and other motor actions, especially those crucial to human and animal social communication and interaction.

Beyond Humans, the motif of the 2017 Ars Electronica Festival. Credit: Ars Electronica / Martin Hieslmair

Could you please tell us more about the aim of the Beyond Humans project?

Ramiro Joly-Mascheroni: Through the process of evolution, animals and humans have developed neural mechanisms with precise functional specializations. Previous studies showed that certain human brain regions are indispensable for the processing of faces. We also know that other human brain areas are important for the understanding and execution of movements, whether they are our own or those of others. Some of those brain areas can overlap, and we wonder whether, by doing so, they may provide the neural substrate for our ability to perceive, understand and respond to the actions of others. In our work, the actions of interest, which we constantly perceive and have to interpret, are facial gestures and communicative expressions. Beyond Humans started out by investigating, for the first time, the exchange of communicative gestures across species: initially between dogs and humans, and subsequently, following the evolutionary path, with our nearest genetic relatives, the chimpanzees. We are now exploring issues that we can no longer call ‘between species’; after the inclusion of Artificial Intelligence, we have chosen to refer to them as ‘across communicative agents’.

What is so special about this yawn movement of the pictured android?

Ramiro Joly-Mascheroni: In part of our research, we use simple everyday actions, such as yawning, to explore rather intricate neurocognitive mechanisms, as we believe this is important for the ongoing research in both human and animal social communication and interaction. In turn, this research is also helping us elucidate how we perceive and interpret other people’s actions when we have a sensory processing impairment, for example as the result of a brain lesion or a gradual loss of sensory perception. One of the exciting implications of our research is its contribution to the work on Sensory Substitution Devices (SSDs). These studies aim to help blind and deafblind children, who need to learn how to perceive and accurately interpret others’ actions in order to communicate and interact socially.

Sometimes we observe actions in others that we do not intend to mimic. Yet they trigger the urge in the observer to produce the same action, which is why we term them ‘contagious’. Examples of such contagious actions are laughter and yawning, both of which we sometimes refer to as ‘embodiment-inducing actions’. We know how it feels to perform these actions ourselves, and our perceptual systems are so fine-tuned to them that we ‘embody’ the action; we feel it in our body. This rapid, almost automatic response can provoke the perceiver to produce the same motor act, even when they are not consciously or purposely intending to do so.

In the domain of action and body movement perception, a specialized ‘neural action mirroring system’ has been discovered, the so-called Mirror Neuron System (MNS). This neuronal network seems to be active when we perform, and when we observe certain actions being performed. Importantly for our research, this system appears to be sensitive to visual and motor similarity between the observer and actor.

Neuroimaging studies found that those brain areas known to be part of the MNS were active both when someone observed someone else yawning and when they subsequently yawned themselves. Visually perceiving someone yawning, reading the word yawning, watching a video or even looking at a still picture of someone yawning, may cause the observer to yawn.

“Other studies carried out with robots or moving avatars as stimuli have shown that robotic actions can engage the human perceiver’s motor system, too. So, we created this android which performs several facial expressions including yawning, smiling and frowning.”

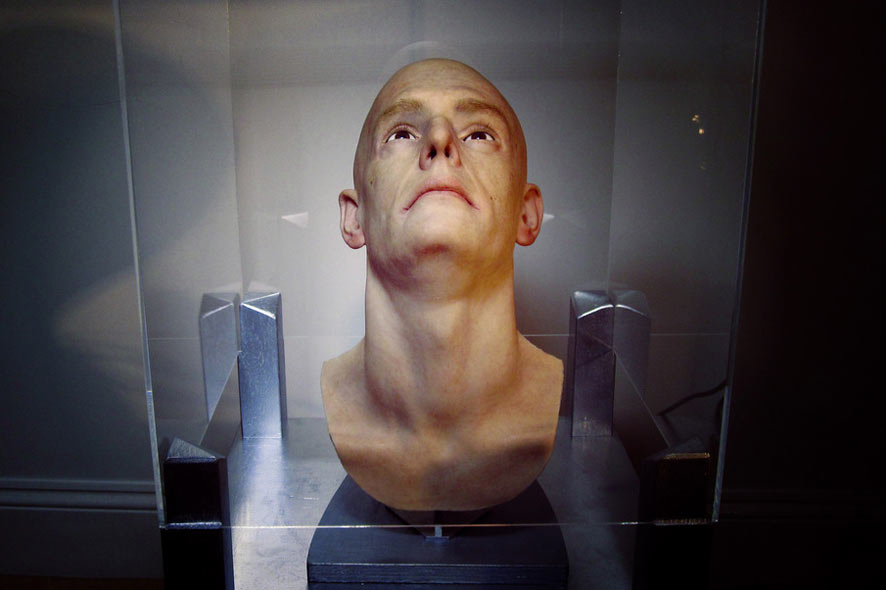

Credit: Ramiro Joly-Mascheroni

Why is scientific research more and more focusing on artificial agents and human interaction?

Ramiro Joly-Mascheroni: In fields such as Neuroscience, Robotics and Artificial Intelligence, there is increasing interest in exploring the acceptability of, and reactions to, artificially created inanimate objects, such as androids and robots. Our work expands into anthropology and evolutionary psychology and investigates simple neuropsychological issues, as well as the more intricate philosophical ones. We aim to explore how humans socially interact. From a comparative perspective, we are investigating how animals communicate, and, from an evolutionary perspective, we try to trace our findings as far back as to the origin of language.

The android’s yawning was sufficient to elicit yawn contagion in chimpanzees. The inanimate agent’s actions seem to have conveyed a message which was picked up by the animals. The results of our study suggest that chimpanzees are capable of interpreting a communicative signal produced by an unfamiliar model, humanlike in appearance, but ultimately an android. This finding represents important evidence of relevance to evolutionary theories and comparative perspectives on action perception and action understanding. The finding that our genetically closest primate relative can catch yawns from a man-made object is both novel and interesting. The implication of this finding, of an animal’s embodied experience with an object, is that the phylogenetically old phenomenon of contagious yawning may have been part of a pre-language form of communication.

“A yawn, regardless of its unknown primary role, may have always carried a non-verbal communicative function, and its contagiousness aspects may serve us to find out more about how humans and animals developed adaptive functions, ways of communication, synchronization and social interaction.”

What are the benefits and risks of artificial intelligence in your opinion?

Ramiro Joly-Mascheroni: We are, in fact, exploring the potential advantages and disadvantages of using artificial intelligence agents. This particular robotic head was built with the help of award-winning prosthetics and special effects experts, and as a collaborative work with neuroscientists, primatologists, blind individuals, stroke patients, physiotherapists, teachers and other specialist educators. This has already provided us with interesting implications and benefits as a consequence of technological advances in artificial intelligence. However, these well-meaning advances can also present us with important moral dilemmas.

Let us think about this possible scenario, sometimes used in decision-making research. For some years, trains without staff have operated on some of our railway tracks. A driverless train is approaching a fork in the tracks. If the computer or robot driving the train allows it to run on its current track, a crew of five working on the line will all be killed. If the computer or robot steers the train down the other branch, a lone worker will be killed. So, what should the robot do?

The current and growing use of robots and artificial agents has raised important moral and ethical concerns for the near future. Some decision-making processes involve moral judgements, which can sometimes present us humans with enormously difficult challenges. Far too frequently, a moral dilemma can appear almost unsolvable. Some argue that there is an urgent need to build artificial agents capable of unravelling these and other types of dilemmas. But will we ever be able to create artificial intelligence that can solve the moral dilemmas we can’t crack ourselves? This intensifies the challenge ahead, as moral and ethical values would somehow have to be embedded in the computer’s software.

It seems evident that some artificially intelligent agents will, at some point in the immediate future, need to follow some decision-making processes. Indeed, according to our research, it is possible that we may contagiously perform the same motor actions – in our experiment an inoffensive and harmless action, i.e. a yawn – as those performed by the ‘morally and ethically unaware’ android we created. Therefore, if there is a possibility that we may unintentionally emulate the actions of an Artificial Intelligence agent, does this mean we need to limit or control their capabilities, or our own?

Ramiro Martin Joly-Mascheroni (IT) is a Psychology PhD student exploring how blind children and adults perceive actions. Within a comparative and evolutionary perspective, he is investigating how human action perception differs from that of animals. He developed an android as one of a series of tools used by blind individuals and stroke victims to train, rehabilitate, and regain control of their own facial expressions, and to aid interpretation of others through biofeedback systems.