Moving or shifting objects using only the power of the mind is a phenomenon that has emanated from the realm of parapsychology in which a preoccupation with so-called telekinesis reflects a fascination with people who exude spiritistic powers. But there has never been proof of these supernatural forces, so there’s not the slightest scientific basis for them. Nevertheless, for centuries now, humankind’s interest in going beyond our motor skills has been undiminished. And in fact, the latest research results nurture the presumption that, in the foreseeable future, it will indeed be possible to harness the power of thoughts to move things.

The use of technology to enhance inborn human capabilities has already achieved astounding results in the medical field. For instance, quadriplegics can express their thoughts by employing eye tracking to formulate messages. This technology, which was originally used in the advertising industry to analyze consumers’ shopping behavior, enables quadriplegics to literally write by glancing. Now, Ars Electronica has launched a collective effort of four of the institution’s divisions—Futurelab, Solutions, Festival and Center—to bring to fruition humanity’s age-old dream of moving objects by merely thinking about them or looking at them. And visitors to the Ars Electronica Festival will not only be able to marvel at this phenomenon; festivalgoers themselves will be able to actually take command of an earthmover with their eye movements and mental powers. And keep in mind: by no means is this a bunch of hocus-pocus; it entails the application of solid scientific findings. We discussed this project with Erika Mondria, director of the Ars Electronica Center’s Brainlab and herself a researcher. And we also interviewed Ali Nikrang, Senior Researcher & Artist at the Ars Electronica Futurelab.

Nina Kulagina was a Russian woman who was claimed to have psychic powers, particularly psychokinesis, and who was said to be able to move objects without touching them. Source: YouTube

Erika: The entire undertaking is conceived as an experiment to develop prototypes on the basis of two technologies to operate an earthmover: on one hand, via eye contact and, on the other hand, through mental concentration. Eye tracking technology will be in use throughout the day at the Ars Electronica Festival, but we’ll also be staging highlight presentations featuring a brain-computer interface. Eye tracking hardware analyzes the movements of a subject’s pupils, so that focusing on a particular spot works like pushing a button. This is already being used successfully in the medical field, as is the brain-computer interface. I’m personally familiar with the case of a coma patient in Sicily who, thanks to this technology, was able to communicate for the first time in 10 years. Our software and hardware supplier, g.tec medical engineering GmbH, an Upper Austrian firm with which I have a good, long-term working relationship, developed a system that swiftly recognizes whether the subject’s mental response is indicative of a YES or a NO answer. This technological innovation is what enabled the patient to tell her daughter that she had always felt her presence and understood what she said.
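Erika's remark that focusing on a spot "works like pushing a button" is commonly realized as dwell-time selection. The following is only a minimal sketch of that idea, not the software used at the festival: the `GazeSample` type, the `dwell_select` function, the 50-pixel radius and the one-second dwell time are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class GazeSample:
    t: float  # timestamp in seconds
    x: float  # horizontal gaze position in pixels
    y: float  # vertical gaze position in pixels


def dwell_select(samples, target, radius=50.0, dwell_s=1.0):
    """Return the time at which the gaze has rested on `target` for
    `dwell_s` seconds without leaving it, or None if that never happens.

    `radius` and `dwell_s` are illustrative values, not measured ones.
    """
    tx, ty = target
    start = None  # time at which the current uninterrupted fixation began
    for s in samples:
        inside = (s.x - tx) ** 2 + (s.y - ty) ** 2 <= radius ** 2
        if not inside:
            start = None  # looking away resets the timer
            continue
        if start is None:
            start = s.t
        if s.t - start >= dwell_s:
            return s.t  # the "button" counts as pressed here
    return None
```

The reset on looking away is the important design choice: a passing glance must not trigger a command on heavy machinery, so only an uninterrupted fixation counts.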

g.tec medical engineering GmbH introduced its first brain-computer interface for patients in 2009.

How did Ars Electronica’s individual departments implement this project with the earthmover? What range of expertise did this call for?

Erika: The Festival provides us with an experimental space, by which I mean logistical support as well as a high-profile public setting. The Futurelab, of course, brings in decades of experience in programming and R&D, as well as the courage that it takes to set up such a project. Solutions brings to the table skills and insights in communication custom-tailored to the market. This calls for contacts and the capacity to recruit partners that are prepared to get involved in a joint venture like this. And needless to say, we needed an earthmover, which had to be technically modified. And last but not least, there was the experience I gained at the Ars Electronica Center working together with staffers who possess social intelligence, which is what it takes to motivate people to participate and to explain to them how such an experiment proceeds. This means helping them to overcome their inhibitions, briefing them properly, and seeing to it that everything runs smoothly with respect to the human factors. Because one thing’s for sure: if you don’t provide solid orientation to the human subjects, then this whole apparatus isn’t going to function.

In 2003, Zach Lieberman, James Powderly, Evan Roth, Chris Sugrue and Theo Watson developed “EyeWriter”, low-cost eye-tracking software that enables patients to draw on a computer screen using eye movements. Credit: Zach Lieberman, James Powderly, Tony Quan, Evan Roth, Chris Sugrue, Theo Watson

So how exactly does this technology function? How does the information travel from the brain to the device?

Erika: This device doesn’t translate your thoughts on a 1:1 basis. That kind of direct translation is indeed how language reconstruction works, but it entails a direct, hard-wired linkup to the neurons of your brain, which is how it’s actually done in medical cases. Thoughts generate electrical spikes, and these impulses can be registered. The swings in voltage can be seen as a graph on a computer screen, and software can read them as information, just like a language. There have even been experiments in which the subject’s skull has been opened and very fine needles inserted into various areas of the brain, where the information is then recorded. Five years ago, g.tec founder Christoph Guger expressed the opinion that this wouldn’t be possible for another 20-30 years, which provides a good indication of the rapid development of this technology that’s still in its infancy.

The project at the festival is a look into the future. We’re not hard-wiring cables to people’s brains like I just described… instead, there are eight spots on the scalp at which we can measure the so-called P300 wave. This potential emerges 300 milliseconds after a person has had the experience of observing a particular event that s/he expected to occur.

What seems like science fiction has been made a true-to-life fact by the Ars Electronica Futurelab’s joint venture with Wacker Neuson, a manufacturer of heavy-duty construction equipment. Credit: Wacker Neuson Linz GmbH

Ali: Simply imagine a huge football stadium in which there are hundreds of thousands of noises made by people—this corresponds to the approximately 100 billion neurons in the brain. We have eight sensors that are attached to the scalp. You can conceive of them as microphones that amplify what a command has triggered. And these microphones are positioned at eight key locations to provide us with particular information, which is, of course, no simple matter since ambient noise overlays the specific sounds that we’re trying to filter out. Obviously, with this small number of microphones, it’s impossible to hear what every single individual in the stadium is saying, but we sure can identify when a goal has been scored by the boisterous cheering it triggers. And after listening for some length of time, we can even say which of the two teams scored that goal. Now, applying this metaphor to commands for the operation of the earthmover, it means that concentration on the part of the subject is essential to assure the audibility of those impulses that are necessary to trigger a command. If that concentration is disturbed, then no command is issued. In the analogy cited above, this interference is the noise being made by the other spectators. In the human brain, they’re impulses from those neurons whose information is irrelevant to the matter at hand. Bottom line: this is a complicated matter. If we were to use more microphones or better hook-ups, then we’d have more precise information, but this isn’t absolutely necessary in an earthmover project that entails only eight commands. In their training sessions, festivalgoers will learn the commands and what they have to focus on to execute them. 
This enables us—just like a blind person attending a football match—to ascertain whether the cheering after the goal was scored occurred on the left side or the right side, and we can, in turn, draw conclusions about the mental functionality or the powers of concentration of those who take part in this experiment.
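Ali's stadium analogy corresponds to a standard trick: a single response is drowned out by background activity, but averaging many repetitions lets the cheer stand out. The sketch below illustrates this with synthetic, single-channel data. It is not the festival's signal chain; the sampling rate, epoch length, noise level, bump shape and scoring window are all assumed values chosen for illustration.

```python
import math
import random

FS = 250       # assumed sampling rate in Hz
EPOCH_S = 0.8  # assumed length of the recording after each stimulus, in seconds


def simulate_epoch(is_target, rng, noise_uv=10.0, p300_uv=5.0):
    """One synthetic epoch in microvolts: Gaussian background noise, plus a
    bump centered 300 ms after the stimulus if the subject was attending."""
    epoch = []
    for i in range(int(FS * EPOCH_S)):
        t = i / FS
        v = rng.gauss(0.0, noise_uv)
        if is_target:
            v += p300_uv * math.exp(-((t - 0.3) ** 2) / (2 * 0.05 ** 2))
        epoch.append(v)
    return epoch


def average_epochs(epochs):
    """Sample-by-sample mean; uncorrelated noise shrinks, the bump remains."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]


def window_mean(signal, start_s=0.25, end_s=0.45):
    """Mean amplitude in the window where the bump is expected."""
    lo, hi = int(start_s * FS), int(end_s * FS)
    segment = signal[lo:hi]
    return sum(segment) / len(segment)


rng = random.Random(0)
attended = average_epochs([simulate_epoch(True, rng) for _ in range(40)])
ignored = average_epochs([simulate_epoch(False, rng) for _ in range(40)])
print(window_mean(attended), window_mean(ignored))
```

With the assumed numbers, the bump is smaller than the noise in any single epoch, which is why the subject has to stay concentrated over many repetitions before a decision can be made.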

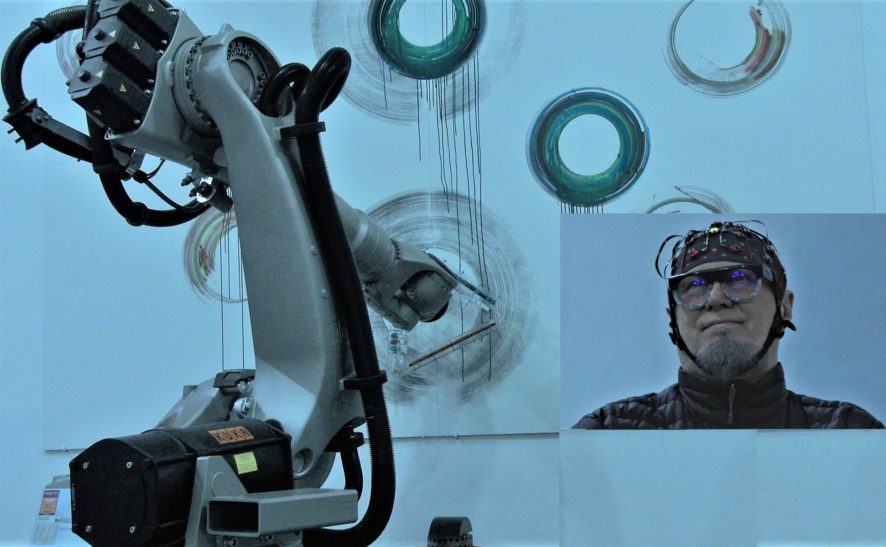

Dragan Ilić uses an elaborate brain-computer interface (BCI) system to control a hi-tech robot with his brain and state-of-the-art technology. Credit: A3 K3 Intermedia/Trans-technological performance & interactive installation by Dragan Ilic. Courtesy of the artist & GV Art London.

What can go wrong on the human side? After all, as you can well imagine, when people put on this sensor cap hooked up to these cables, they just might feel like mice in a lab!

Erika: The eight microphones that Ali cited as an example correspond to only eight electrodes, and their job is to register the spectators’ cheers, that is, to measure the P300 potential. Since our thoughts are constantly being distracted, this corresponds to the overlaid signal that’s difficult to precisely localize. But our device can do it! And that gives you an idea of what an extraordinary performance that is, measuring magnitudes that occur only in the microvolt range. Then this huge quantity of data has to be forwarded and digitized, either directly via cable or with wireless technology. A computer model then decodes the mass of data and computes the differences in milliseconds. THAT’S what this high-performance technology can do!

To what extent did conventional software have to be reprogrammed for use in this project?

Ali: I had to teach the software to comprehend the specific commands—for instance, to move the machine’s digger arm in a certain direction. You can conceive of this as a matrix of symbols. The appearance of particular symbols causes a person to react mentally in various ways, to execute specific commands. But the translation has to function in such a way that the machine can receive or decode the command. This is like assigning values that are digitized, and, on the other end, they come out in such a way that a technology can read them and then issue the earthmover a command via electrical impulse. That’s how I would explain this to a layperson.
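The translation step Ali describes, from a matrix of symbols to a value the machine can read, might look roughly like the sketch below. Everything in it is hypothetical: the eight symbol names, the numeric codes, the thresholds and the `select_command` function are invented for illustration, and the real interface to the earthmover is not described in this interview.

```python
# Hypothetical symbol matrix: eight commands, each with a numeric code
# that a machine controller could receive.
SYMBOLS = [
    "arm_up", "arm_down", "arm_left", "arm_right",
    "bucket_open", "bucket_close", "forward", "reverse",
]
COMMAND_CODES = {name: code for code, name in enumerate(SYMBOLS)}


def select_command(scores, min_score=1.0, min_margin=0.5):
    """Pick the symbol with the strongest brain response, or None.

    `scores` maps each symbol to a response strength (for example an
    averaged amplitude in microvolts). A command is issued only if the
    best score is high enough and clearly ahead of the runner-up, so a
    distracted subject produces no command at all.
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (best, best_score), (_, runner_up_score) = ranked[0], ranked[1]
    if best_score < min_score or best_score - runner_up_score < min_margin:
        return None
    return best


scores = {name: 0.1 for name in SYMBOLS}
scores["arm_up"] = 2.5  # the subject concentrated on this symbol
command = select_command(scores)
if command is not None:
    print(command, COMMAND_CODES[command])
```

Returning `None` for ambiguous input mirrors the behavior described in the interview: if concentration is disturbed, no command is issued.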

The exciting aspect of this project is the fact that we’re bringing together two worlds that, at first glance, are totally incompatible. On one end, we have the world’s most precise device, one that works in the microvolt range—namely, the human brain. And on the other end, we have motorized machinery that was built to exert massive power to move heavy objects. The artistic aspect of this project is the collision of two worlds that could hardly be more dissimilar!

Erika Mondria has managed the BrainLab at the Ars Electronica Center since 2009, working constantly on new cooperations and programs for visitors to reflect the permanently changing field of neuroscience and brain-computer interfaces. She studied Fine Art & Cultural Studies at the Linzer Art University and the Linzer Johannes Kepler University (graduated with honours), Fine Art in London at London Institute Camberwell College & Central St. Martins College and Fine Art in Marseille at Aix-Marseille Université.

Ali Nikrang is Senior Researcher & Artist at the Ars Electronica Futurelab, where he is a member of the Virtual Environments group. One of his main occupations is bridging the gap between the real and the virtual world by developing new methods that bring the user closer to the feeling of being in the real world. He also works on the development of computer-based models to simulate the human sentiments that are stirred by the visual and acoustic impact of art experiences.