With Ricercar, Ali Nikrang, Key Researcher and Artist at the Ars Electronica Futurelab, has developed an AI as part of his research on “creative intelligence”. Trained on 25,000 pieces of music, it can compose music on its own, and by systematically evaluating musical structures it achieves surprisingly natural and emotional-sounding results. How is that possible? Ali Nikrang talks about his research on artificial intelligence and music, its (de-)mystification, its limits and its possibilities:

How do you actually compose music using artificial intelligence?

Ali Nikrang: MuseNet was – in my opinion – the first application capable of composing music of such high quality that it was hard to tell if the music was created by an AI system or a human composer.

Mahler Unfinished, realized at the Ars Electronica Festival in 2019, was just one of our projects. Its goal was to find out what a good AI composition system could do with the theme of a truly extraordinary symphony: it begins with a single voice, and even its tonality is not entirely clear at first. In other words, it is a challenge for any AI system.

For me, composing a natural and emotional piece with MuseNet based on the theme of Mahler’s unfinished symphony was proof, in 2019, that new technologies such as transformers and attention mechanisms, which are mainly used in natural language processing (NLP), can be used successfully to compose music. To investigate this further, I developed my own AI-based music composition system, Ricercar, which introduces several innovations and enhancements in the field of AI-based music composition.

Ricercar (from ricercare, Italian for “to search for someone or something”) has a different architecture from MuseNet. This is interesting from a technological point of view, because we can deduce from it that the architecture of the system per se is not that crucial. If we have a model that can learn the statistical relationships and dependencies in the data across long stretches of a piece of music, we already have the best possible starting point for AI-based music composition.
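To make the idea of long-range dependencies more concrete, here is a minimal sketch of causal scaled dot-product self-attention, the core operation of the transformers mentioned above. It is a toy under stated assumptions: the note vectors are hand-picked, queries, keys and values are the raw vectors rather than learned projections, and there is only one attention head. It is not the architecture of MuseNet or Ricercar, and `causal_self_attention` is a hypothetical name chosen for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(vectors):
    """Single-head scaled dot-product attention with a causal mask.

    Position i may only look at positions 0..i, so every output depends on
    the whole preceding context, however far back. As a simplification,
    queries, keys and values are the input vectors themselves (no learned
    projection matrices).
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: no looking ahead
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
                  for key in visible]
        weights = softmax(scores)
        # Output = attention-weighted average of the visible value vectors.
        outputs.append([sum(w * value[j] for w, value in zip(weights, visible))
                        for j in range(d)])
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-D note embeddings; the last note repeats the first.
notes = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [1.0, 0.0]]
outputs, weights = causal_self_attention(notes)
print([round(w, 3) for w in weights[3]])
```

Because the last note is identical to the first, it gives equal and maximal weight to positions 0 and 3 and less to the unrelated note at position 1: what matters is similarity, not distance in the sequence.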

I have developed several new approaches for Ricercar, including new ways of using symbolic musical data to represent music with fewer resources. I also focused on making the system aware of recurring structures, such as melodies, that appear repeatedly over the course of a piece, with or without variations.
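What “recurring structures with variations” means can be illustrated with a simple detector. This is a swapped-in technique, not Ricercar’s mechanism, which the interview does not describe: it assumes a melody given as MIDI pitch numbers and finds note patterns that recur, comparing intervals rather than absolute pitches so that a transposed repeat (one simple kind of variation) still counts. `find_repeated_motifs` is a hypothetical name.

```python
from collections import defaultdict


def find_repeated_motifs(pitches, motif_len):
    """Return interval patterns of motif_len notes that occur more than once.

    Working on intervals (differences between consecutive MIDI pitches)
    instead of absolute pitches means a motif is still found when it
    returns transposed. Keys are interval tuples, values are the note
    indices where each occurrence starts.
    """
    if motif_len < 2:
        raise ValueError("a motif needs at least two notes")
    intervals = [b - a for a, b in zip(pitches, pitches[1:])]
    n = motif_len - 1  # a motif of k notes spans k - 1 intervals
    positions = defaultdict(list)
    for start in range(len(intervals) - n + 1):
        positions[tuple(intervals[start:start + n])].append(start)
    return {pattern: starts for pattern, starts in positions.items()
            if len(starts) > 1}


# C-D-E-C, then the same shape transposed up a fifth: G-A-B-G.
melody = [60, 62, 64, 60, 67, 69, 71, 67]
print(find_repeated_motifs(melody, 4))  # {(2, 2, -4): [0, 4]}
```

Exact matching on intervals catches only repeats and transpositions; ornamented or rhythmically altered variations would need a fuzzier comparison.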

Ricercar can concentrate on two or three themes over the course of a piece. This corresponds more closely to the way human composers actually work: a piece of music usually has no more than two or three themes. The rest consists of variations and repetitions with similar structures.

Getting an AI system to focus on two or three themes has been difficult so far. This has to do with the way the AI learns to compose music. After all, all it learns during training is to predict the next note, taking into account all previous notes. When the AI is trained in this way, it tends to produce a kind of flat structure, constantly introducing new structures and themes while paying little attention to the hierarchical structure of the music. For example, it is not able to repeat an entire sequence that served as the main motif at the beginning of the piece. We therefore need special technical mechanisms to make the model concentrate on individual structures in a piece of music.
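The training objective described above, predicting the next note from the previous ones, can be illustrated with a deliberately tiny stand-in. The sketch assumes notes are MIDI pitch numbers and uses a bigram model that looks only at the single previous note, whereas systems like MuseNet or Ricercar condition on all previous notes with a neural network. `train_bigram` and `generate` are hypothetical names; what carries over is the autoregressive loop: predict one note, append it, and feed the extended sequence back in.

```python
from collections import Counter, defaultdict


def train_bigram(pieces):
    """Count how often each note follows each other note across all pieces."""
    counts = defaultdict(Counter)
    for piece in pieces:
        for prev, nxt in zip(piece, piece[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, start, length):
    """Autoregressive generation: predict one note, append it, repeat.

    Greedy decoding picks the most frequent continuation (ties go to the
    one seen first); real systems usually sample from the predicted
    distribution instead.
    """
    sequence = [start]
    while len(sequence) < length:
        options = counts.get(sequence[-1])
        if not options:
            break  # no continuation was seen in training
        sequence.append(options.most_common(1)[0][0])
    return sequence


pieces = [[60, 62, 64, 65, 67], [60, 62, 64, 62, 60]]
model = train_bigram(pieces)
print(generate(model, start=60, length=8))
```

Each step only asks which note usually comes next; nothing in the loop remembers the opening theme or plans a return to it. Real models see far more context, but as described above, plain next-note training still tends toward this kind of flat structure.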

What will make us unique in the future? What role do humans play in the interaction with AI?

Ali Nikrang: The future of AI-based music composition lies in collaboration with humans, because there is no art without human participation. Art arises from intention, and there is no creation without human intention.

Even in the very first cave paintings in human history, we find this intention to depict something and share it with a community. In art, this intentional element is all the more important.

AI systems like Ricercar or MuseNet develop their own view of the data based on statistical patterns. During training they are, so to speak, forced to acquire a kind of essential understanding of music, so that in the end they can generate data similar to what they encountered in the training set. Here, that means composing music that sounds human, natural and emotional.

I think the way AI acquires knowledge from data could also be of interest to humans, and I am sure that artists will use this technology to develop completely new perspectives and forms of art that may be unimaginable from today’s point of view.

It would not be the first time in the history of music that technology has helped to expand our possibilities. The piano itself was an 18th-century invention, and the first flute, built at least 35,000 years ago, was an innovation too. Before that, singing was the only way to express music. But only through collaboration will it be possible to take full advantage of this technology.

Can artificial intelligence also help create art? What can composers learn about music through AI?

Ali Nikrang: Today, artificial neural networks can be trained on large amounts of data, and they very often learn dependencies and relationships in that data that human artists might not have consciously perceived.

That is why such systems could indeed serve as a new source of inspiration for artists. Another application could soon be to make it possible for anyone to compose music without spending years practicing and studying theory. Composing could become much more intuitive than it is today.

If we study a model capable of creating compositions, we might learn something new about music itself. The principle is very simple, and perhaps it is much more important than the act of composing itself. We use composition as a kind of training task to teach the AI model about music; its real task is simply to understand music. The system serves as a bridge between music and technology, and by studying an AI model we might learn why and how music works. A fully trained AI model may have learned something about music that is unknown to us and interesting.

How will AI change our perception of music?

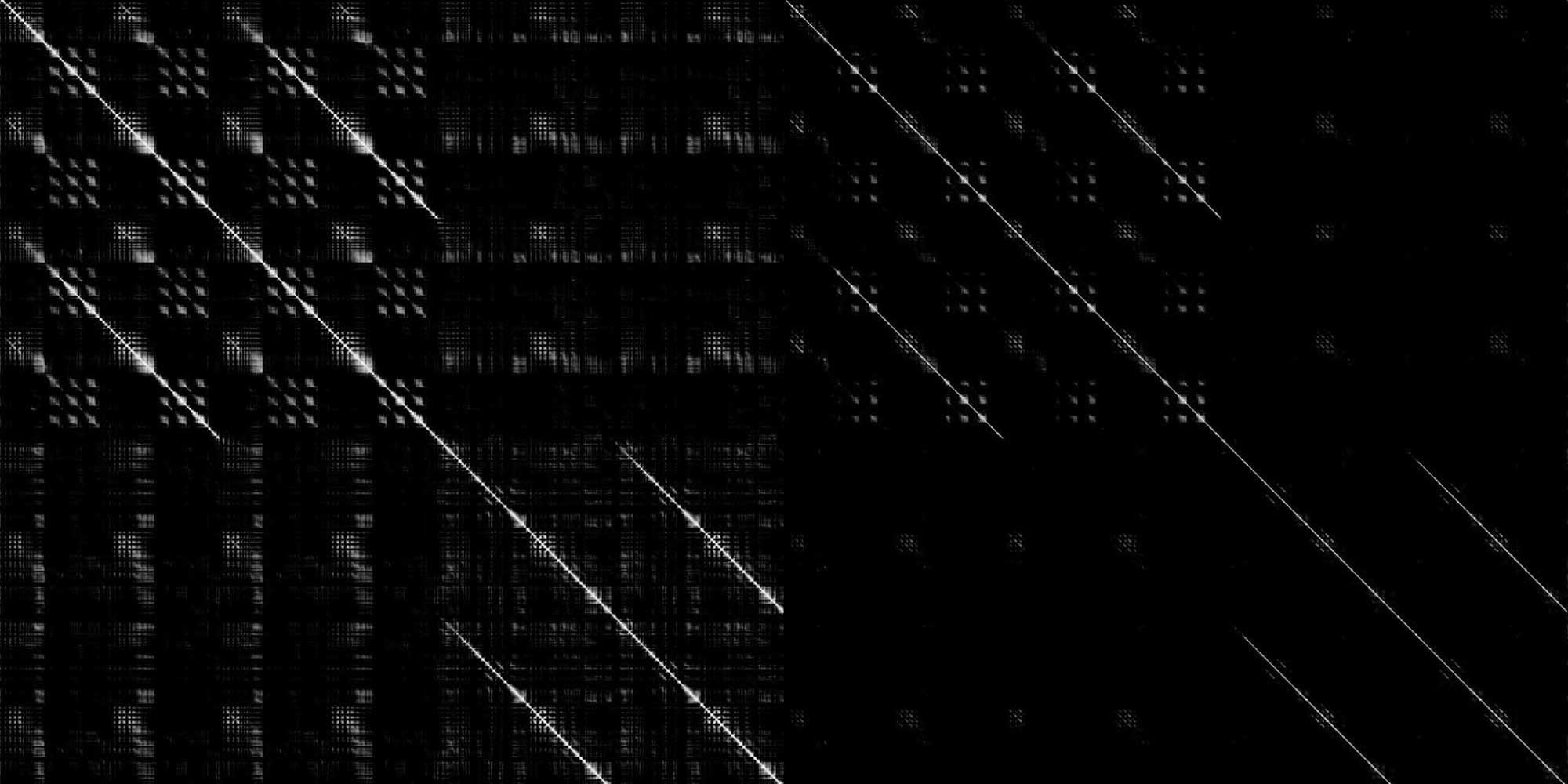

Ali Nikrang: AI can indeed change our perception of music. At the moment, we see music as a series of pieces composed by Beethoven, Mozart or others, and the situation is the same in pop music. AI could create a new perspective. When an AI system is trained on musical data, it learns to represent that data “internally”. The so-called “weights and biases”, which are learned during training and stored as vectors and matrices, allow the model to build this internal representation. After training, the model no longer changes. In other words, the result of training is a large number of vectors and matrices that permanently store everything the model has learned from the data.

The model uses this internal representation to process every musical idea and every musical structure. It is also possible to imagine this in spatial terms: every musical idea is assigned a position in an imaginary multidimensional space. Similar musical ideas lie close to each other, while very different ideas lie far apart. Composing with such a model (like Ricercar) can therefore also be seen as navigating this space, constantly moving from one musical state to another and trying out different possibilities.
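The spatial picture can be made concrete with a toy example. Everything here is an assumption for illustration: the three-dimensional vectors are hand-picked (learned representations have many more dimensions), the idea names are hypothetical, cosine similarity stands in for “closeness”, and straight-line interpolation stands in for “navigation”. None of it is taken from Ricercar.

```python
import math


def cosine_similarity(a, b):
    """1.0 for vectors pointing the same way, near 0.0 for unrelated ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def interpolate(a, b, steps):
    """Evenly spaced points on the straight line from a to b (steps >= 2)."""
    return [[x + (y - x) * t / (steps - 1) for x, y in zip(a, b)]
            for t in range(steps)]


def nearest(query, ideas):
    """Name of the stored idea whose vector is most similar to the query."""
    return max(ideas, key=lambda name: cosine_similarity(query, ideas[name]))


# Hypothetical positions of three musical ideas in a toy 3-D space.
ideas = {
    "lyrical_theme": [0.9, 0.1, 0.0],
    "lyrical_variation": [0.8, 0.2, 0.1],
    "march": [0.0, 0.2, 0.9],
}
path = interpolate(ideas["lyrical_theme"], ideas["march"], steps=5)
print([nearest(point, ideas) for point in path])
```

Walking from the lyrical theme toward the march, the intermediate points first resemble the lyrical variation and then the march, which is the sense in which moving through the space means moving between related musical ideas.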

This also means that the entire musical repertoire can be thought of as a kind of imaginary space in which every composer has a place. You can almost see a composer’s music as an island in this space. The system can generate new pieces that fit a given composer’s style or even imitate the style of a particular piece. This would be a new way of experiencing music, based not on individual pieces and compositions but on our own perception and emotions. It is not yet fully possible today, but it is a plausible scenario for future systems.

So music is also subject to the trend towards personalisation. Will algorithms influence our taste in music, perhaps even narrow it?

Ali Nikrang: That is a good question. It depends, of course, on how these systems are built and used. However, it is realistic to expect AI to influence our musical taste in the long term, just as advances in electronic music over recent decades have changed our taste and led to completely new musical forms.

Artificial intelligence is becoming creative, and contrary to many fears, this seems to favour positive developments even for artists. How do you think artists should react?

Ali Nikrang: From a technological and economic point of view, it is of course very interesting to make music in this convenient way, simply by pressing a button. But that is only the technological and economic point of view.

From an artistic point of view, it will always be necessary for humans to work with the technology, because only then can we expect results that not only sound musically “correct” but also carry deeper human and personal meaning. AI is currently used exclusively as a tool in the creative field, but given the creative potential of such systems, it would make sense to see them as a kind of counterpart or partner. To achieve this, however, new methods of collaboration need to be developed on the technological side to enable natural communication between humans and machines. Creative applications in particular need creative forms of interaction and collaboration. Furthermore, AI-based models are very complex systems, which makes it very difficult to find ways of carrying out a deeply human activity like composing music naturally and intuitively together with an AI system. Collaborative AI, especially in the creative field, is therefore a very important research topic that could help exploit the creative potential of current and future AI systems.

How does AI change the audience? Can this music even trigger the same emotions?

Ali Nikrang: It is indeed a strange feeling to notice that you are reacting emotionally to a piece of music composed by an AI system. On the one hand, you feel somewhat manipulated; on the other hand, you are fascinated by the system, at least from a technical point of view.

AI systems only learn statistical patterns in data. Yet this yields fascinating results, and we ask ourselves: how is it possible that a machine with no understanding or idea of our emotions can produce something so emotional and stimulate emotional reactions in us? Even after many years of working with these systems, I still get a strange feeling when I hear an AI composition and feel emotions along with it.

Learn more about Ali Nikrang and creative intelligence on the Ars Electronica Futurelab’s new website, or read about digital data in major and minor on the Ars Electronica Blog.

Ali Nikrang is a Key Researcher at the Ars Electronica Futurelab with a background in both technology and music. He studied computer science at Johannes Kepler University Linz and music (composition and piano) at the Mozarteum University in Salzburg. Before joining the Ars Electronica Futurelab in 2011, he worked as a researcher at the Austrian Research Institute for Artificial Intelligence in Vienna. His work centers on the interaction between humans and AI systems, particularly in creative applications. This includes investigating the creative output of AI systems and how it can be controlled and personalized through human interaction and collaboration. As a classical musician and AI researcher, he has been involved in numerous projects combining artificial intelligence and music. His work has been presented at conferences and exhibitions worldwide, and he has appeared in several TV and radio documentaries on artificial intelligence and creativity.