by Editorial Team

Women remain worryingly underrepresented in artificial intelligence and data science. According to the World Economic Forum (2018), more than three-quarters (78%) of professionals in these fields worldwide are men, while fewer than a quarter (22%) are women. So is this a man’s world? The answer seems to be yes, at least for now.

At the same time, a number of studies suggest that the underrepresentation of women in AI and machine learning is a major contributing cause of the strong gender bias found in AI and machine learning systems (West, Whittaker, & Crawford, 2019; Wajcman, Young & FitzMaurice, 2020). This raises ethical questions of social and economic justice, inclusion and diversity. The lack of diversity in the sector appears to reinforce and perpetuate problematic traditional gender stereotypes.

Ars Electronica’s European Platform for Digital Humanism connects a network of over 80 partners across Europe. They share the conviction that the processes of digital transformation must be designed and guided with a focus on citizen empowerment, equity and inclusion, and that they should generate conversations that re-evaluate our relationship to the technologies we have created and the way we use them. Gender bias in artificial intelligence cannot be left out of this discussion. Ars Electronica tackled it within the programs of the European ARTificial Intelligence Lab, a Creative Europe project that ran from 2017 to 2020 and highlighted the need for human-centered, art-driven and equitable innovation in AI.

Gender Bias in AI

As Erin Young, Judy Wajcman and Laila Sprejer point out in their report “Where are the Women? Mapping the Gender Job Gap in AI” (Alan Turing Institute, Women in Data Science and AI project, 2021), “although algorithms and automated decision-making systems are popularly known as unbiased and objective, bias is actually very present and is being amplified through AI systems at several stages”. First, the data used to train algorithms may underrepresent certain groups or encode historical bias against marginalized demographic groups, depending on which data are collected and how they are curated. Second, developers’ biased assumptions or decisions, whether they reflect personal values and priorities or a poor understanding of the underlying data, often carry over into modeling and analysis. None of this is surprising: AI systems are biased because humans are. Because these systems learn from data that people collect, label and curate, and are shaped by design choices that people make, they tend to reproduce the biases of their creators and, as the report warns, can even amplify them.

To take an example from popular culture, HAL 9000, the male-voiced artificial intelligence and main antagonist of Kubrick’s “2001: A Space Odyssey”, might be the exception to the rule. Outside the fictional world, practically all the voice assistants we use every day have, almost by default, a female voice and name: Alexa, Siri, Cortana. That might not be a mere coincidence.

Ever since voice assistants entered our lives, technology companies have justified their use of female voices by claiming that studies show consumers prefer female voices to male ones. This justification further perpetuates stereotypes that are present in every facet of daily life. Another reason programmers often give is a lack of data for male voices: over the years, text-to-speech systems have been trained predominantly on female voices, so choosing a female voice for voice automation software is also the most efficient solution. The association between women’s voices and telephone services goes back to 1878, when Emma Nutt became the first female telephone operator. Because the speech data available to the AI industry are dominated by female voices, creating automated male voices can be very difficult. But not impossible.

In 2019, UNESCO, together with the German Federal Ministry for Economic Cooperation and Development and the EQUALS Skills Coalition, published the report “I’d blush if I could: closing gender divides in digital skills through education”, which sets out strategies for closing gender gaps in digital skills through education. The report takes its title from the answer Siri used to give when users told her, “Hey Siri, you’re a bi***.” In April 2019, Siri’s response was changed to the more appropriate “I don’t know how to respond to that”. However, as the report underlines, the voice assistant still responds submissively to clear gender-based abuse.

The report focused in particular on digital assistants and on how they reflect, reinforce and spread gender biases and model tolerance of sexual harassment and verbal abuse. By making women the visible face of the bugs and glitches that result from hardware and software designed predominantly by men, these assistants send explicit and implicit messages about how women and girls should express themselves and respond to requests.

But why should we care so much about the gender gap and gender biases in Artificial Intelligence?

Digital assistants, social media, smart home devices, banking, navigation apps, autonomous vehicles, platforms such as TikTok, YouTube and Netflix, and voice assistants built on speech recognition all rely on AI and have become a vital part of our daily routines. According to voice search statistics, one billion voice searches are performed every month (Georgiev, 2022), and the global AI market is expected to reach a value of $267 billion by 2027 (Jovanovic, 2022). Artificial intelligence is fast becoming an integral part of our daily lives and will be a major driving force of the rapidly accelerating digital transformation. We therefore have a responsibility to confront and question the gender biases inherent in AI models and to look for ways to mitigate them. Big tech and the industries surrounding it urgently need to implement ethical and humane frameworks for AI development that ensure gender diversity and representation, so that AI becomes more inclusive, diverse, balanced and, ultimately, representative. This starts with development teams, which must include people of diverse gender identities, sexual orientations and ethnicities. After all, we as customers and users also need to be more demanding. “We need to pay much closer attention to how, when and whether AI technologies are gendered and, crucially, who is gendering them.”

The European ARTificial Intelligence Lab explored this challenge throughout the project, bringing in the experience and perspectives of artists and creatives to show why their human-centered, art-driven approach is needed in an ethical framework for AI development. The following three projects, presented within the AI Lab over the years, emerged as a counterattack to bias in AI and a demand for more diverse spaces. By including different perspectives, they can help overcome the male-centered image of the compliant, submissive female assistant.

An AI that challenges gender roles

Women Reclaiming AI is a collaborative AI voice assistant and form of activism created by artists and technologists Coral Manton and Birgitte Aga together with an ever-evolving community of self-identifying women. The project aims to reclaim female voices in the development of future AI systems by empowering women to use conversational AI as a medium for protest. Users can talk to the evolving voice assistant at womenreclaimingai.com and see its visual representation, created from a DIY dataset of images of participants and other inspiring women. The initiators aim to create an alternative dataset of women that feels more representative.

Data with a feminist perspective

Feminist Data Set is an ongoing, multi-year art project by machine learning design researcher and artist Caroline Sinders (US). It combines lectures, workshops and calls to action to collect feminist data, which is then used to create a series of interventions for machine learning. What is feminist data? It can be artworks, essays, interviews and books that come from, are about, or explore feminism and a feminist perspective. The data set serves as a means to combat bias and to introduce data collection itself as a feminist practice, aiming to produce a slice of data that can intervene in larger civic and private networks.

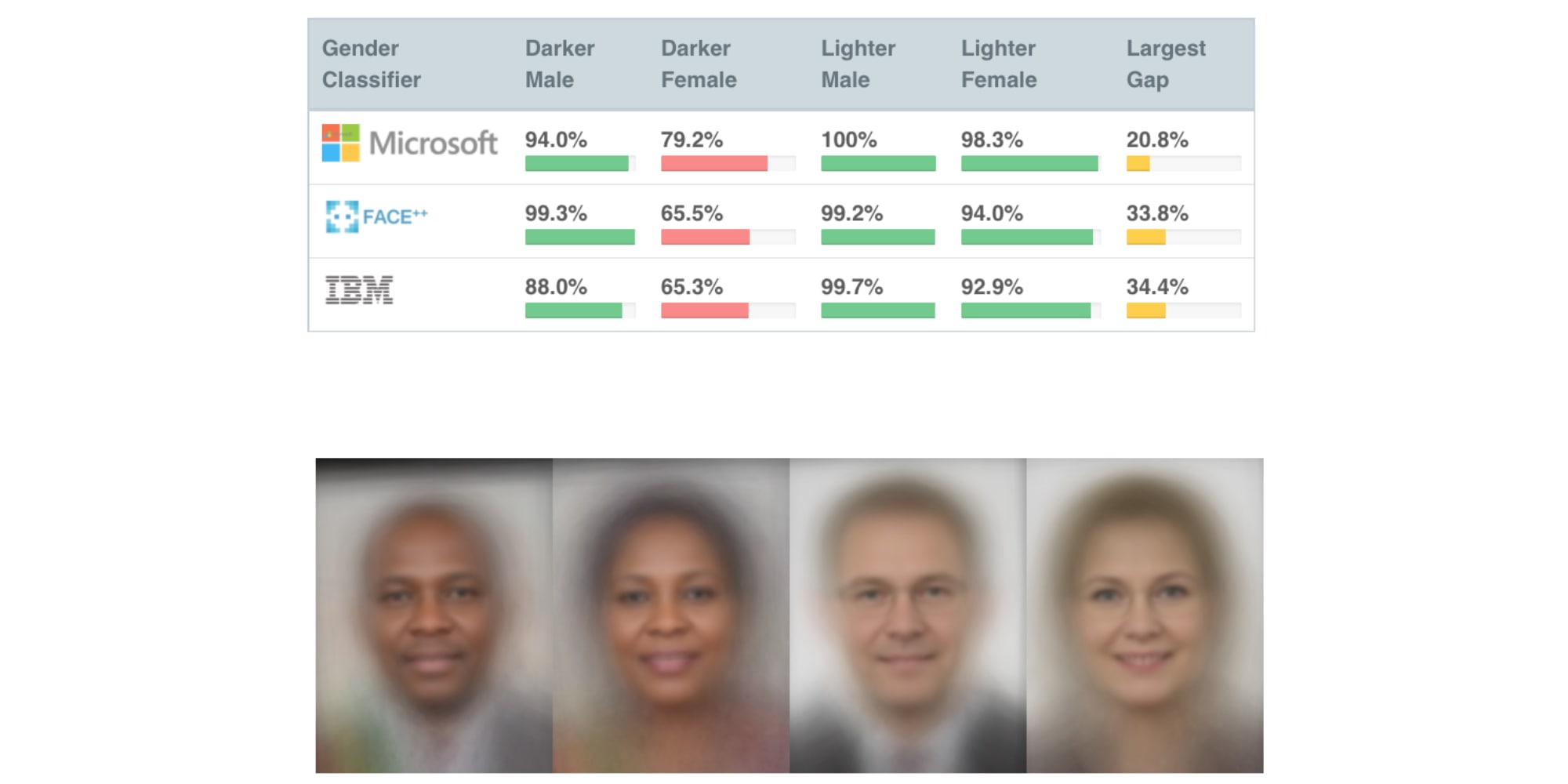

Fighting gender and skin color discrimination in AI

In Gender Shades, Joy Buolamwini and Timnit Gebru investigated the biases of commercial AI facial analysis programs. Their study showed that some of these systems classify gender far less accurately for some groups than for others: error rates were highest for darker-skinned women and lowest for lighter-skinned men. One reason is that the programs are trained on data sets that are unrepresentative or incomplete, particularly with respect to skin color. In response, the researchers created a new benchmark data set, the Pilot Parliaments Benchmark, which serves as a reference for comparing and evaluating such systems. It contains images of 1,270 parliamentarians from three African countries (Rwanda, Senegal, South Africa) and three European countries (Iceland, Finland, Sweden). Unlike earlier benchmarks dominated by lighter-skinned faces, the set is labeled by gender and by skin type on the dermatological Fitzpatrick scale and is more balanced across both, which makes it possible to test how accurately gender is classified for each combination of gender and skin type.
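The core idea of such a benchmark, reporting error rates for each intersectional subgroup rather than a single overall figure, can be illustrated with a short Python sketch. It is not the researchers’ code: the function names and the toy records below are hypothetical and invented purely for illustration, and the split of Fitzpatrick types I to III as “lighter” and IV to VI as “darker” mirrors the binary grouping described in the study.

```python
from collections import defaultdict


def skin_group(fitzpatrick_type):
    """Hypothetical helper: map a Fitzpatrick skin type (1-6) to 'lighter' or 'darker'."""
    if not 1 <= fitzpatrick_type <= 6:
        raise ValueError("Fitzpatrick type must be between 1 and 6")
    return "lighter" if fitzpatrick_type <= 3 else "darker"


def error_rates_by_subgroup(records):
    """Hypothetical helper: compute the error rate per (skin group, true gender) subgroup.

    Each record is a tuple (true_gender, predicted_gender, fitzpatrick_type).
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for true_gender, predicted_gender, fitzpatrick_type in records:
        key = (skin_group(fitzpatrick_type), true_gender)
        totals[key] += 1
        if predicted_gender != true_gender:
            errors[key] += 1
    # Only subgroups that actually appear in the records are reported
    return {key: errors[key] / totals[key] for key in totals}


if __name__ == "__main__":
    # Invented toy records for illustration only, not data from the study
    toy_records = [
        ("female", "male", 5),
        ("female", "female", 6),
        ("female", "female", 2),
        ("female", "female", 1),
        ("male", "male", 4),
        ("male", "male", 5),
        ("male", "male", 2),
        ("male", "male", 3),
    ]
    overall = sum(t != p for t, p, _ in toy_records) / len(toy_records)
    print(f"overall error rate: {overall:.3f}")
    for (group, gender), rate in sorted(error_rates_by_subgroup(toy_records).items()):
        print(f"{group} {gender}: {rate:.2f}")
```

On these toy records the overall error rate is 12.5%, yet the only error falls on a darker-skinned woman, so that subgroup’s error rate is 50%. A single aggregate accuracy figure would hide exactly the kind of disparity that a disaggregated benchmark is designed to expose.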

Sources:

Young, E., Wajcman, J., & Sprejer, L. (2021). Where are the Women? Mapping the Gender Job Gap in AI. Policy Briefing: Full Report. The Alan Turing Institute.

Fisher, E. (2021). Gender Bias in AI: Why Voice Assistants Are Female. Adapt. https://www.adaptworldwide.com/insights/2021/gender-bias-in-ai-why-voice-assistants-are-female

Latson, J. (2015). The Woman Who Made History by Answering the Phone. TIME. https://time.com/4011936/emma-nutt/

West, M., Kraut, R., & Chew, H. E. (2019). I’d blush if I could: closing gender divides in digital skills through education. UNESCO. https://unesdoc.unesco.org/ark:/48223/pf0000367416.page=1

Georgiev, D. (2022). 2022’s Voice Search Statistics – Is Voice Search Growing? Review42. https://review42.com/resources/voice-search-stats/

Jovanovic, B. (2022). 55 Fascinating AI Statistics and Trends for 2022. DataProt. https://dataprot.net/statistics/ai-statistics/

Specia, M. (2019). Siri and Alexa Reinforce Gender Bias, U.N. Finds. The New York Times. https://www.nytimes.com/2019/05/22/world/siri-alexa-ai-gender-bias.html

The European ARTificial Intelligence Lab is co-funded by the Creative Europe Programme of the European Union and the Austrian Federal Ministry for Arts, Culture, Civil Service and Sport.