Honorary Mention

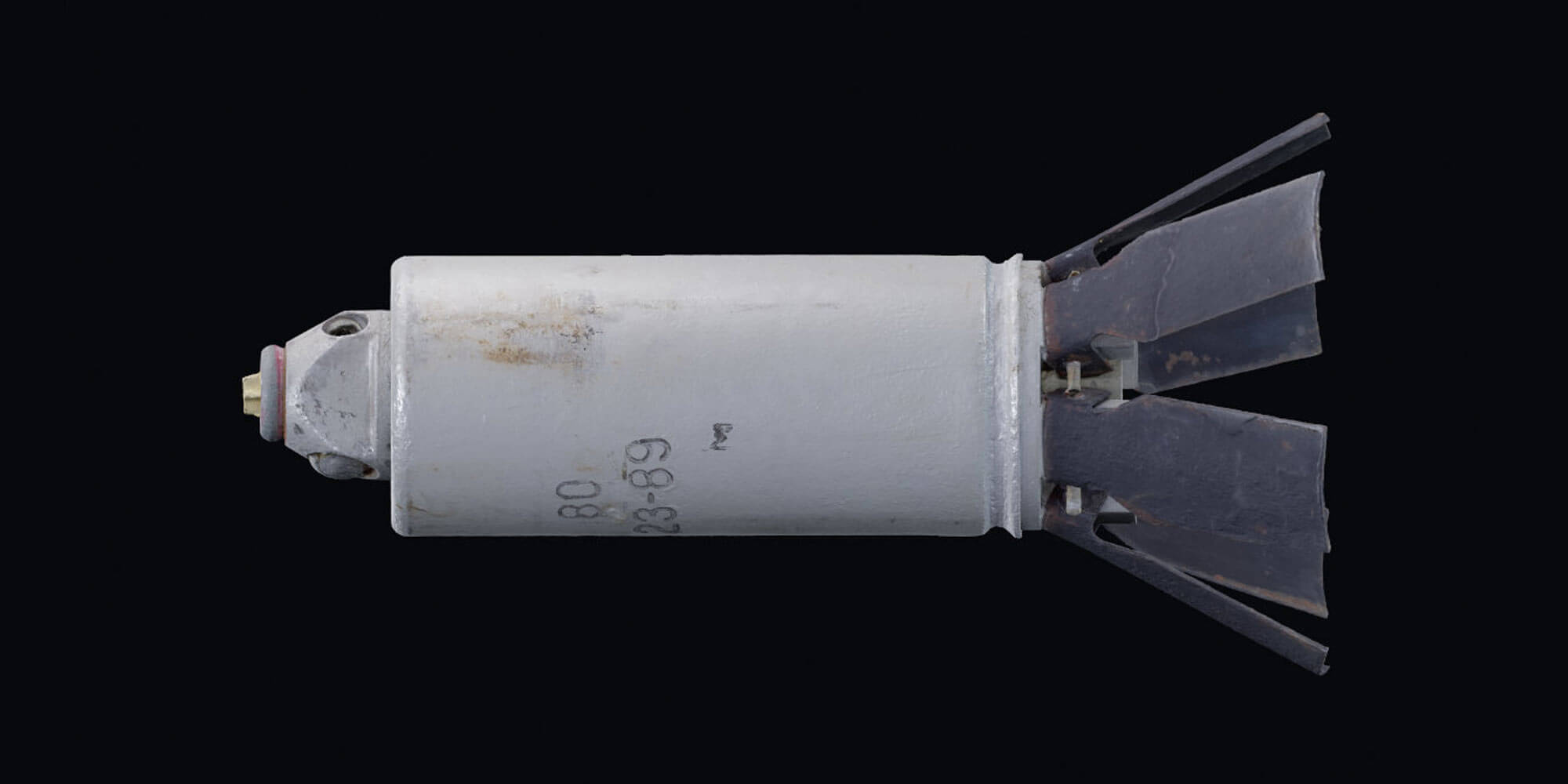

VFRAME is a computer vision project that develops open-source image processing software and neural network models for human rights research and conflict zone monitoring. Started in 2017 to bridge the gap between industry, commercially aligned AI, and the needs of investigative research, VFRAME is now a pioneer in developing and applying new techniques that combine 3D photogrammetry, 3D rendering, and 3D printing to generate synthetic data for training neural networks. Instead of following the industry trend of scraping data from online sources, an approach that can inherit problematic biases, VFRAME takes an artist-first approach that combines digital fabrication, sculpture, photography, and 3D artistry to create a virtually unlimited source of training data. The result is a set of powerful computer vision models that can automate the detection of illegal cluster munitions in million-scale video datasets from conflict zones.

Since the beginning, VFRAME has partnered with Mnemonic to apply this technology to its large archives. Our first successful pilot project, in 2022, detected over 1,000 videos containing the RBK-250 cluster munition bomb in Mnemonic's Syrian Archive, which holds over 3 million videos and approximately 10 billion image frames. Reviewing an archive of that size would otherwise be impossible. Last year also marked the beginning of a new collaboration with the NGO Tech 4 Tracing, which gives us direct access to real munitions for 3D scanning. This important step finally allows VFRAME to scale up development of more computer vision detection models for conflict zone monitoring, all of which are available as open source.

Credits

Director, founder, computer vision: Adam Harvey

3D design and emerging 3D technologies: Josh Evans

Information architecture and front-end development: Jules LaPlace

With support from: Prototype Fund (Bundesministerium für Bildung und Forschung); NLNet Foundation and Next Generation Internet (NGI0); NESTA; SIDA; Tech 4 Tracing