by Carla Gimeno Grauwinkel, Ana-Maria Carabelea

The last edition of the Ars Electronica Festival introduced us to the concept of a New Digital Deal. To be completely accurate, it was the leitmotiv of the whole festival. At the core of this concept was digitalization, and the idea that, while it might not be changing our world per se, it is radically changing how and what we can or must deal with in this world.

The fact that new deals are being called for everywhere these days speaks of a growing awareness of the inevitability of change. Having arrived in the third decade of the 21st century, it might just be the right moment to think about what a new deal in the digital context would mean. At a time when the discourse about digital transformation is louder (but also more confusing) than ever, rethinking the foundations of the digital world deserves the main stage in the different discussion spaces.

As Ars Electronica is a platform for those who see the future as the responsibility of our time and face it with social activation and empowerment, the festival hosted projects which focused on making different realities and identities visible. Inclusion, diversity and equality were recognized as fundamental aspects to be taken into consideration in the New Digital Deal. The exploration of the implications of digital transformation, including the importance of equity and inclusion in our digital infrastructures, also lies at the heart of the European Platform for Digital Humanism, a platform initiated by Ars Electronica in 2019 that currently connects over 80 partners across Europe who are exploring what is necessary to create fair, ethical and transparent approaches to a new digital deal.

Within that context, the European ARTificial Intelligence Lab, a Creative Europe project coordinated by Ars Electronica from 2018 to 2021, focused strongly on the legal, cultural, educational and ethical dimensions of Artificial Intelligence. This allowed for a holistic model that considers human values and elementary questions of what AI should or should not do, as well as how AI systems are developed, deployed, used and monitored, and by whom. Gathering the perspectives of 13 major cultural operators in Europe, the European ARTificial Intelligence Lab centered on visions, expectations and fears that we associate with the conception of a future, all-encompassing artificial intelligence. One of the critical challenges currently driving the discourse around AI technologies is the social and racial biases inherent in some of the most used datasets and AI models.

Equity and ethnic representation are surely among the most important and challenging sides of digital transformation. As humans create artificial intelligence and shape the different directions that digital transformation takes, only the diversity of the teams that make decisions and program the AI algorithms can ensure that these algorithms reflect as accurately as possible the diversity within society.

This could start with ending gender bias in the fields of Artificial Intelligence and data science, but it should not stop there. To offer a more plural, fair and representative New Digital Deal, it will be necessary to include other ethnic, geographic, educational and economic dimensions. The more people from different backgrounds and with different experiences and realities work on AI, the more the risk of bias will be reduced.

If the people behind the ideation, creation and programming of all these digital changes are, for example, middle-aged cis white men with a similar background, it is obvious that their realities will be reflected in their work and the likelihood of a more diverse representation will be reduced. Their own individual experiences, privileges and limitations will most certainly silence those of others.

That is why it is crucial to count on diverse and plural voices. More diverse AI development teams would be capable of addressing complex gender, ethnic and social issues before and during production.

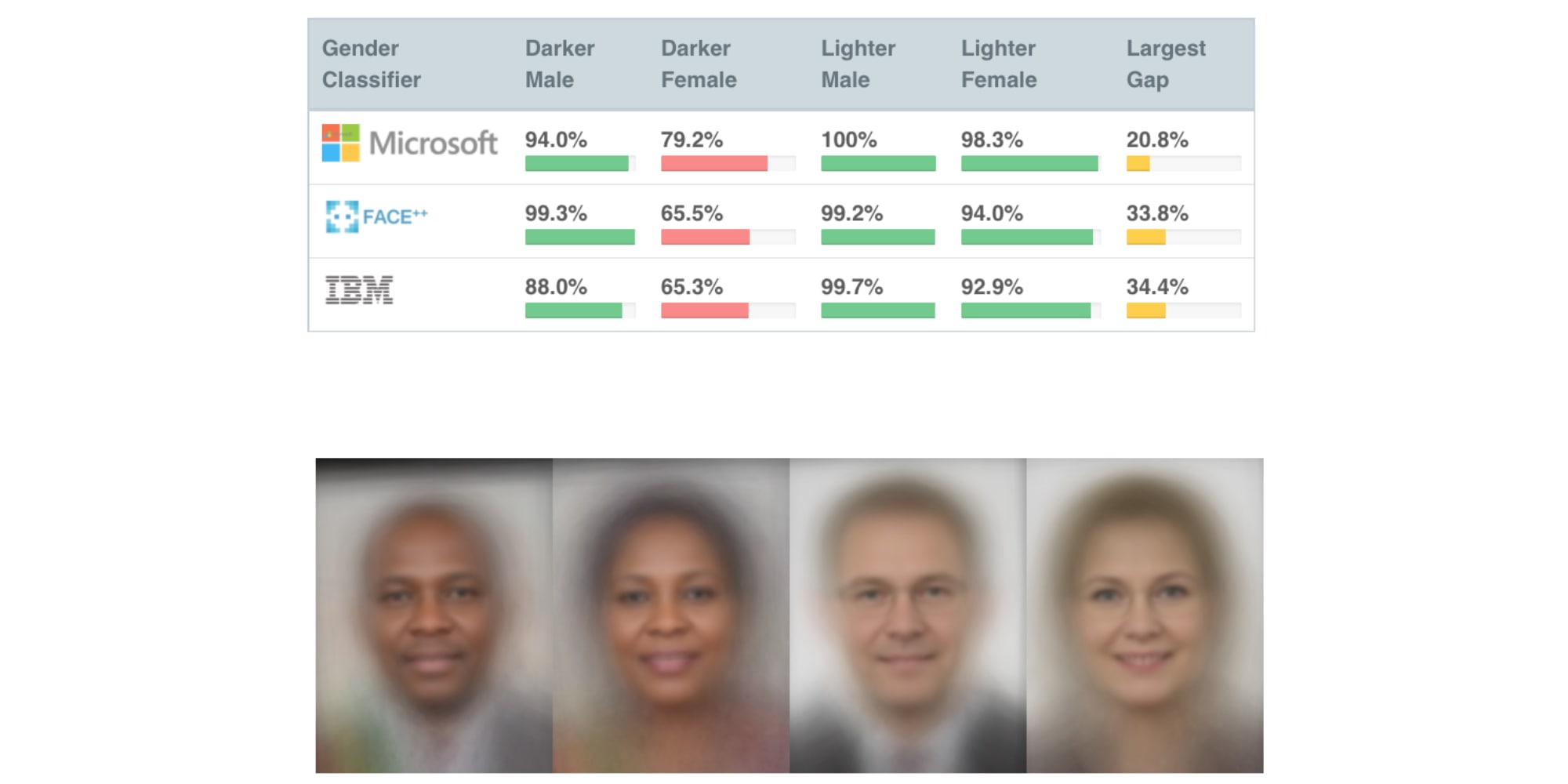

In this context, the AI Lab, within the framework of the Ars Electronica Festival 2019, hosted the Gender Shades project by Joy Buolamwini and Timnit Gebru, who investigated the bias of AI facial recognition software. Their study reveals that popular applications already in use display obvious discrimination based on gender or skin color.

Buolamwini is a computer scientist and digital activist based at the MIT Media Lab, and the founder of the Algorithmic Justice League, an organization that fights for racial justice through algorithms. Its raison d’être is the conviction that technology should serve us all, not just the privileged few. “AI’s potential must be tempered with the acknowledgement that AI magnifies the flaws of its makers”. She experienced this firsthand when, as a graduate student at MIT in 2015, she discovered that some facial analysis software couldn’t detect her dark-skinned face until she put on a white mask. As she noted in her successful TED Talk, “it’s one thing to have Facebook confuse people when analyzing a photo, but another when law enforcement or a potential employer is using such software”.

Just as Buolamwini took her project forward as a response to gender and skin-type bias in commercial artificial intelligence systems, other artistic explorations have been born with the purpose of giving visibility to groups and identities that are not sufficiently represented in the digital environment. Some of these projects are part of the European ARTificial Intelligence Lab Journeys, a format developed by Ars Electronica in the wake of the pandemic in which artists take the viewers on filmic excursions into their artistic practice.

An archive of Black existence

Black Movement Library is a library for activists, performers and artists to create diverse XR projects; a space to research how and why we move, and an archive of Black existence. This journey, created by multidisciplinary artist and educator LaJuné McMillian (US), explores issues of cultural representation and exploitation through readings and discussions, while providing an introduction to motion capture, rigging and 3D environments.

In recent years, access to motion capture data, 3D base models and “make-an-animation-of-yourself” software has increased like never before. Making and completing polished projects with these tools has become easier and more achievable for everyone. But despite these advances and their accessibility, such systems still lack the tools to create the diverse characters and movements that systems claiming neutrality leave unexplored. Systemic injustices are perpetuated in the digital world.

For instance, in 2018 lawsuits were filed against Epic Games, the maker of Fortnite, for using dances from mainly Black creators without permission, compensation or credit. The “Milly Rock” became “Swipe it” and the “Carlton” became “Fresh”, effectively erasing the origins of these dances. Those lawsuits were put on hold because the dances were not considered choreography under copyright law.

Core elements of the journey integrate performance, extended reality, and physical computing to question access, control and representation. In LaJuné’s own words, Black Movement Library is, in itself, a Movement: “It celebrates Black History and Black Culture and it holds us accountable in the ways we deal with black people: our movements, our bodies and our lives”.

How do you see yourself when the world refuses to reflect you?

Danielle Brathwaite-Shirley (UK) is an artist working predominantly in animation, sound, performance and video games to communicate the experiences of being a Black Trans person. Their journey, ‘Accessing what you always knew you needed’, makes us reflect on identity, visibility and representation. In their own words: “the past has taught us that history will erase us as long as it pretends to remember us. So now it is our turn. Those of us who have to be remembered so that those of the future know they have a lineage. Black trans people are not new. In fact, we are as old as time but the whispers buried us. It is time to take responsibility for keeping in our memory those who are in the present”.

“Throughout history, Black queer and Trans people have been erased from the archives. Because of this it is necessary not only to archive their existence, but to record the many creative narratives they have used and continue to use to share their experiences”. Brathwaite-Shirley’s main aim is to bring to the forefront the experiences of Black trans women and, more generally, to archive Black trans experience. It’s a call to reflect on the importance of having a history and references and how erasing the past can be very harmful in the present. Their work refuses to let viewers be passive. It’s uncomfortable, critical, reflective, real.

When drag meets Machine Learning

This next project explores what AI can teach us about drag, and what drag can teach us about AI. The Zizi Show (2019-ongoing) by Jake Elwes (UK) constructs and then deconstructs a virtual cabaret that pushes the limits of what can be imagined on a digital stage. Drag Queens, Drag Kings, Drag Things and AI… This virtual online stage features acts constructed using deepfake technology, which has learned how to do drag by watching a diverse group of human performers. It dissects one of the dominant myths about AI: the notion that “an AI” is a thing we might mistake for a person.

The bodies we see in the show have been generated by neural networks trained on footage of a community of drag artists, who were filmed at a London cabaret venue closed during COVID-19 to create the training datasets. During each act, audiences are invited to interact with the website and choose which deepfake bodies perform which songs.

Sources:

Joy Buolamwini: “AI Face Recognition May Amplify Inequality” in Doha Debates (2020). https://dohadebates.com/video/joybuolamwini-ai-face-recognition-may-amplify-inequality/

Joy Buolamwini: “A MIT researcher who analyzed facial recognition software found eliminating bias in AI is a matter of priorities” in INSIDER (2019). https://www.businessinsider.com/biases-ethics-facial-recognition-ai-mit-joy-buolamwini-2019-1

LaJuné McMillian: “Art and Code Homemade” in Open Transcripts (2021). http://opentranscripts.org/transcript/art-code-homemade-lajune-mcmillian/

The European ARTificial Intelligence Lab is co-funded by the Creative Europe Programme of the European Union and the Austrian Federal Ministry for Arts, Culture, Civil Service and Sport.

Carla Gimeno Grauwinkel is currently doing an internship at Ars Electronica as part of the Spanish Ministry for Culture’s CULTUREX grant programme. She studied Journalism at the Autonomous University of Barcelona with a special focus on cultural and social communication, and has a Master’s degree in Management of Cultural Institutions and Companies.

Ana-Maria Carabelea joined Ars Electronica in 2021 as the Communication Manager for the Regional STARTS Centers. She has previously conceptualised and curated programmes within the cultural and creative industries and is currently researching the interrelation between tech brands, digital artists and audiences as part of an MA in Brands, Communication and Culture at Goldsmiths, University of London.