MusicLM is a machine learning model that generates high-quality music and sounds from text descriptions.

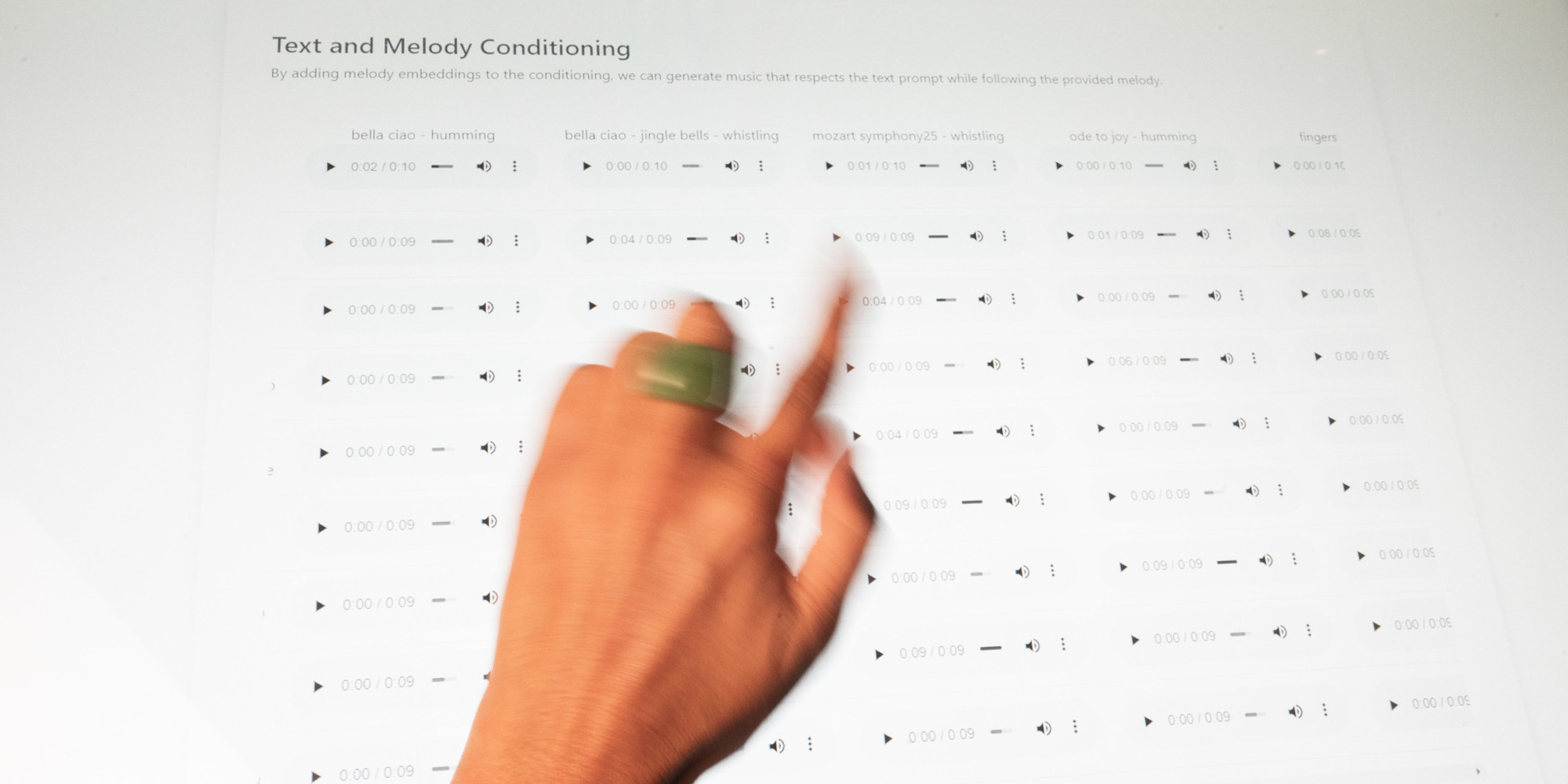

MusicLM outperforms previous systems both in audio quality and in how closely the output follows the text description. It can also take a melody as input and transform it to match the style described in the text. To support further research, the authors have released MusicCaps, a dataset of 5.5k music-text pairs with detailed descriptions. The project addresses two challenges: the difficulty of audio synthesis and the scarcity of paired audio-text data. MusicLM uses a multi-stage modeling approach and is also trained on a large dataset of unlabeled music, which lets it generate long, coherent pieces. The comparison with existing systems rests on both quantitative metrics and qualitative evaluation.