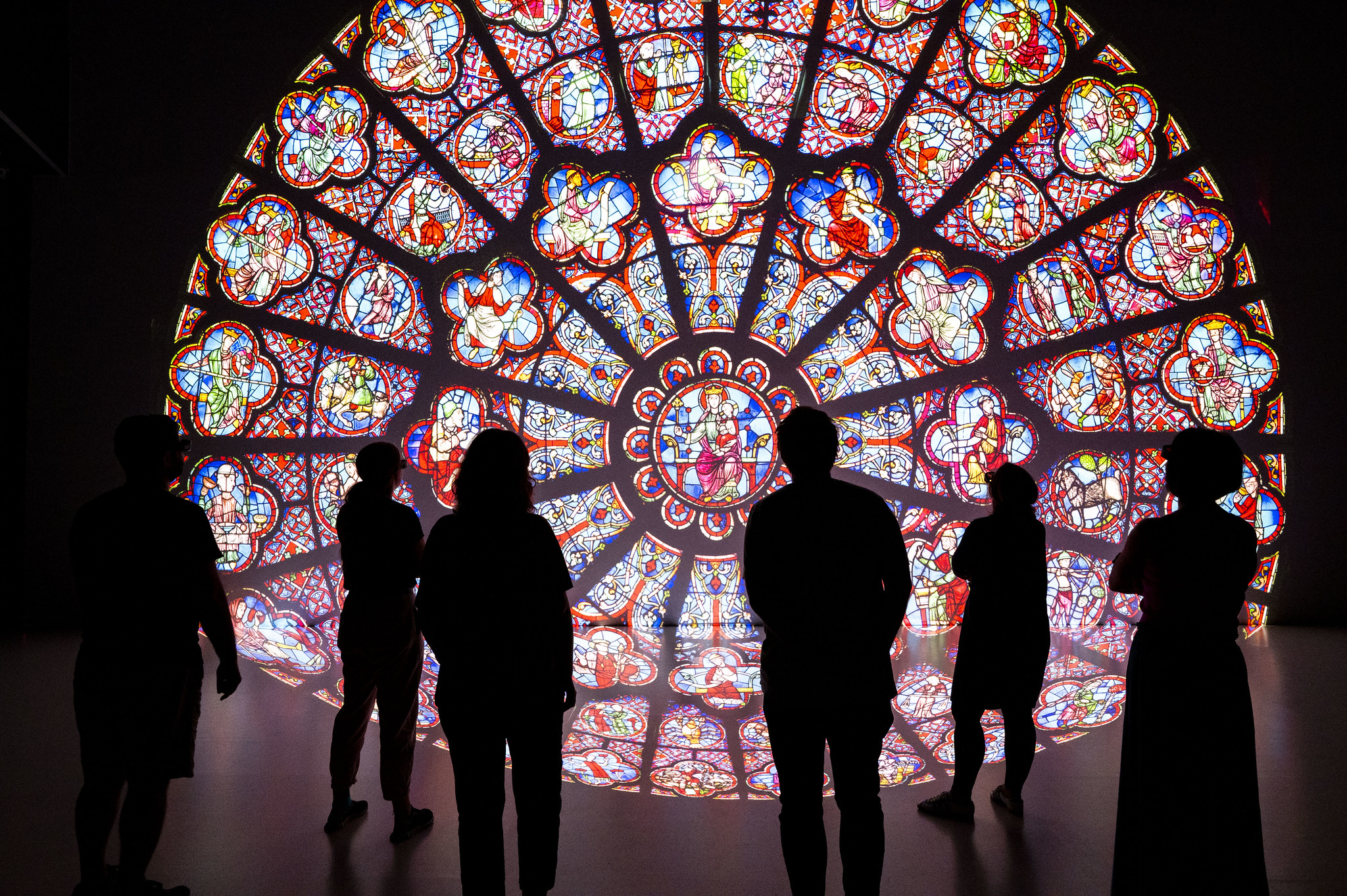

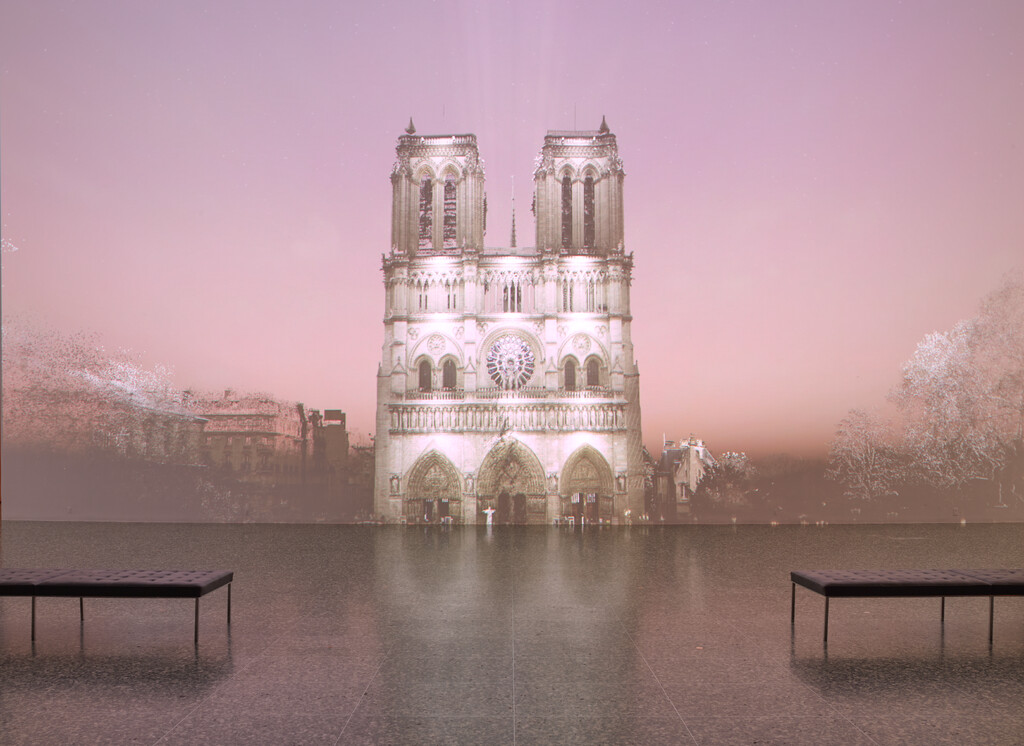

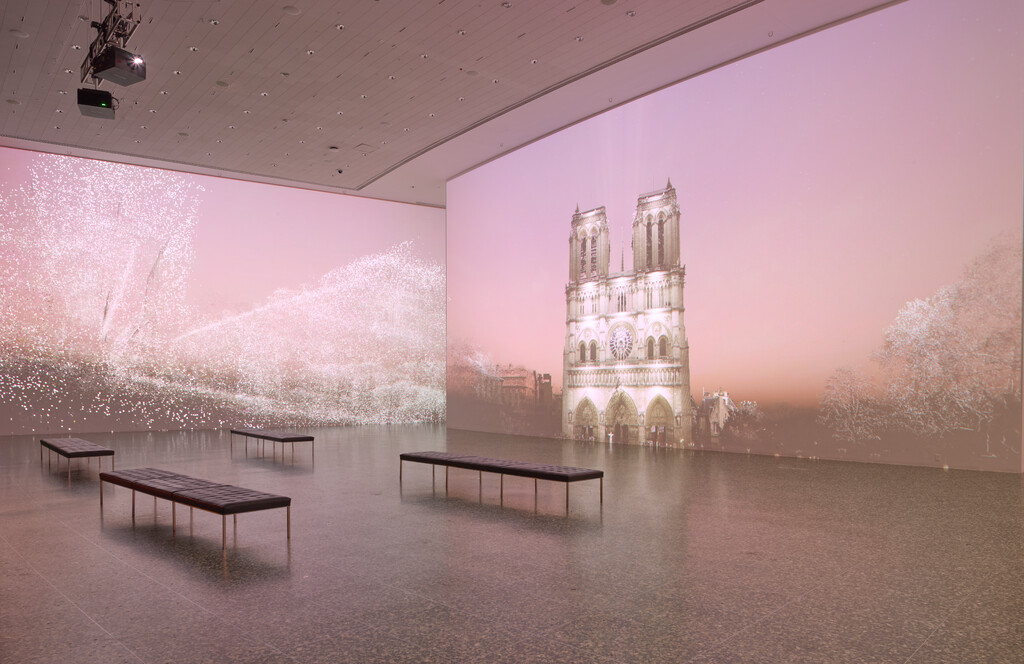

Visitors to Notre-Dame Immersive can explore a huge model of the world-famous Paris cathedral and discover its special features in stereoscopic 3D. The immersive journey through Deep Space 8K at the Ars Electronica Center takes in the cathedral’s impressive architecture, historical representations, and larger-than-life details of its elaborate stained-glass windows.

In April 2019, Notre-Dame cathedral in Paris was severely damaged by a devastating fire, which required years of reconstruction and restoration work. To mark its reopening in December 2024, the Ars Electronica Futurelab, together with the French start-ups Iconem and Histovery, brought the cathedral into Deep Space 8K as an immersive experience.

The construction of Notre-Dame de Paris was just as monumental as the events that made the cathedral a place for the history books. After nearly 200 years of construction, it was completed in 1345; it later became the scene of Napoleon Bonaparte’s coronation as emperor and was immortalized in Victor Hugo’s novel “The Hunchback of Notre-Dame”. On the night of April 15, 2019, a major fire devastated the cathedral, destroying its spire and much of its roof, and over 600 firefighters were deployed to save Notre-Dame. Almost 1,000 people worked on the reconstruction, which was financed by nearly one billion euros in donations. Notre-Dame Immersive in Deep Space 8K makes this extensive restoration work visible, along with the construction of the cathedral and its architectural features.

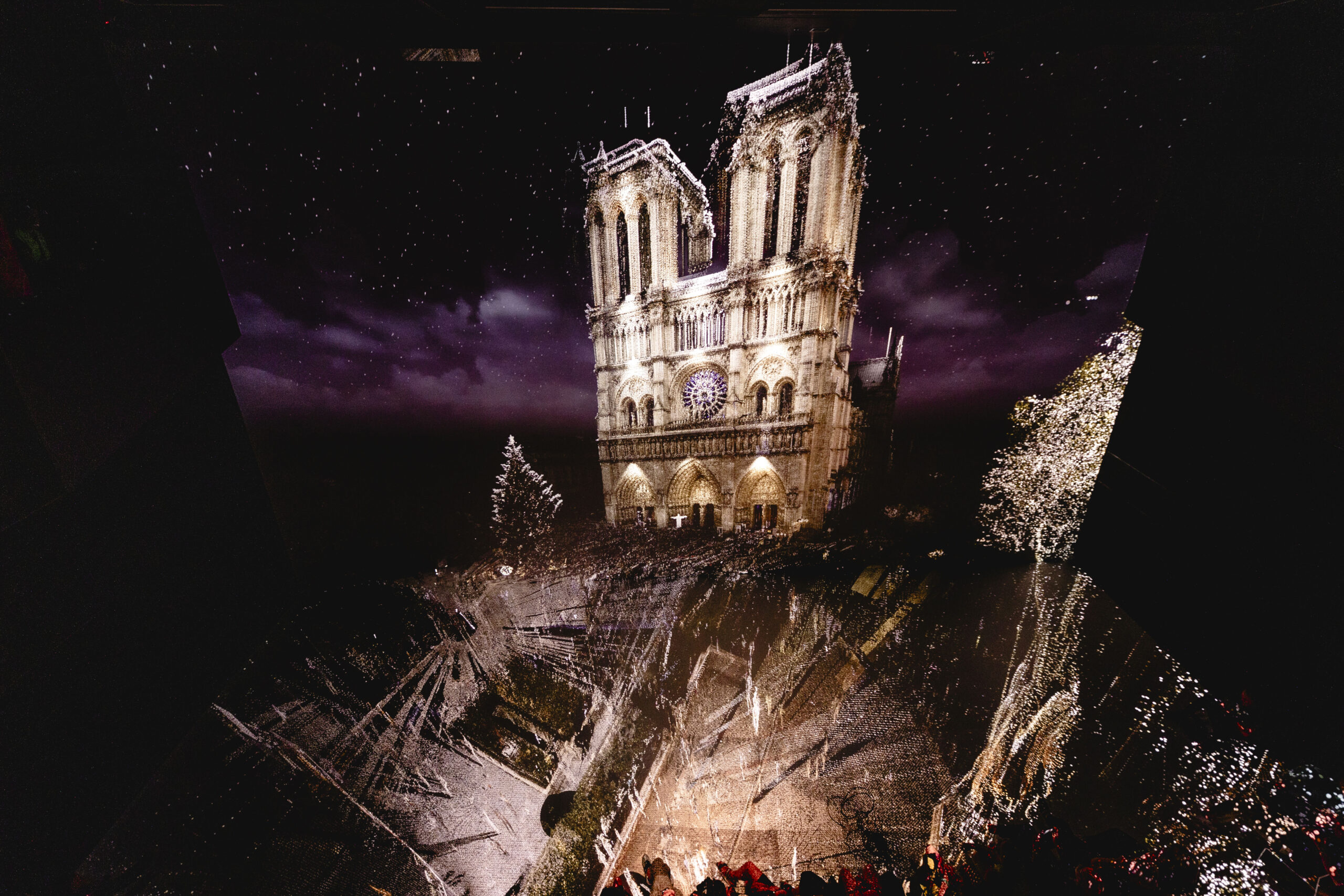

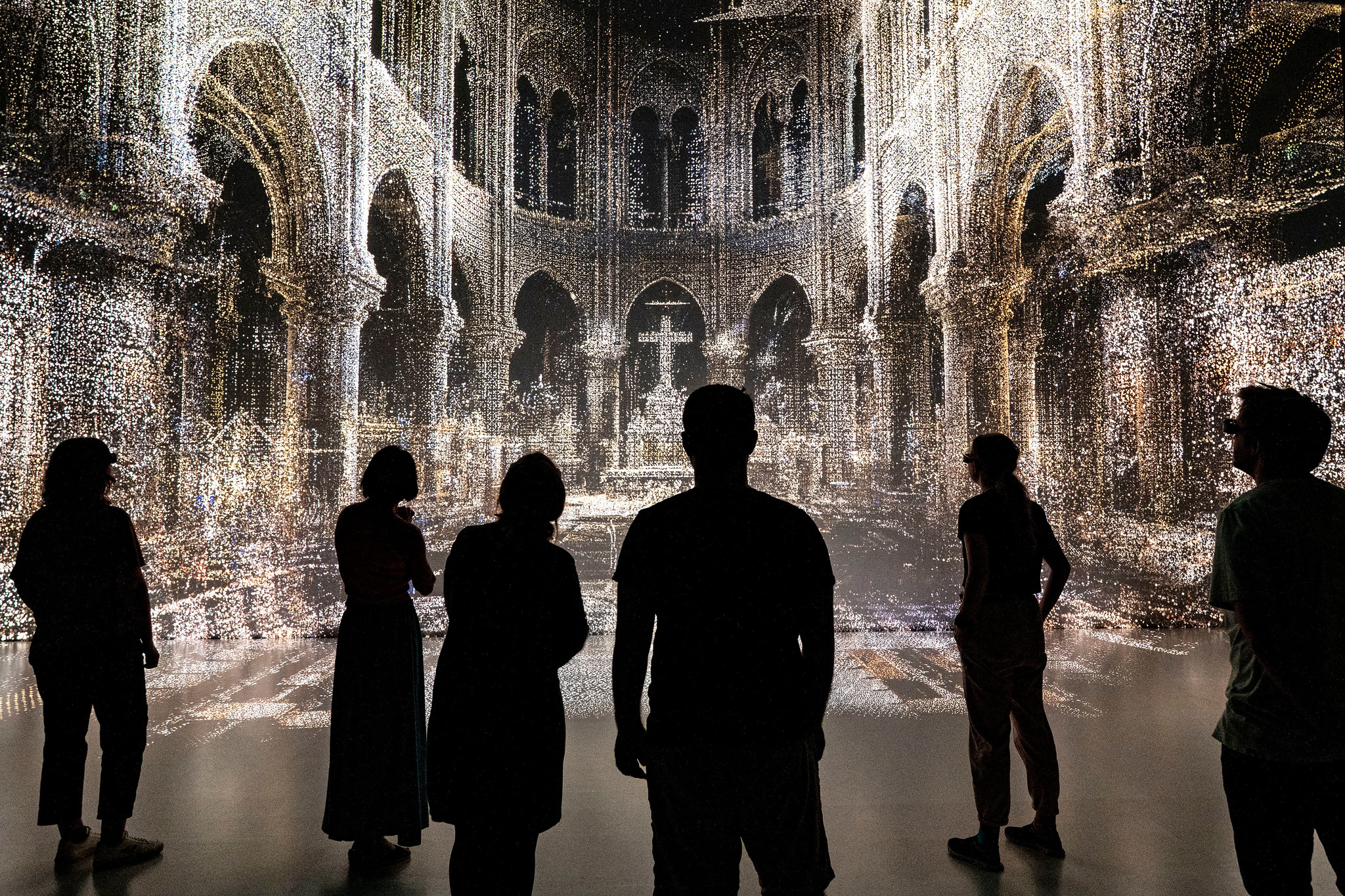

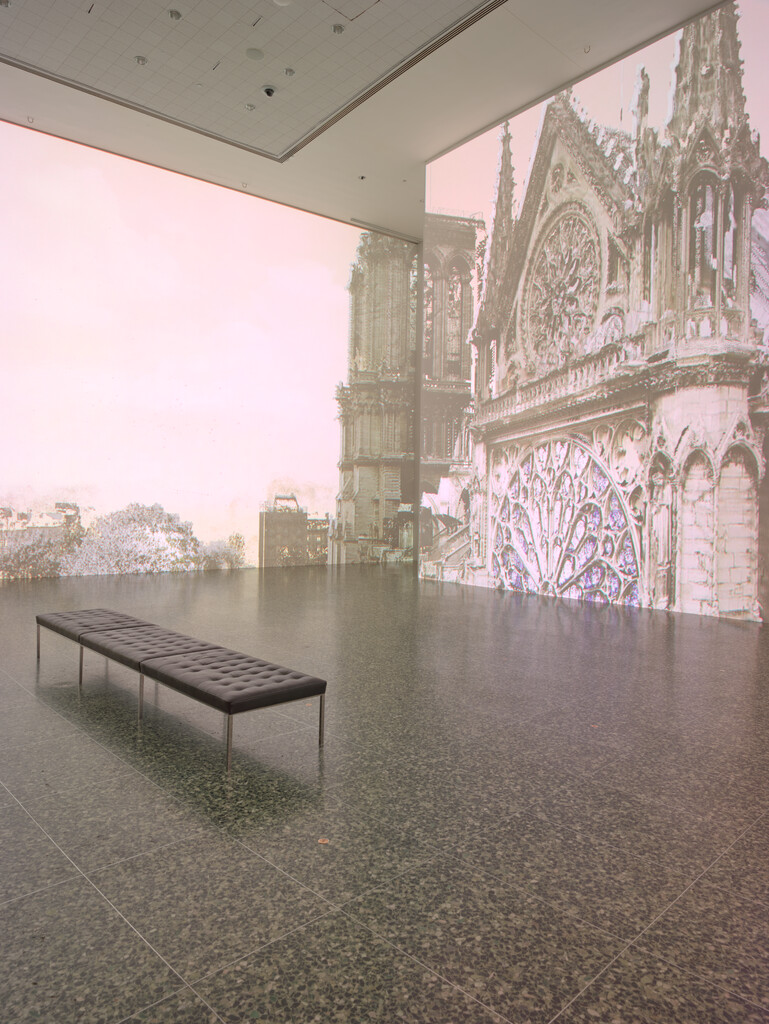

The digital architecture of Notre-Dame Immersive is based on data from the renowned art historian Andrew Tallon, who created a detailed point cloud of the cathedral from laser scans in 2010. After Tallon’s death, the data passed to the French start-up Iconem, whose team completed the data set using modern technology. This point cloud is the only detailed digitization of the cathedral made before the 2019 fire and is therefore the central component of the digital processing for Deep Space 8K.

Detailed deep dives into history

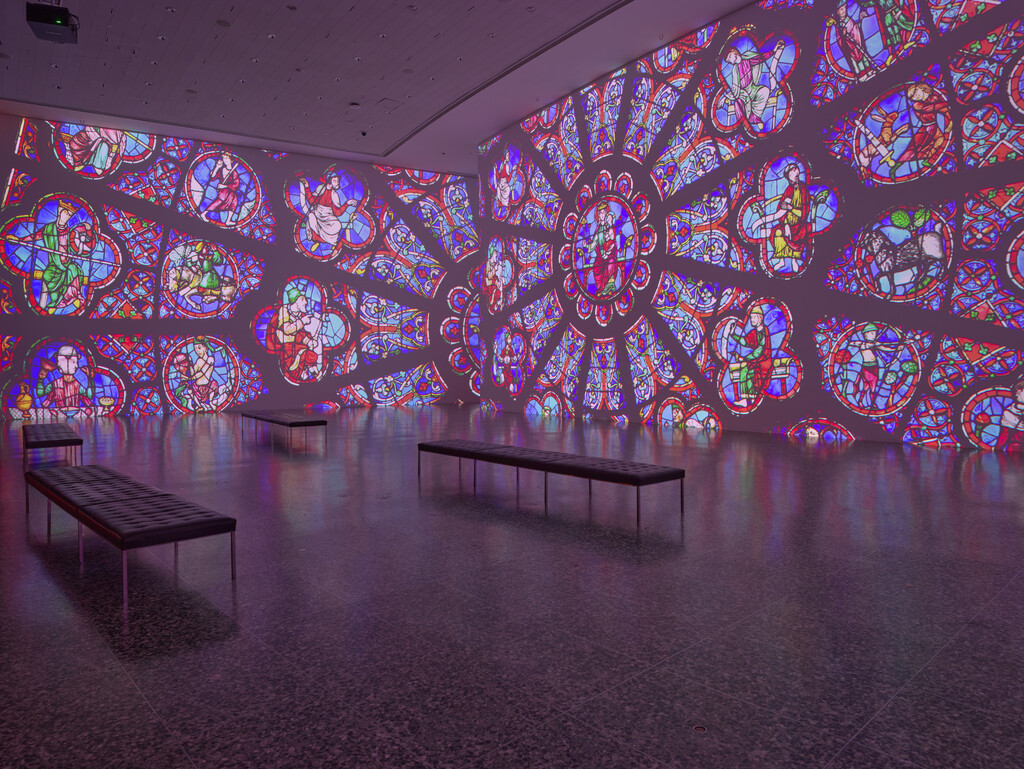

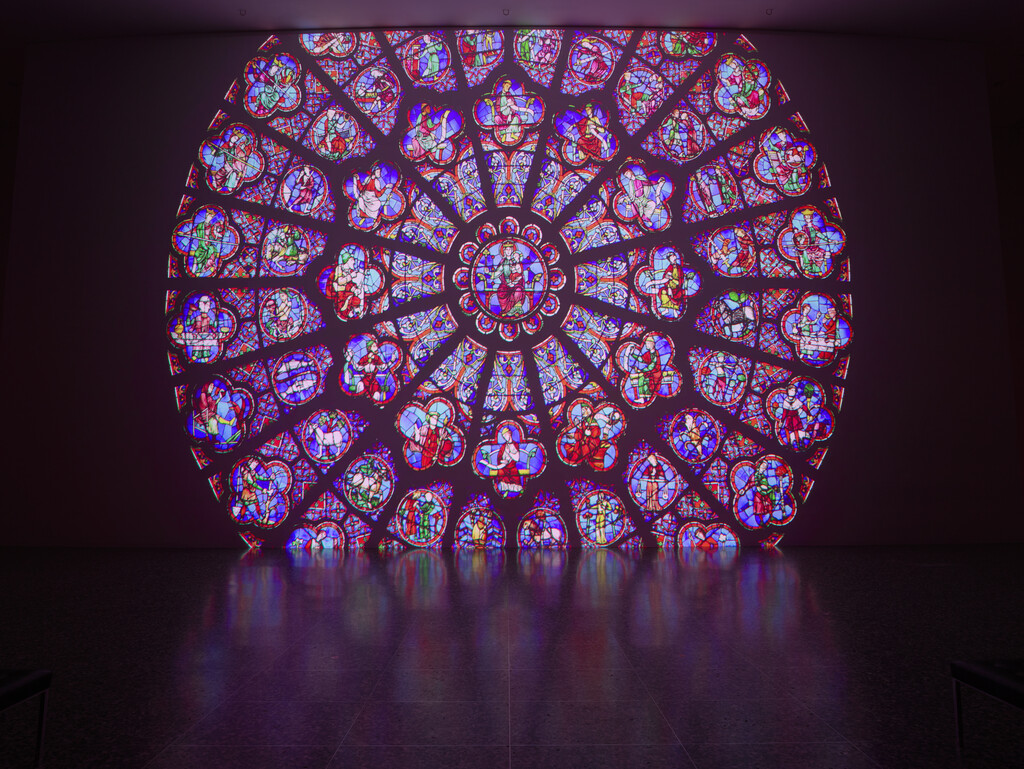

The point cloud contains over a billion points, most of which depict the interior of the cathedral in detail. To display this massive amount of data in Deep Space 8K, the point cloud was divided into sections that the Deep Space infotrainers can explore. In some places there is additional content to discover, such as 360-degree panoramas, 3D models, and a high-resolution image of the rose window on the west façade. This content was provided by the start-up Histovery, which also supplied a 3D model of the cathedral during the fire. Animated in 3D by the Futurelab, this model lets visitors to Deep Space 8K experience the full extent of the fire at Notre-Dame.
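The article does not say how the sections were defined. As an illustration only, one common way to split a very large point cloud into explorable pieces is a uniform 3D grid: each point goes into the cube that contains it, and only the cubes near the current viewpoint need to be loaded. The sketch below assumes that approach; `partition_points`, `sections_near`, and the cell size are hypothetical and not taken from the Futurelab’s pipeline.

```python
import math
from collections import defaultdict

Point = tuple[float, float, float]
CellKey = tuple[int, int, int]


def partition_points(points: list[Point], cell_size: float) -> dict[CellKey, list[Point]]:
    """Bucket points into cubic grid cells with edge length `cell_size` (hypothetical scheme)."""
    sections = defaultdict(list)
    for p in points:
        # floor() keeps negative coordinates in the correct cell (e.g. -0.2 -> cell -1)
        key = (math.floor(p[0] / cell_size),
               math.floor(p[1] / cell_size),
               math.floor(p[2] / cell_size))
        sections[key].append(p)
    return dict(sections)


def sections_near(sections: dict[CellKey, list[Point]], cell_size: float,
                  camera: Point, radius: float) -> list[CellKey]:
    """Keys of cells whose centre lies within `radius` of the camera,
    i.e. the sections worth keeping loaded for the current viewpoint."""
    near = []
    for key in sections:
        centre = tuple((k + 0.5) * cell_size for k in key)
        if math.dist(centre, camera) <= radius:
            near.append(key)
    return sorted(near)
```

A real system would more likely use an adaptive structure such as an octree, which subdivides dense areas like the nave more finely than sparse ones; the uniform grid just keeps the idea visible.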

The panoramas, images, and 3D models are embedded at their original locations inside and outside Notre-Dame as so-called “points of interest”. These appear as sparkling spheres within the point cloud of the cathedral and, when approached, offer insights into the building’s history in the 12th century and the restoration work that followed the devastating fire.
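Points of interest that reveal their content “when approached” are typically driven by a distance check against the camera. The sketch below is a hypothetical illustration, not the project’s code: `PointOfInterest`, `update_pois`, and both radii are assumptions. It uses two radii (hysteresis) so that a sphere does not flicker on and off when the camera hovers right at its boundary.

```python
import math
from dataclasses import dataclass


@dataclass
class PointOfInterest:
    name: str
    position: tuple[float, float, float]
    active: bool = False


def update_pois(pois: list[PointOfInterest], camera: tuple[float, float, float],
                enter_radius: float = 5.0, exit_radius: float = 7.0) -> list[str]:
    """Activate a POI when the camera comes within `enter_radius`; deactivate it
    only once the camera is farther away than `exit_radius`.
    Returns the names of POIs whose state changed on this update."""
    if exit_radius < enter_radius:
        raise ValueError("exit_radius must be at least enter_radius")
    changed = []
    for poi in pois:
        distance = math.dist(poi.position, camera)
        if not poi.active and distance <= enter_radius:
            poi.active = True
            changed.append(poi.name)
        elif poi.active and distance > exit_radius:
            poi.active = False
            changed.append(poi.name)
    return changed
```

For a hypothetical POI at the origin, moving the camera to 4 m activates it, moving to 6 m leaves it active (still inside the exit radius), and moving to 8 m deactivates it.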

Presenters steer the project interactively with a controller, which lets them move freely between the points of interest and respond directly to the audience’s interests and questions. Notre-Dame Immersive thus also highlights the importance of new technologies in preserving and communicating humanity’s cultural heritage.

The technology behind Notre-Dame Immersive

Because Andrew Tallon’s point cloud consists of over a billion points, rendering it both in real time for Deep Space 8K and as a video for the Museum of Fine Arts in Houston, USA, posed several challenges. For real-time rendering, the Futurelab had to carefully balance image quality against frame rate. This balance had to be adjusted dynamically, because the density of the point cloud in view changes with the viewer’s virtual location. Whenever the number of rendered points was reduced to keep the frame rate up, the gaps between the points became more noticeable, letting black background show through. Once these gaps reached a certain size, the image began to flicker as the camera moved. To address this, the team dynamically increased the rendered size of each point, keeping the surfaces visually continuous and avoiding the flicker caused by the negative space.
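The trade-off described above can be sketched in a few lines. This is an assumption-laden illustration, not the Futurelab’s renderer: it assumes a fixed per-frame point budget, and it assumes points sample surfaces, so that on screen they behave like a 2D density. Keeping a fraction f of the points then widens the average spacing by roughly 1/√f, and growing each point by the same factor closes the gaps. The functions and the per-point random ranks are hypothetical.

```python
import math
import random


def lod_settings(visible_points: int, point_budget: int,
                 base_size: float, max_size: float) -> tuple[float, float]:
    """Return (keep_fraction, point_size) for the current frame.
    Spacing grows ~1/sqrt(keep) for surface samples, so point size grows by the same factor."""
    if visible_points <= point_budget:
        return 1.0, base_size
    keep = point_budget / visible_points
    return keep, min(base_size / math.sqrt(keep), max_size)


def assign_priorities(n_points: int, seed: int = 0) -> list[float]:
    """Give every point a fixed random rank in [0, 1) once, at load time."""
    rng = random.Random(seed)
    return [rng.random() for _ in range(n_points)]


def select_points(priorities: list[float], keep: float) -> list[int]:
    """Indices of points whose rank is below the keep fraction. Because ranks are fixed,
    lowering `keep` only removes points and never reshuffles the survivors."""
    return [i for i, p in enumerate(priorities) if p < keep]
```

For example, with 4 million points in view and a budget of 1 million, `lod_settings(4_000_000, 1_000_000, 1.0, 4.0)` keeps a quarter of the points and doubles their size. Fixed ranks are one way to keep the surviving subset stable from frame to frame, which matters because points that pop in and out as the budget changes would themselves read as flicker.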

For the video version, the problem was even harder to manage, because it only became apparent once a sequence had been rendered at final output quality. Contrary to expectations, even high-end PCs struggled to display all the points at once during rendering. To overcome this, the team meticulously fine-tuned all point cloud settings for each camera perspective to achieve the best possible result.
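One way to organize settings that are tuned per camera perspective is a set of defaults plus small per-shot overrides, so that each shot records only what differs. The sketch below is hypothetical: the setting names, default values, and shot names are illustrative and do not come from the project.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PointCloudSettings:
    point_budget: int = 20_000_000   # hypothetical default values
    point_size: float = 1.0
    max_point_size: float = 4.0


DEFAULTS = PointCloudSettings()

# Hypothetical per-shot overrides, adjusted after test renders at final quality.
SHOT_OVERRIDES: dict[str, dict] = {
    "nave_flythrough": {"point_budget": 40_000_000},
    "rose_window_closeup": {"point_size": 0.6, "max_point_size": 2.0},
}


def settings_for(shot: str) -> PointCloudSettings:
    """Defaults plus whatever this shot overrides; unknown shots use the defaults."""
    return replace(DEFAULTS, **SHOT_OVERRIDES.get(shot, {}))
```

Keeping overrides separate from the defaults makes it easy to see which shots needed special treatment after a final-quality render revealed gaps.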

Immersive video version in Houston

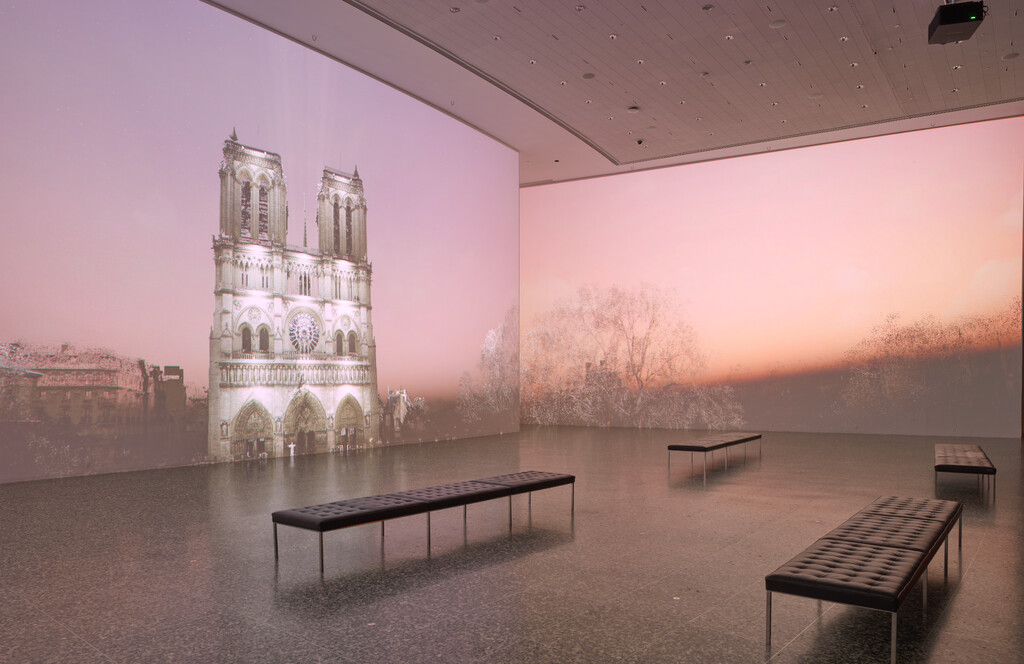

On November 23, 2024, Notre-Dame Cathedral: An Immersive Experience premiered in Cullinan Hall at the Museum of Fine Arts in Houston, USA. To fill the three monumental walls, which are 18.3 to 19.3 meters wide and 9.15 meters high, the interactive stereoscopic 3D version for Deep Space 8K was converted into an animated 14-minute presentation in 2D.
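The wall dimensions determine the shape of the frame each projection has to fill. A quick calculation from the figures above (the helper names are only for illustration):

```python
HEIGHT_M = 9.15
MIN_WIDTH_M, MAX_WIDTH_M = 18.3, 19.3


def aspect_ratio(width_m: float, height_m: float) -> float:
    """Width divided by height."""
    return width_m / height_m


def total_area_range(n_walls: int, min_w: float, max_w: float, h: float) -> tuple[float, float]:
    """Bounds on the combined projection area when every wall is between min_w and max_w wide."""
    return n_walls * min_w * h, n_walls * max_w * h
```

Each wall comes out at roughly 2.0:1 to 2.11:1, noticeably wider than a 16:9 (about 1.78:1) video frame, and the three walls together offer between about 502 and 530 square meters of projection surface.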

Because the projection in Houston runs without subtitles or a voiceover, and without the live presenters of Deep Space 8K, it offers an opportunity to approach the project from a different perspective. As the projection runs on a loop in the exhibition, visitors must be able to join at any point without feeling that they have missed something or lack the context to understand it. The piece was therefore designed as a slow-paced, atmospheric artwork that makes use of the vastness of Cullinan Hall. By filling the space with soft light from the projections, it aims to evoke the awe one might feel standing inside the real Notre-Dame.

To adapt the work to this different environment, the Futurelab developed a specialized nDisplay setup that maps the virtual world into Cullinan Hall. nDisplay is a system in Unreal Engine that enables synchronized rendering across multiple displays for immersive environments such as virtual production, simulators, or large-scale visualizations. In simple terms, an nDisplay setup works like a set of windows with a camera positioned at a predefined viewpoint. Everything the camera sees through these “windows” is rendered, which allows for an asymmetrical field of view and creates an immersive perspective from a specific sweet spot.
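The “window” idea corresponds to an off-axis (asymmetric) perspective projection: for a flat screen and a fixed eye position, the view frustum is the pyramid from the eye through the screen’s corners, and it is symmetric only when the eye sits exactly in front of the screen’s center. The sketch below computes the frustum extents following Robert Kooima’s “generalized perspective projection”. It illustrates the underlying geometry and is not nDisplay’s API or the Futurelab’s configuration; all names are hypothetical.

```python
import math

Vec = tuple[float, float, float]


def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def off_axis_frustum(pa: Vec, pb: Vec, pc: Vec, eye: Vec,
                     near: float) -> tuple[float, float, float, float]:
    """Frustum extents (left, right, bottom, top) on the near plane for a flat screen
    with lower-left corner pa, lower-right pb and upper-left pc, seen from `eye`."""
    vr = normalize(sub(pb, pa))      # screen's right axis
    vu = normalize(sub(pc, pa))      # screen's up axis
    vn = normalize(cross(vr, vu))    # screen normal, pointing towards the viewer
    va, vb, vc = sub(pa, eye), sub(pb, eye), sub(pc, eye)
    d = -dot(va, vn)                 # distance from the eye to the screen plane
    if d <= 0:
        raise ValueError("the eye must be in front of the screen")
    scale = near / d                 # project screen-edge offsets onto the near plane
    return (dot(vr, va) * scale, dot(vr, vb) * scale,
            dot(vu, va) * scale, dot(vu, vc) * scale)
```

For a 2 × 2 m screen centered on the origin, an eye 1 m in front of its center yields the symmetric extents (-1, 1, -1, 1) at a near distance of 1 m. Moving the eye 0.5 m to the right yields (-1.5, 0.5, -1, 1), an asymmetric frustum. With three walls, each wall would get its own frustum computed from the same sweet spot.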

While the audience is viewing the interior of the cathedral, all three walls can be filled with the available data. Outside the cathedral, however, the point cloud does not extend beyond the nearest house fronts, which leaves the projections looking empty. Fortunately, the team was able to use various images of the area surrounding Notre-Dame and apply techniques such as structure from motion and Gaussian splatting to reconstruct the surrounding environment. The Futurelab then converted the resulting data into a structure that Unreal Engine’s particle system can use and adjusted several parameters so that the reconstructed environment matches the look of the point cloud.
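The article does not describe the conversion itself. As a hedged sketch, assuming the splats follow the conventions of the original 3D Gaussian Splatting reference implementation (view-independent color stored as a zeroth-order spherical-harmonic coefficient, opacity stored before a sigmoid, per-axis scale stored as a logarithm), each Gaussian can be reduced to a single colored point that a particle system could then display. The dataclasses, the opacity threshold, and the choice of the largest axis as point size are assumptions; the actual import into Unreal Engine is not shown.

```python
import math
from dataclasses import dataclass

SH_C0 = 0.28209479177387814  # zeroth-order spherical-harmonic constant, 1 / (2 * sqrt(pi))


@dataclass
class Splat:
    position: tuple[float, float, float]
    f_dc: tuple[float, float, float]       # DC (view-independent) color coefficients
    opacity_logit: float                   # opacity before the sigmoid
    log_scale: tuple[float, float, float]  # per-axis scale, stored as log


@dataclass
class ColoredPoint:
    position: tuple[float, float, float]
    rgb: tuple[float, float, float]
    size: float


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def splats_to_points(splats: list[Splat], min_opacity: float = 0.1) -> list[ColoredPoint]:
    points = []
    for s in splats:
        if sigmoid(s.opacity_logit) < min_opacity:
            continue  # drop near-transparent splats, which would read as noise
        # DC coefficient -> RGB in [0, 1], clamped
        rgb = tuple(min(max(0.5 + SH_C0 * c, 0.0), 1.0) for c in s.f_dc)
        size = math.exp(max(s.log_scale))  # largest axis as an approximate point size
        points.append(ColoredPoint(s.position, rgb, size))
    return points
```

Discarding the view-dependent color terms and the Gaussians’ orientation is deliberate here: the goal is not a faithful splat render but points that visually match the laser-scanned point cloud.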

Celebrating cultural heritage at Ars Electronica

Ars Electronica launched its commitment to cultural heritage shortly after the opening of Deep Space in 2009 with a gigapixel image of “The Last Supper” (data by Haltadefinizione) and a 3D point cloud of Pompeii (created by CyArk). For the first time, a wide audience could immerse itself in world cultural heritage in stereoscopic 3D and at larger-than-life size in the Deep Space of the Ars Electronica Center. Numerous unique three-dimensional explorations followed, for example of ancient Rome, Venice from its canals to the Doge’s Palace, the Great Pyramid of Giza, the more than 100-year-old nativity scene in Linz’s New Cathedral, and a virtual reconstruction of the Linz synagogue destroyed by the Nazis. In addition, gigapixel images have since made it possible to zoom into world-famous paintings like nowhere else, from works in the Sistine Chapel to paintings by da Vinci, Picasso, Caravaggio, Botticelli, Goya, Klimt, Schiele, Van Eyck, Bruegel the Elder, and many more.

Credits

Ars Electronica Futurelab: Roland Haring, Raphael Schaumburg-Lippe

Ars Electronica: Melinda File, Michaela Wimplinger

Partners: Iconem, Histovery

Notre-Dame Immersive is supported by the Austrian Foreign Ministry and the Institut Français d’Autriche as part of the strategy for the international dissemination of cultural and creative industries. Additional funding is provided by the Dorotheum and the State of Upper Austria (Culture).