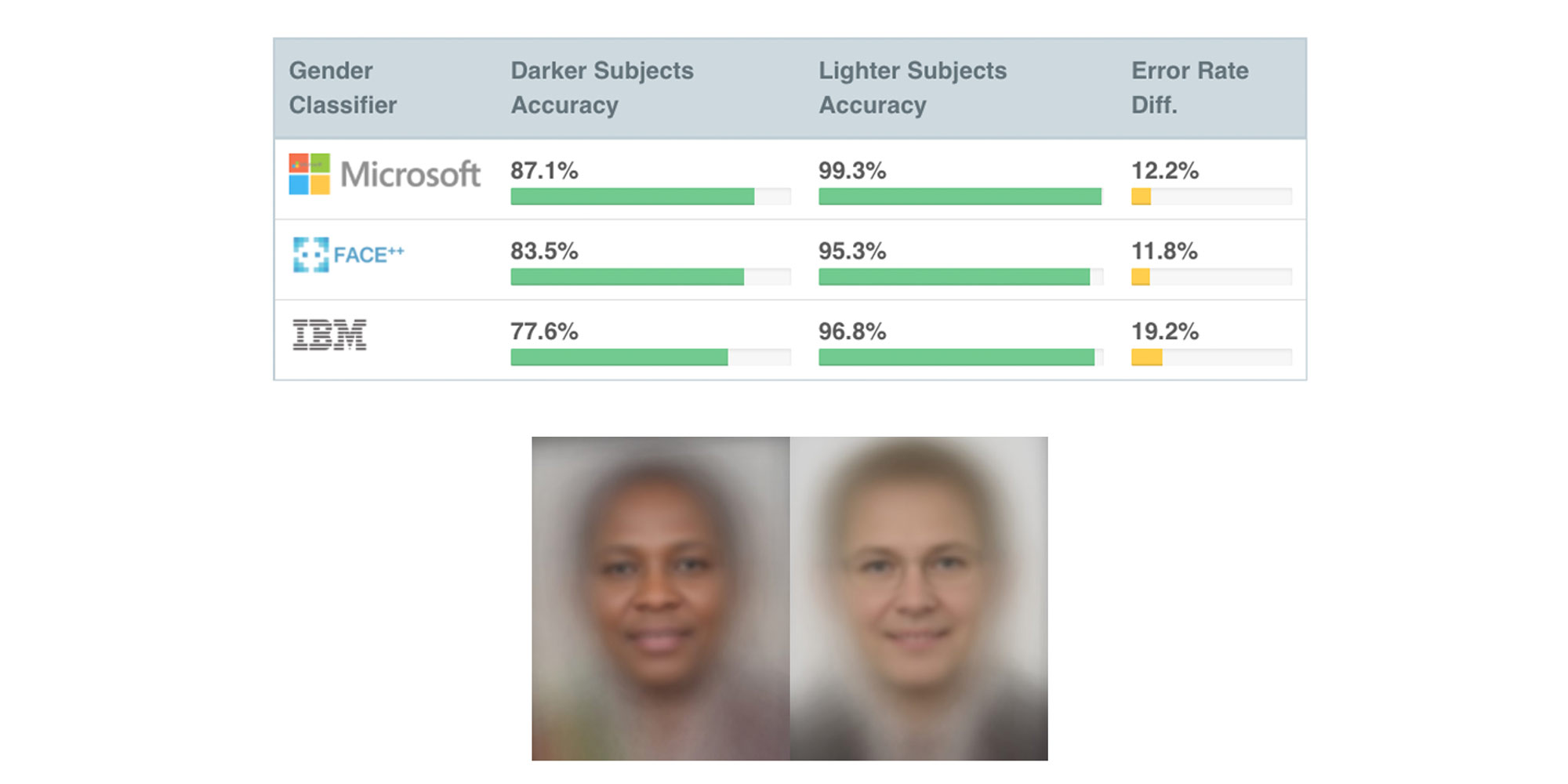

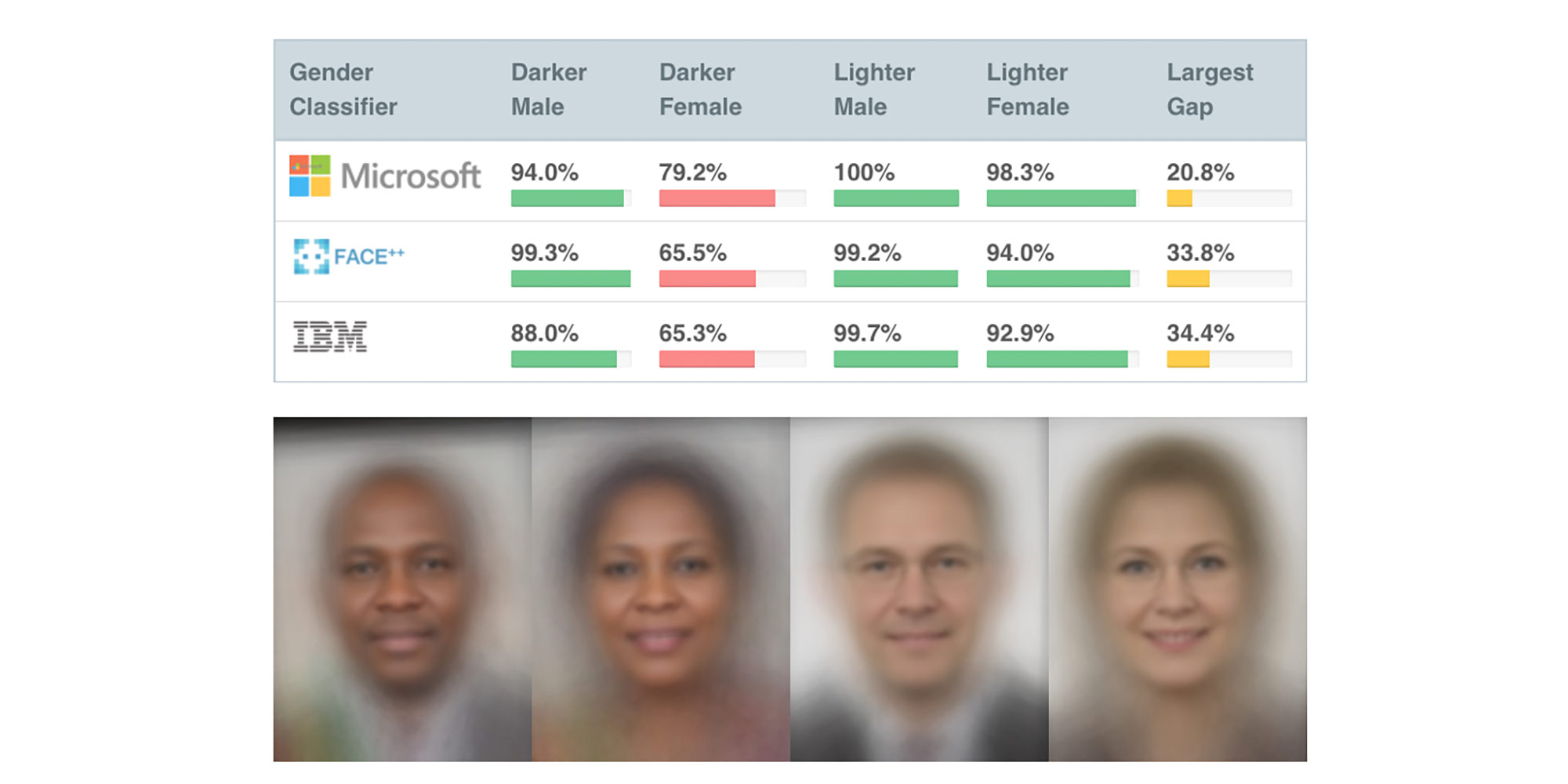

Joy Buolamwini and Timnit Gebru investigated bias in AI facial recognition programs. Their study shows that widely used applications already exhibit clear discrimination based on gender or skin color. One reason for the unfair results is flawed or incomplete datasets used to train the programs. In medicine this can be a problem: simple neural networks already match experts in detecting melanomas (malignant skin lesions).

However, information about skin color is crucial for this process. The two researchers therefore created a new benchmark dataset that makes new comparisons possible. It contains data on 1,270 parliamentarians from three African and three European countries. With it, Buolamwini and Gebru created the first dataset that covers all skin types and can also be used to test facial gender classification.

Project Credits:

- Joy Buolamwini, Founder of the Algorithmic Justice League and Poet of Code

- Buolamwini, J., Gebru, T.: “Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification.” Proceedings of Machine Learning Research 81:1-15, 2018, Conference on Fairness, Accountability, and Transparency

- This project is presented in the framework of the European ARTificial Intelligence Lab and co-funded by the Creative Europe Programme of the European Union.

Biographies:

Joy Buolamwini (US) is a graduate researcher at the Massachusetts Institute of Technology who researches algorithmic bias in computer vision systems. She founded the Algorithmic Justice League to create a world with more ethical and inclusive technology. Her TED Featured Talk on algorithmic bias has over 1 million views. Her MIT thesis methodology uncovered large racial and gender bias in AI services from companies like Microsoft, IBM, and Amazon. Her research has been covered in over 40 countries, and as a renowned international speaker she has championed the need for algorithmic justice at the World Economic Forum and the United Nations.

She serves on the Global Tech Panel convened by the vice president of the European Commission to advise world leaders and technology executives on ways to reduce the harms of AI. In late 2018, in partnership with the Georgetown Law Center on Privacy and Technology, Joy launched the Safe Face Pledge, the first agreement of its kind that prohibits the lethal application of facial analysis and recognition technology.

As a creative science communicator, she has written op-eds on the impact of artificial intelligence for publications like TIME Magazine and The New York Times. In her quest to tell stories that make daughters of diasporas dream and sons of privilege pause, her spoken word visual audit “AI, Ain’t I A Woman?”, which shows AI failures on the faces of iconic women like Oprah Winfrey, Michelle Obama, and Serena Williams, and the Coded Gaze short have been part of exhibitions ranging from the Museum of Fine Arts, Boston to the Barbican Centre, UK. A Rhodes Scholar and Fulbright Fellow, Joy has been named to notable lists including the Bloomberg 50, Tech Review 35 under 35, BBC 100 Women, Forbes Top 50 Women in Tech (youngest), and Forbes 30 under 30. Fortune magazine named her “the conscience of the AI revolution.” She holds two master’s degrees, from Oxford University and MIT, and a bachelor’s degree in computer science from the Georgia Institute of Technology.

https://www.poetofcode.com/

Timnit Gebru (ETH) is an Ethiopian computer scientist and the technical co-lead of the Ethical Artificial Intelligence Team at Google. She works on algorithmic bias and data mining. She is an advocate for diversity in technology and is the cofounder of Black in AI, a community of black researchers working in artificial intelligence. Prior to joining Google, Timnit did a postdoctorate at Microsoft Research, New York City in the FATE (Fairness, Transparency, Accountability, and Ethics in AI) group, where she studied algorithmic bias and the ethical implications underlying any data mining project. Timnit received her PhD from the Stanford Artificial Intelligence Laboratory, studying computer vision under Fei-Fei Li. Her thesis pertains to data mining large-scale publicly available images to gain sociological insight, and to the computer vision problems that arise as a result. The Economist, The New York Times and others have covered part of this work. Some of the computer vision areas Timnit is interested in include fine-grained image recognition, scalable annotation of images, and domain adaptation. Prior to joining Fei-Fei’s lab, Timnit worked at Apple designing circuits and signal processing algorithms for various Apple products including the first iPad. She also spent an obligatory year as an entrepreneur (as all Stanford undergrads seem to do). Timnit’s research was supported by the NSF GRFP fellowship and the Stanford DARE fellowship.