Anton Bruckner Private University

-

Listening Room

Production Studio ABPU

During the symposium, the ABPU’s Production Studio will become the Listening Room. The studio's 20.2 speaker array will allow visitors to experience multichannel music in a relaxed way. Visitors can enter, exit and move freely through the space while listening to thirty fixed-media pieces selected from the call for contributions.

-

Medium Sonorum Concert

Sonic Lab, ABPU

Dive deep into freshly made immersive electronic music on the Sonic Lab's 20.4 system. This concert presents spatial music, selected from the call for contributions, that relates to Manufacturing Audible Truth. The program shows a variety of styles of multichannel music from around the world.

-

Machine Mourning: Beyond the Void of Extractive Listening

Daria Kozlova (UK) & Arwina Afsharnejad (DE)

Under the scorching sun on the top of the melting AI iceberg, Grimes peacefully reads The Communist Manifesto to David Guetta deepfaking Eminem’s vocals. Google guru Ray Kurzweil floats by on a drifting ice floe. Kurzweil is waving hello and continuing a lively conversation with the AI-reincarnated double of his deceased father. Only distant twitter…

-

Hate-follow me

Helga Arias (SP/CH)

Since the advent of the Twitter revolution and the widespread use of the internet, social media has profoundly transformed our interactions and responses during crises. It has gained a powerful and faster-than-ever influence on what is known as crowd behavior. Recent phenomena, such as the proliferation of false information and the emergence of influencers who…

-

AI Explainability, Embodiment and Sound in Space: A Case Study

Nicola Privato (IT)

RAVE is a neural synthesis model for real-time performance. It compresses high-dimensional input data into lower-dimensional representations, known as latent spaces, that can be navigated by the performer in visual programming environments through dedicated objects.

-

Breathless

Mona Hedayati (IR/CA)

My lecture-performance begins with a brief overview of a semi-generative, live sensor-to-sound performance conceptually rooted in my affective response to socio-political oppression to which I am personally tied. On a formal level, I will underscore the qualities of AI-powered immersive audio, channeled into bodies, that moves from particles to wave, from matter to event to…

-

Vibrate ResonAIte

Julian Rubisch (AT), Tobias Leibetseder (AT)

Vibrate ResonAIte connects human and artificial creativity in a dialogue of images and sound. Mutual inspiration and provocation result in blending and diffusion of the participating minds until physical boundaries seem to disappear.

-

SADISS, a smartphone-based sound system

Volkmar Klien (AT), Martina Claussen (DE/AT), Angelica Castello (MX/AT), Tobias Leibetseder (AT)

SADISS is a web-based application developed at Anton Bruckner Private University (Linz, Austria) that bundles smartphones into monumental yet intricate sound systems or choirs.

-

No One Died

Yunyu Ong (AU)

Dive into a world of deceptive truths and immersive storytelling at the No One Died workshop! Explore multi-perspective sound and music narratives that challenge convention through spatial audio experiences.

-

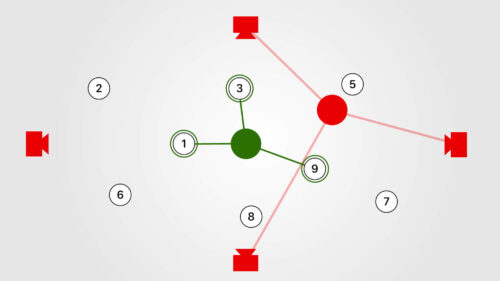

MoNoDeC: The Mobile Node Controller Platform

Nick Hwang (US), Anthony T. Marasco (US), Eric Sheffield (US)

The workshop will demonstrate the use of the MoNoDeC tool in the context of the composition Punctuated Equilibrium. Participants will learn about MoNoDeC, Punctuated Equilibrium and Collab-Hub, and take turns as audience and control members.