The potential of artificial intelligence in musical applications has been demonstrated by various projects in recent years. Modern AI can certainly be seen as another step towards expanding musical possibilities through the use of technology.

The connection between music and technology has a long tradition, from the first instruments that served as a complement to the human voice to the mechanical and electronic instruments (or automata) that have emerged throughout human history.

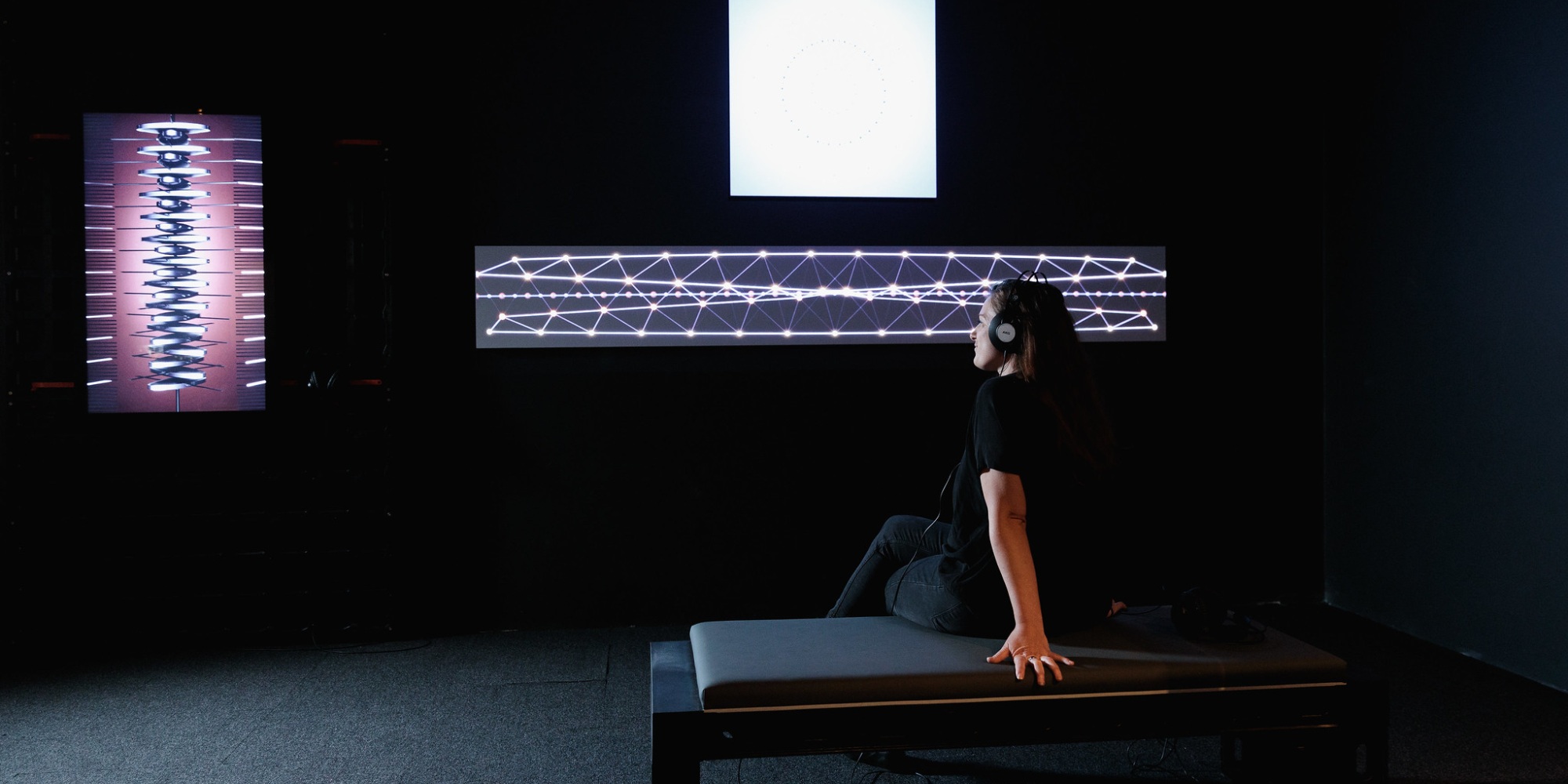

The Ars Electronica Center has been dedicating a special exhibition to this topic since 2019. Entitled “AI x Music,” the exhibition uses a wide variety of exhibits to trace more than 1,000 years of the cultural and technological history of musical automata and of machines and systems for composition and reproduction.

As early as the 9th century, the Banū Mūsā ibn Shākir of Baghdad described what is considered the first programmable music automaton, al-Āla allatī tuzammir bi-nafsihā (“the instrument that plays by itself”). A reproduction of it is on display in “AI x Music.” The exhibition extends from these beginnings to the Bösendorfer 290 Imperial CEUS computer grand piano, which is equipped with one of the most advanced and precise integrated recording and playback systems available today.

The Ars Electronica Futurelab linked the grand piano to four applications from current research on artificial intelligence in music. Performance RNN from Google’s Magenta project is based on a recurrent neural network (RNN) that generates polyphonic music on its own; its improvisations can still sound rather awkward. Piano Genie, also from Magenta, lets visitors play the 88-key piano with an 8-key controller, an interface that makes music making simpler and more accessible for everyone. MuseNet, an AI model from the research organization OpenAI, can imitate a variety of musical styles. Like the GPT-2 language model, which is also presented at the Ars Electronica Center, MuseNet was trained on an exceptionally large data set of examples. From this pool of musical pieces, with their recurring patterns of harmony, rhythm and note sequence, the statistical model has learned essential structures from which it can now generate something new.

The possibilities of true-to-original recording with the Bösendorfer 290 Imperial CEUS are demonstrated using a recording of Maki Namekawa and Dennis Russell Davies’ interpretation of Ravel’s Ma Mère l’Oye. Highly sensitive sensors record every sound-producing movement in detail, from the speed of each keystroke to the position of the pedals, so that the interpretation can be reproduced accurately. The various pieces and AI improvisations are accompanied by visualizations by the artist Cori O’Lan. For both the piano interpretation and the piano phase improvisations, new visualizations were created in co-production with the Ars Electronica Futurelab.

The exhibition addresses not only the historical connection between music and technology, but also the tension many visitors feel between the emotional, deeply sensual experience of music and the mathematics and mechanics that are perceived as purely rational and devoid of sensuality. Gaining social acceptance for AI in music seems to be far more challenging, and to cause more irritation, than it is for the automated production of texts, statements and decisions. The programs presented by the Ars Electronica Futurelab are intended to let visitors hear for themselves how these technologies are used in music and what results they produce, and to give them the basic knowledge they need to form their own assessments and opinions.

Credits

Ars Electronica Futurelab: Ali Nikrang, Arno Deutschbauer

Realtime Visuals: Cori O’Lan

PARTNER: Ars Electronica Center