At the Fraunhofer Institute for Medical Image Computing MEVIS, researchers develop real-world software solutions for computer-aided medicine and enhance information integration and decision support for clinicians. The goals of the work are to detect diseases earlier and more reliably, customize treatments for each patient, and make therapeutic success measurable.

Patients with bronchial or lung diseases have been examined with X-rays for over a century. Thanks to technical progress, CT and MR technologies are now used more and more frequently to produce series of detailed, three-dimensional layered images of the interior of the body. These devices have become faster and now produce better images of a greater number of patients in less time. Meanwhile, automated analytical tools are improving the diagnostic capabilities of the computer. Over recent decades, a wealth of digital information has emerged, residing in images and insights, but dispersed worldwide in the archives of clinics and medical practices.

On this basis, researchers are now developing so-called deep neural networks. These networks learn to analyze medical images layer by layer and thereby recognize anomalies. The first layers extract frequent local patterns, and subsequent layers integrate these into regional and increasingly wider contextual characterizations. This hierarchy of characteristics enables the networks to differentiate local structures such as a lung tumor and to evaluate the context, for instance whether the tumor is in a lung or the liver.

This 3-D film produced by Fraunhofer MEVIS provides a simple, understandable explanation of deep neural networks. Beginning in May, it will be screened regularly in Deep Space 8K. We got a preview from Markus Wenzel, a computer scientist working on clinical applications of machine learning algorithms at Fraunhofer MEVIS.

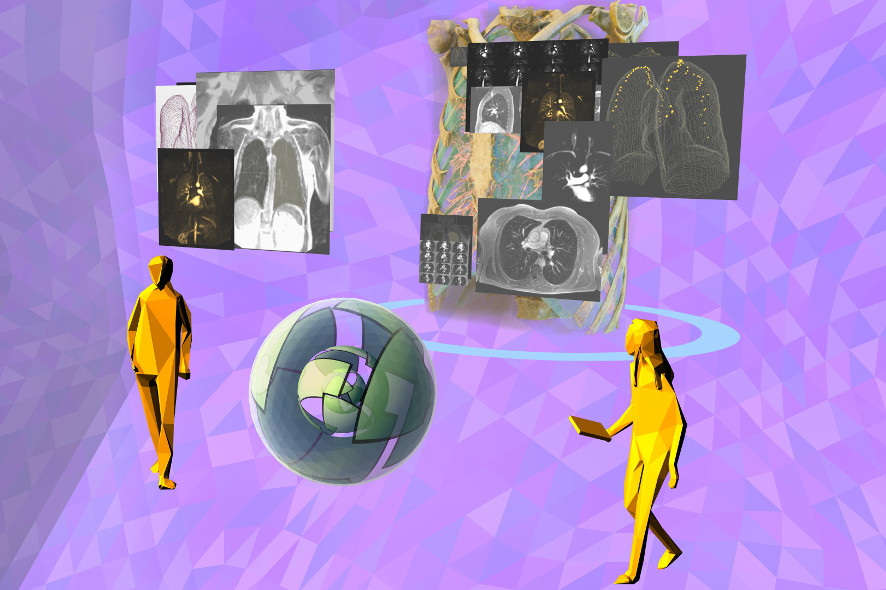

Patient-Doctor-AI-Lung-Examination: The recorded medical data are no longer viewed first by the radiologist. Instead, the neural networks extract the complex relationships from the information and summarize them. The doctor uses the resulting knowledge and can share it with the patient.

Before you joined Fraunhofer MEVIS, you worked at a clinic in Florida. Did the close cooperation with physicians influence your approach as a computer scientist?

Markus Wenzel: Yes, it made a big impact. I intentionally avoided going to university clinics; instead, I went to facilities that offered scant latitude for research. That was always a good filter to keep out ideas too far removed from actual practice. And now, I’m even more delighted that the radiologists and other clinical colleagues I work with on a daily basis in Hamburg have shown such tremendous enthusiasm for the subject of deep neural networks and cognitive computing. I also have plenty of opportunities to question them about their work and the methods they use, and thus to find out what support we can provide to help them most effectively.

How has the radiologist’s role been changed by the increasing use of new imaging procedures—for instance, in the examination of bronchial or lung illnesses?

Markus Wenzel: The digitization of medical imaging has meant that radiologists can use the computer as an aid. At first, this was primarily a matter of being able to display the images faster, which in turn led to more images being acquired using CT, MR, X-ray and ultrasound. Physicians realized that they could see and differentiate among structures in the body that hadn’t been visible in such high detail before, and the equipment manufacturers responded with even higher resolutions and faster devices that delivered more and more cross-sectional images per examination.

Today, radiologists can use imaging to answer many more clinical questions than they ever could before, but the demands on their knowledge and the workload they have to handle have also never been greater. Consider, for example, monitoring the progress of a patient suspected of having lung cancer. In two scans taken six months apart, each consisting of approximately 500 to 1,000 individual images, all of the conspicuous nodules in the lung have to be located and correlated. For each pair of corresponding anomalies in the earlier and the current scan, the change has to be assessed, since that’s what provides the information for therapy decisions: benign follow-up or need for treatment? The physicians have to find, compare, measure and assess, and do so with huge quantities of data. Some of these steps are elementary cognitive tasks that are monotonous and tedious. So you can easily imagine that scientists would come up with the idea of replicating the instrument that enables human beings to solve cognitive problems: the brain, which consists of neurons, all of which are interconnected in a gigantic network. And the network is flexible; it adapts to the task at hand within certain limits. This is roughly what happens when a person learns a new skill.

Patient-Doctor-AI-Interaction: In the future, doctors will compare their patient’s medical images and treatment information with those of other patients for more precise, customized therapy.

What are neural networks?

Markus Wenzel: Neural networks are a model of the brain constructed of additions and multiplications that emulate what happens in a neuron in the brain. Via synapses, a neuron receives impulses from other neurons, amplifies or suppresses them, and adds everything up. If the incoming impulses are strong enough, the neuron sends an impulse to all of its downstream neurons. This is the basis of all our capabilities, everything we can do with the power of our brain. That includes cognitive abilities, for example seeing and categorizing what we see, or hearing and understanding what’s said to us. That the cognitive apparatus of human beings (and, of course, of many animals as well) is so efficient is presumably attributable to its layered construction. Take the eye as an example: The retina already filters certain movements and directions. And this continues on all the way to areas of the brain that carry out more complex perceptual functions.
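The description of a neuron as "additions and multiplications" can be made concrete with a small sketch. This is only an illustration, not Fraunhofer MEVIS code: the function name `neuron`, the weights and the threshold are all hypothetical values chosen for the example. Each incoming impulse is multiplied by a weight (positive weights strengthen it, negative weights suppress it), the results are added up, and the neuron fires only if the sum reaches the threshold.

```python
def neuron(impulses, weights, threshold):
    """Toy model of a single neuron (hypothetical illustration).

    Each impulse is multiplied by its synapse weight, the products are
    added up, and the neuron fires (returns 1) only if the sum reaches
    the threshold; otherwise it stays silent (returns 0).
    """
    total = sum(w * x for w, x in zip(weights, impulses))
    return 1 if total >= threshold else 0


# Hypothetical synapse weights: two strengthen, one suppresses.
weights = [0.9, -0.4, 0.6]

# All three upstream neurons send an impulse.
fires_when_all_active = neuron([1.0, 1.0, 1.0], weights, threshold=1.0)

# Only the first upstream neuron sends an impulse.
fires_when_one_active = neuron([1.0, 0.0, 0.0], weights, threshold=1.0)

print(fires_when_all_active, fires_when_one_active)
```

Real deep neural networks typically replace the hard threshold with a smooth function and stack many such units into layers, but the multiply-and-add core is the same.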

And what’s a cognitive computer?

Markus Wenzel: Cognitive computers emulate cognitive functions of the brain such as seeing and hearing. The most widespread approach to this is with so-called deep neural networks. Just like the areas of the brain that perform cognitive functions, they’re organized in layers, often quite a few of them, sometimes 50 or more. However, in contrast to those in the human brain, these layers initially have no function. For the neural network to be able to carry out a function, it has to be trained by showing it examples consisting of an image and a sort of explanation of what the neural network should find in it. In our example of lung cancer, the network is shown, on every clinical image of the lung, exactly where the anomalies are that the network will later have to identify and assess on its own. Showing the network a sufficient number of such examples enables it to learn what properties of the image it has to analyze on each layer in order to do its job. Once this has been learned, it can be called a cognitive machine. Strictly speaking, this is only one of the necessary building blocks of cognition, but we’re also working on additional components: explaining why a particular decision was made, the capacity to learn from feedback, and even the readiness to independently request feedback when the degree of uncertainty is too great for it to make a definite decision.
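Learning from labeled examples can be sketched in a few lines. The code below uses the classic perceptron learning rule on a single artificial neuron, which is a far simpler stand-in for the training of a deep network with dozens of layers. Everything in it is assumed for illustration: the two input features (imagine a hypothetical "brightness" and "roundness" score for an image region), the four training examples and the function names.

```python
def train_perceptron(examples, epochs=20, learning_rate=0.1):
    """Train one artificial neuron with the perceptron learning rule.

    `examples` is a list of (features, label) pairs, where the label is
    1 (anomaly) or 0 (normal). The weights start without any function
    and are nudged whenever the neuron's answer disagrees with the label.
    """
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            prediction = predict(weights, bias, features)
            error = label - prediction  # 0 if correct, +1 or -1 if wrong
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Return 1 if the weighted sum plus bias is positive, else 0."""
    activation = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if activation > 0 else 0


# Hypothetical training data: (brightness, roundness) -> anomaly label.
examples = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.2, 0.1), 0),
    ((0.1, 0.3), 0),
]

weights, bias = train_perceptron(examples)

# After training, the neuron reproduces the labels it was shown.
for features, label in examples:
    print(features, "label:", label, "predicted:", predict(weights, bias, features))
```

The same principle of showing examples, comparing the answer with the label and adjusting the weights carries over to deep networks, where the adjustment is usually computed by backpropagation across all layers.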

Deep-Neuronal-Net: A hierarchy of characteristics is created; for example, the network can ultimately distinguish a lung tumour from a blood vessel and assess the context, such as where a tumour is located.

How widespread is cognitive computing in medicine today?

Markus Wenzel: There are numerous very promising research projects in this field, and their number is increasing daily. Our clinical partners are enthusiastic and highly committed to this effort. And something that’s been an impediment in recent years has been eliminated: Approval has been granted for the use of cognitive computers as medical products for diagnostic purposes. So far this applies only in the U.S., but we have little doubt that Europe will soon follow suit. From my point of view, though, there are still the two fundamental questions of security and explainability, which researchers are now tackling. Security means that the system cannot be easily attacked. And I think it’s important that cognitive computing not be implemented in the field of medicine as a closed system, but rather that its results, decisions and recommendations can transparently be traced back to features of the input data. That’s what we call explainability.

What role does data protection play in this context?

Markus Wenzel: Basically, whether or not a particular set of data can be assigned to a certain individual is irrelevant to the process of training multiple cognitive algorithms. Accordingly, we can anonymize the data and break them up into such small packets that it’s impossible to reconstruct them. Moreover, it’s unnecessary to store the data beyond the end of the training period, which lasts only a few weeks. Therefore, it’s possible to use the data for a very short time and for a tightly circumscribed purpose, and nevertheless yield tremendous benefits. Thus, we can allay certain concerns such as that the data will be stored permanently, that people leave behind indelible digital trails about the state of their health, or that their data can be combined variously and arbitrarily with data gleaned from others.

But there are more subtle implications of an ethical and commercial nature. The data become valuable, and the ones who profit from their use are certainly not the ones who own them—i.e. the patients. Quite the contrary—if they want to share in the benefits, they might even have to pay for the additional analyses. On the other hand, creating this added value is, of course, quite an elaborate process. The possibility of using data to train algorithms that can improve the diagnostic process changes the relationship between patients and physicians as well as insurance companies and hospitals. The value of data strengthens the hand of patients. In my view, there are many indications that renegotiating this balance will lead to dramatic changes in the health care system.

Deep-Neuronal-Net-2: From sufficient training examples, the network can learn which properties of the medical images it must analyse in each layer in order to fulfil its task.

Markus Wenzel has been working on clinical applications of machine learning algorithms at Fraunhofer MEVIS since 2005. During this time, he has served as thesis advisor to numerous students and written his own dissertation on intelligent methods in breast cancer diagnosis. He has also initiated and managed several major international research projects. His current work focuses on designing projects that apply cognitive computing and AI methods, as well as developing and conducting machine learning courses at the university level and as continuing professional education.

Before your next visit to a Best of Deep Space 8K, ask if this film can be shown!

To learn more about Ars Electronica, follow us on Facebook, Twitter, Instagram et al., subscribe to our newsletter, and check us out online at https://ars.electronica.art/news/en/.