Location

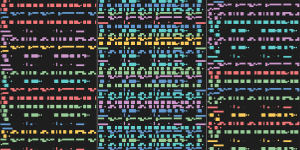

APPARATUM

panGenerator (PL)

Digital interface meets purely analogue sound. APPARATUM was inspired by the heritage of the Polish Radio Experimental Studio, one of the first studios in the world to produce electroacoustic music. Musically and graphically, the installation draws on “Symphony – Electronic Music” composed by Bogusław Schaeffer. Here, analogue sound generators based on magnetic tape and optical components are controlled via a graphic score with a digital interface. NOTE: You can create and listen to your own composition, print out your score, and retrieve it in the online archive of APPARATUM.

Voices from AI in Experimental Improvisation

Tomomi Adachi (JP), Andreas Dzialocha (DE), Marcello Lussana (IT)

Voices from AI in Experimental Improvisation is a project by Tomomi Adachi, Andreas Dzialocha and Marcello Lussana. They built an AI called “tomomibot” which learned Adachi’s voice and improvisation techniques using neural network algorithms. The performance raises questions about the logic and politics of computers in relation to human culture.

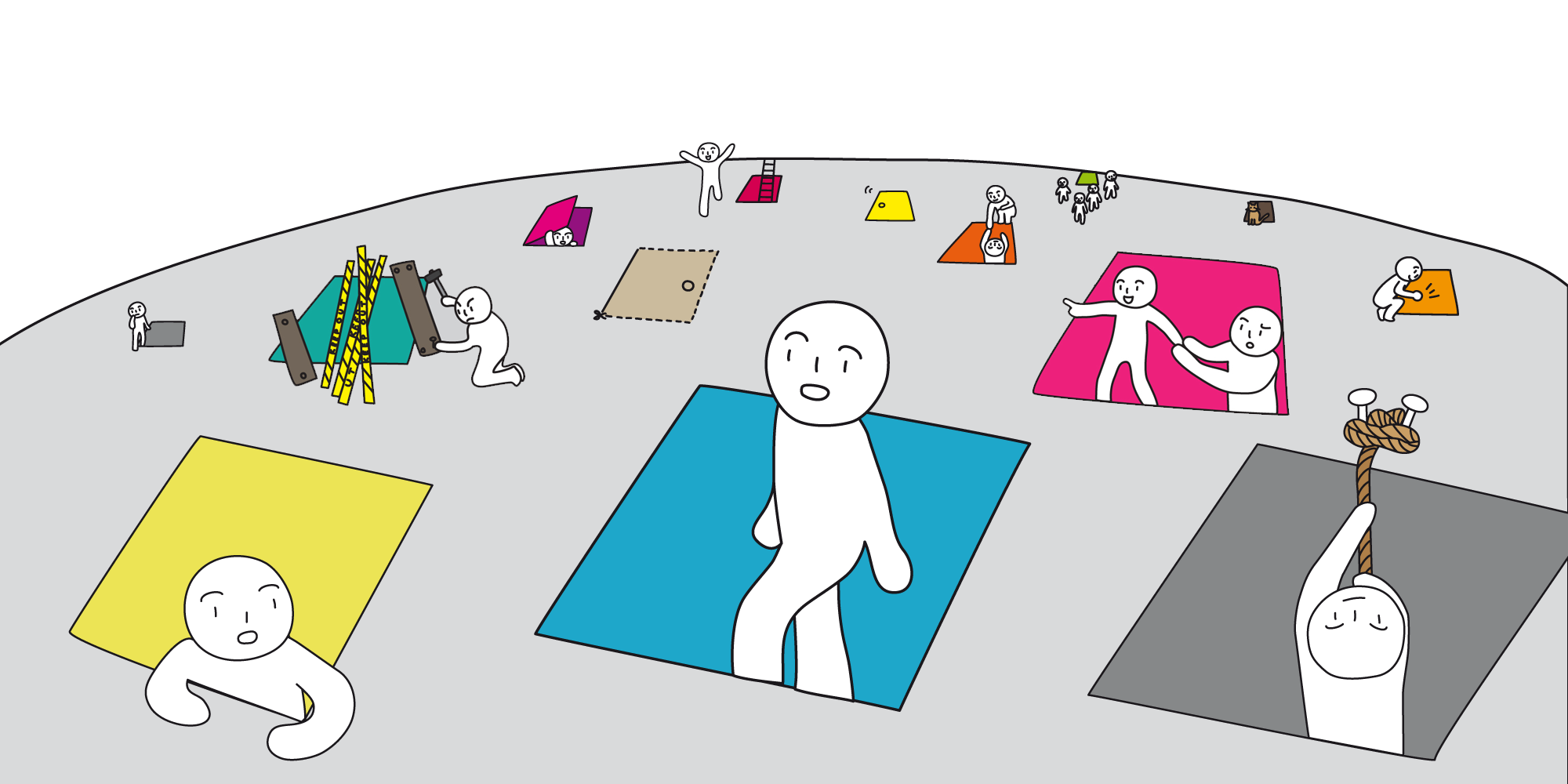

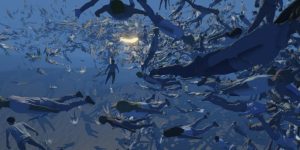

Emergence

Universal Everything (GB)

Emergence is an open-world environment and crowd performance. The virtual reality installation expresses the primal human desire to maintain individual identity while being part of a crowd. As the user navigates a crowd of thousands, shafts of light beckon them closer. As they touch the light, the environment responds in real time, continually challenging the user’s perception. In this installation, Universal Everything experiments with software-based improvisation and custom-coded crowd simulations.

GRAND JEU 2

Wolfgang Mitterer (AT)

The electronics are operated live and, coupled with a keyboard and controllers, act as a second organ with multiple possibilities. This results in a massive expansion of sound in every direction, from Baroque to Bruckner, and beyond.

Automatic Music Generation with Deep Learning

Ali Nikrang (AT)

In recent years, there has been a great deal of academic interest in applying deep learning to creative tasks such as generating texts, images, or music. This workshop focuses on current approaches to music generation. We will also discuss questions like: What makes musical data so special? What can music enthusiasts expect from these models? And how do listeners accept music composed by AI?

The Normalizing Machine

Dan Stavy (IL), Eran Weissenstern (IL), Mushon Zer-Aviv (IL)

The Normalizing Machine aims to identify and analyze the image of social normalcy. Facing the camera, each participant is asked to point out who looks “more normal” from a line-up of previously recorded participants. The machine adds the selection to its aggregated algorithmic image of normalcy.

ULTRACHUNK (unfortunately canceled)

Jennifer Walshe (IE), Memo Akten (TR)

What are the implications of using your voice to improvise with a neural network? Composer Jennifer Walshe and artist Memo Akten present ULTRACHUNK (2018), a neural network trained on a corpus of Walshe’s solo vocal improvisations. Here, Walshe wrangles with an artificially intelligent duet partner – one that reflects a distorted version of her own improvisatory language and individual voice.

Looped Improvisation

Ali Nikrang (AT), Michael Lahner (AT)

We generated several short sequences that are played as input in a loop. Even though the input stays the same, the application continues to create new outputs.

Music Traveler

Aleksey Igudesman (DE/AT), Julia Rhee (KR/US), Dominik Joelsohn (DE/AT), Ivan Turkalj (HR/AT)

Music Traveler is a marketplace that connects musicians and brings together, in one place, spaces with musical instruments, equipment, and services for the creative industry.

Nokia Bell Labs

Domhnaill Hernon (US)

An interactive experience fusing music and image. Users’ movements are transformed into a dynamically designed audio-visual experience through the Bell Labs Motion Engine.