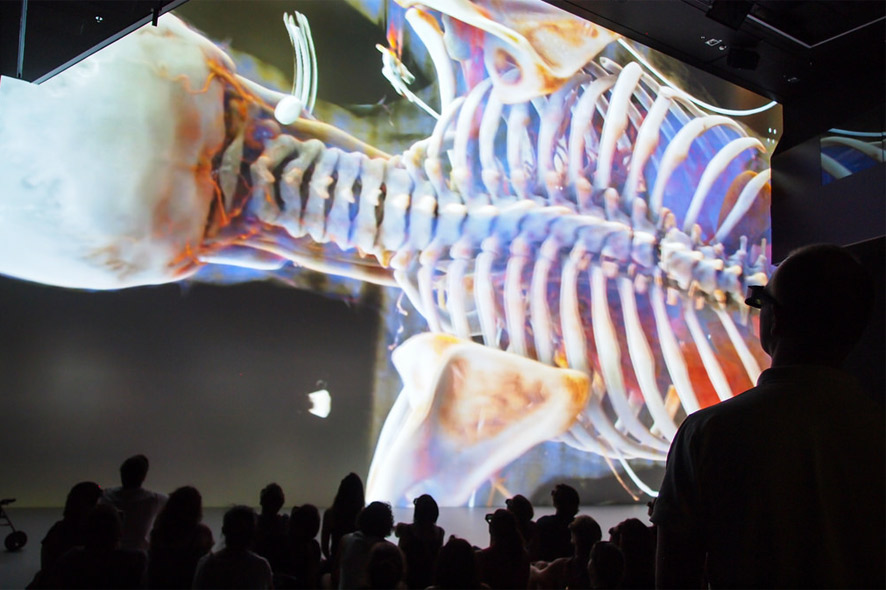

Computed tomography (CT), which makes it possible to peer inside the human body without resorting to a scalpel, is fascinating in its own right. Cross-sectional images derived from the raw data delivered by CT scanners have long provided physicians with essential information in a timely manner. Now Siemens Healthcare has adopted methods from the film industry to develop Cinematic Rendering: realistic three-dimensional depictions of the human body. You can behold the astounding results of this new imaging procedure in the Ars Electronica Center Linz’s new Deep Space 8K. Dr. Klaus Engel, Principal Key Expert at Siemens AG, and Dr. Franz Fellner of Linz General Hospital will present Cinematic Rendering at the next Deep Space LIVE on Thursday, October 15, 2015 at 8 PM, and during the upcoming Deep Space Weekend “The Universe Within” on Saturday, October 17, 2015 at 3 PM.

What’s new about these images? How is it possible to illuminate and tint organs, bones and muscles inside the body of a living human being? And what factors did the Ars Electronica Futurelab crew have to consider to be able to screen these 3-D visualizations in Deep Space 8K? Dr. Klaus Engel of Siemens Healthcare and Ars Electronica Futurelab Technical Director Roland Haring answered these and other questions.

Credit: Dorin Comaniciu (Siemens Healthcare), Franz Fellner (Radiology Institute AKH Linz, Medical Society Upper Austria), Walter Märzendorfer (Siemens Healthcare)

With the development of Cinematic Rendering, Siemens Healthcare has taken visualization of the human body to a whole new level. But tell me: what’s the use of photorealistic images in medicine? Hasn’t the imaging used up to now done a good job?

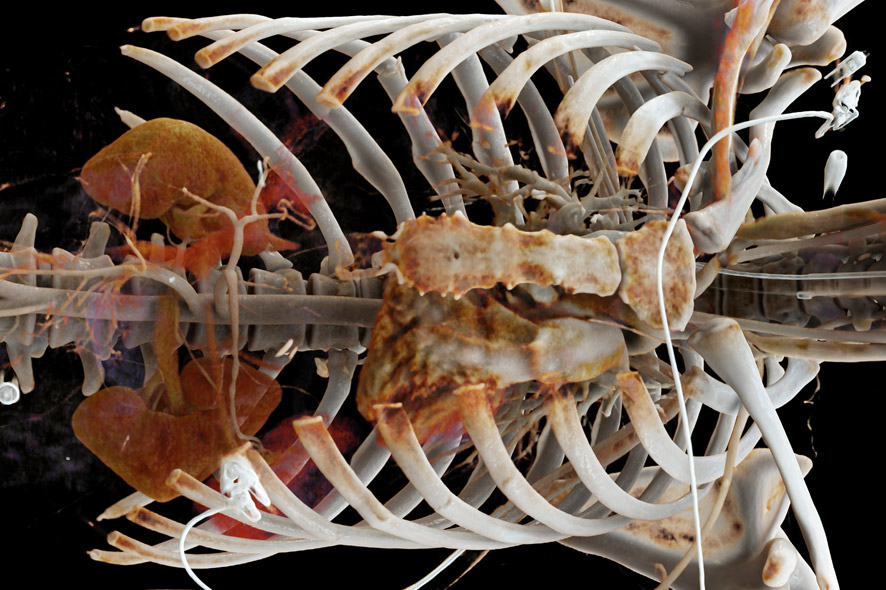

Klaus Engel: That’s true! We’re already able to compute highly detailed images of the human body. Nevertheless, those images can’t depict certain visual effects that occur in nature. Human perception has been trained to interpret the most subtly nuanced shadows in order to estimate shapes and distances. These shadings arise from the complex interaction of light with objects. At surfaces, light is reflected, refracted and scattered. But it also penetrates the material, where its spectrum shifts; it repeatedly changes direction and emerges from the material at another point. Until now, calculating the complex paths that light travels before it reaches the retina has demanded too much computing power, and the algorithms have been too slow.

Credit: Hospital do Coração, São Paulo, Brazil / www.siemens.de/presse

To generate realistic 3-D depictions of the human body, you draw on the artistic repertoire of animated filmmaking. What’s so cinematic about this imaging?

Klaus Engel: Just like the film industry, we use photographically captured spherical panoramas to illuminate the scene. Filmmakers use these panoramas to believably integrate digital characters, such as Gollum in “The Lord of the Rings,” into scenes with live actors. A digital character is usually inserted long after the scene was shot, so to light it believably, a spherical panorama is recorded at the moment the scene is filmed and later used to calculate the character’s lighting. As a result, the light, shadows and coloring of the scene match for both the actors and the digitally inserted characters.

We use such photographic spherical panoramas to give renderings of medical data natural illumination. In this way, we can create highly complex lighting environments that make anatomical structures stand out starkly. The high dynamic range of the resulting images makes it possible to achieve very dramatic effects that make significant details easier to notice. This also opens up the option of modeling complex camera effects such as those produced by variable apertures, exposure times and optics. In effect, we have a completely virtual camera at our disposal, which we can also use to model artistic effects that maximize the amount of information the images convey.
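Lighting a scene from a spherical panorama starts with a lookup: for any direction, find the pixel of the panorama that shows the light arriving from that direction. The sketch below assumes the common equirectangular layout (longitude across, latitude down) with +y pointing up; the function names, the axis convention and the tiny in-memory panorama are hypothetical, and the sketch says nothing about how Siemens implements its lighting.

```python
import math

def direction_to_equirect_uv(x, y, z):
    """Map a direction to (u, v) in [0, 1] on an equirectangular panorama.

    Assumed convention (illustrative only): +y points up, v = 0 is the top
    row of the panorama, and u wraps once around the horizon.
    """
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    x, y, z = x / length, y / length, z / length
    theta = math.acos(max(-1.0, min(1.0, y)))  # polar angle measured from +y
    phi = math.atan2(z, x)                      # azimuth in (-pi, pi]
    u = (phi + math.pi) / (2.0 * math.pi)
    v = theta / math.pi
    return u, v

def sample_panorama(panorama, direction):
    """Nearest-neighbour lookup of the light arriving from `direction`.

    `panorama` is a list of rows, each a list of (r, g, b) radiance values;
    a real HDR environment map would be loaded from an image file instead.
    """
    height = len(panorama)
    width = len(panorama[0])
    u, v = direction_to_equirect_uv(*direction)
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return panorama[row][col]

# Usage: a 2 x 4 panorama with bright sky above and dark ground below.
sky, ground = (1.0, 1.0, 1.0), (0.1, 0.1, 0.1)
panorama = [[sky] * 4, [ground] * 4]
print(sample_panorama(panorama, (0.0, 1.0, 0.0)))  # (1.0, 1.0, 1.0)
```

A production renderer would typically integrate many such samples per shaded point, weighting each by how the surface or tissue responds to light, and would interpolate between pixels rather than take the nearest one.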

What steps are necessary to be able to generate photorealistic 3-D depictions from the data delivered by a CT scanner?

Klaus Engel: First, cross-sections of the body are reconstructed from the data captured by the scanner. Stacking many such cross-sectional images produces a 3-D volume. In this volume, we can simulate all possible paths along which light can propagate. Thanks to high-performance graphics cards and an algorithm that finds only those light paths that can reach the viewer’s eye, we can now simulate the complex interaction of light with the medical data. The resulting images are much easier to understand, even for untrained viewers. This, in turn, opens up new possibilities for communication between physicians and patients and for educating medical students using images of living patients. The new procedure also has enormous potential in diagnosing illnesses, but studies on this subject are still in progress.
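The first two steps, stacking slices into a volume and sending light through it, can be illustrated with a deliberately simplified sketch. Instead of the physically based simulation of many light paths described here, it uses classic emission-absorption ray marching: one straight ray per pixel along the stacking axis, composited front to back. The function names, the normalized [0, 1] voxel values and the `opacity_of` mapping are hypothetical.

```python
def stack_slices(slices):
    """Stack equally sized 2-D slices (lists of rows) into a volume indexed [z][y][x]."""
    if not slices:
        raise ValueError("need at least one slice")
    rows, cols = len(slices[0]), len(slices[0][0])
    for s in slices:
        if len(s) != rows or any(len(r) != cols for r in s):
            raise ValueError("all slices must have the same shape")
    return [[list(row) for row in s] for s in slices]

def march_ray_z(volume, y, x, opacity_of):
    """Front-to-back emission-absorption compositing along z at pixel (y, x).

    `opacity_of` maps a voxel value to an opacity in [0, 1]; the voxel value
    itself is used as a grey-level emission (a simplifying assumption).
    Returns the accumulated brightness and the remaining transmittance.
    """
    color = 0.0
    transmittance = 1.0  # fraction of light from deeper voxels still reaching the eye
    for z in range(len(volume)):
        value = volume[z][y][x]
        alpha = opacity_of(value)
        color += transmittance * alpha * value
        transmittance *= 1.0 - alpha
        if transmittance < 1e-3:  # early ray termination: nothing behind is visible
            break
    return color, transmittance

# Usage: a transparent slice in front of a fully opaque, bright slice.
volume = stack_slices([[[0.0]], [[1.0]]])
print(march_ray_z(volume, 0, 0, lambda v: v))  # (1.0, 0.0)
```

Early ray termination, which stops the loop once almost no light can get through, is a common speed-up in volume renderers.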

In conjunction with “The Universe Within,” Deep Space 8K morphs into a futuristic virtual anatomy theater. What possibilities are inherent in the environment that the Ars Electronica Futurelab developed when it comes to depicting medical data?

Roland Haring: Deep Space 8K is a unique multi-user environment that facilitates interaction and collaboration. Whereas conventional virtual-reality installations are limited to a handful of users, in Deep Space 8K more than 120 people can immerse themselves in three-dimensional worlds simultaneously. This is made possible by the size of the physical space and the high resolution of the projections. And this is precisely Deep Space’s strength in visualizing medical data, which, given its wealth of detail, benefits tremendously from precise depiction. Various interfaces, some of them highly experimental, enable visitors to explore and experience these worlds of imagery.

How detailed are the depictions produced by Cinematic Rendering? And how are the colors of bones, muscles and organs actually determined?

Klaus Engel: A modern CT scanner has a resolution of approximately half a millimeter, which means that we can gather extremely detailed anatomical information and use it to generate very detailed images. Of course, a CT scanner doesn’t register any color information from the body; rather, it measures how strongly x-rays are attenuated within the body. Bones, for example, attenuate the rays more than muscle tissue does and can thus be colored differently by means of a transfer function, which assigns a color and a degree of transparency to each attenuation value and thus to each type of material. Accordingly, we can give muscle tissue and bones different colors, or make soft tissue transparent in order to look deeper inside the body. However, organs can’t be told apart purely on the basis of x-ray absorption. What we do have nowadays are highly advanced image recognition procedures that automatically identify organs and structures and can extract them from the scan.
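A transfer function of this kind can be sketched as a piecewise-linear lookup from CT value to color and opacity. CT values are commonly expressed in Hounsfield units (HU), where water is 0 and air is about -1000; the specific control points, colors and opacities below are illustrative assumptions, not those used by Cinematic Rendering, and `transfer_function` is a hypothetical name.

```python
from bisect import bisect_right

# Hypothetical control points: (CT value in HU, (r, g, b, opacity)).
# The HU landmarks are standard (air about -1000, fat about -100, muscle
# about +40, bone from a few hundred HU upward); the colors are illustrative.
CONTROL_POINTS = [
    (-1000.0, (0.0, 0.0, 0.0, 0.0)),   # air: fully transparent
    (-100.0,  (0.9, 0.7, 0.5, 0.0)),   # fat: still transparent
    (40.0,    (0.8, 0.2, 0.2, 0.05)),  # soft tissue / muscle: faint red
    (400.0,   (1.0, 1.0, 0.9, 0.8)),   # bone: bright, mostly opaque
    (3000.0,  (1.0, 1.0, 1.0, 1.0)),   # very dense material: white, opaque
]

def transfer_function(hu, points=CONTROL_POINTS):
    """Piecewise-linear mapping from a CT value (HU) to an (r, g, b, opacity) tuple."""
    values = [p[0] for p in points]
    if hu <= values[0]:
        return points[0][1]
    if hu >= values[-1]:
        return points[-1][1]
    i = bisect_right(values, hu)  # values[i - 1] <= hu < values[i]
    (h0, c0), (h1, c1) = points[i - 1], points[i]
    t = (hu - h0) / (h1 - h0)
    return tuple(a + t * (b - a) for a, b in zip(c0, c1))

# Usage: a value halfway between muscle (40 HU) and bone (400 HU)
# yields the color and opacity halfway between those two control points.
print(transfer_function(220.0))
```

Making soft tissue transparent, as described above, amounts to lowering the opacity of the control points in the soft-tissue range; swapping in a different list of control points gives a different look for the same data.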

Credit: Martin Hieslmair

Do the data from the CT scanner have to be post-processed for use in Deep Space 8K?

Roland Haring: In principle, this project works with the same kind of medical data that any modern hospital produces. But these data are monochrome, since they’re actually density measurements. As mentioned, the colors are calculated afterwards by a special computer algorithm. For various anatomical data sets, there are predefined color tables that can be applied. The resulting visual impression is highly plausible.

Would these real-time visualizations of the human body have been possible in the “old” Deep Space?

Roland Haring: No, for a variety of reasons. First of all, the images provided by the new projectors in Deep Space 8K contain four times as many pixels as before, so the resolution is twice as high along each axis. Secondly, the old equipment was already approaching the end of its useful life, so its brightness was declining. Third, with the old computer processors, we would have had no way to display such visualizations at a sufficiently fluid frame rate. Something else that would have made it impossible with the old infrastructure is that, for stereoscopic material, we have to render all pictorial content separately for the left and right eyes, which effectively doubles the required frame rate to 120 Hz.
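The two numbers in this answer can be checked with a little arithmetic. A pixel count is width times height, so four times the pixels means each dimension grows by the square root of 4, a factor of 2. Likewise, 120 Hz shared between two eyes implies 60 Hz per eye (inferred from the 120 Hz figure, not stated outright), which leaves roughly 8.3 ms to render each individual image. The function names are hypothetical.

```python
def per_axis_scale(pixel_ratio):
    """Linear resolution gain along each axis for a given increase in total pixel count."""
    return pixel_ratio ** 0.5

def stereo_budget(per_eye_hz):
    """Total frame rate and per-frame time budget (ms) when each eye gets its own image."""
    total_hz = 2 * per_eye_hz
    ms_per_frame = 1000.0 / total_hz
    return total_hz, ms_per_frame

print(per_axis_scale(4))  # 2.0
print(stereo_budget(60))  # (120, 8.333...)
```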

Credit: Dorin Comaniciu (Siemens Healthcare), Franz Fellner (Radiology Institute AKH Linz, Medical Society Upper Austria), Walter Märzendorfer (Siemens Healthcare)

What will imaging procedures be like in 2025? What items are on your wish list?

Klaus Engel: I predict that photorealistic images like these will be routine in 10 years. Anyone who’s interested, physician or patient, will be able to use a tablet to view and analyze the medical images from a digital patient file. This capability will greatly strengthen people’s awareness of their own bodies and their own health. But for this to happen, the technology has to become much more user-friendly for laypeople. Organs and other anatomical structures must be recognized automatically and depicted in a way that lets users immediately understand what the images show and indicate. The computer will certainly be able to point out changes that have occurred, but the final diagnosis will generally remain the physician’s responsibility.