Mirror Mirror

by

Chelsi Goliath

In collaboration with Christopher Theys

Concept

Mirror Mirror on the wall, show us the fairest truths of all. Unveiling the biases in machines, algorithms and AI.

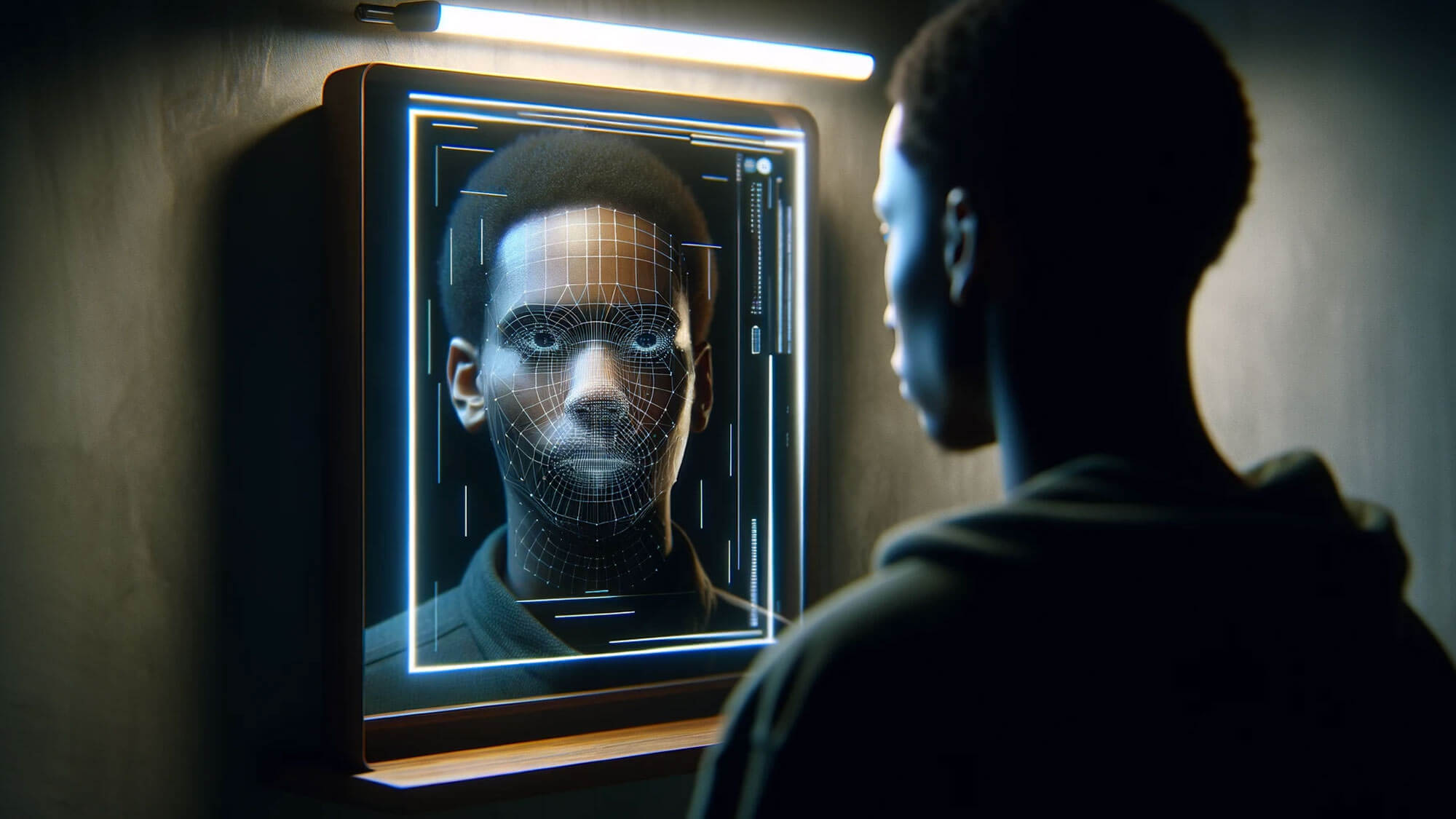

Our project investigates the vital question of creating unbiased technology, specifically in AI and algorithms, for a more equitable future. We initially aimed to eradicate technological biases but realized the complexity of these issues, leading to an exploratory approach without definitive solutions. Our centerpiece, the “Bias Mirror” installation, serves as a literal and metaphorical reflection. It transcends being mere technology, emerging as an artistic medium that provokes introspection about AI biases and personal prejudices.

The Bias Mirror shifts the focus from providing answers to sparking questions and self-reflection. It’s a catalyst for recognizing societal biases and personal involvement in these systems. The installation’s strength lies in its ability to embrace complexity, offering a space for critical thought and personal discovery. It reveals biases in digital frameworks and personal biases, urging users to confront prejudices in their interactions with technology and each other.

Our collaborative approach was key, transcending disciplines and pooling diverse insights to create a multifaceted understanding of bias. This synergy ensured an inclusive environment, crucial for addressing bias and representation issues. Our introspective methodology fostered a mindful development process, contributing to broader discussions on equity in technology.

We adopted design thinking, empathizing with users, defining biases, ideating solutions, and rigorously testing our concepts. This human-centric approach transformed AI bias from an abstract concept into a tangible, interactive experience.

We used data science methods, including algorithmic analysis and machine learning, complemented by ethnographic research and critical theory analysis, to understand and mitigate AI biases holistically. Our analysis of datasets such as the Olivetti faces dataset, LFW, CelebA, and FairFace, along with CNNs and debiasing techniques, informed our understanding of how biases in AI arise in practice.
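One simple way to make such bias measurable is to compare a classifier's accuracy across demographic groups. The sketch below is illustrative only and does not reproduce the project's actual analysis: the function names `per_group_accuracy` and `accuracy_gap`, the group labels "A" and "B", and the toy labels and predictions are all assumptions made for this example.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Return the fraction of correct predictions for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        if truth == pred:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Return the difference between the best and worst group accuracy."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical toy data: true labels, model predictions, and a group
# label per sample. These values are invented for illustration.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

scores = per_group_accuracy(y_true, y_pred, groups)
gap = accuracy_gap(scores)
print(scores, gap)
```

A large gap between groups suggests that the model serves some people worse than others, which is the kind of deviation from neutrality the installation invites viewers to question. Accuracy is only one possible lens; error types such as false matches may matter more in a facial recognition setting.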

The novelty of our project lies in merging interactive art and technology. This integration elevates the project beyond technical solutions, creating an engaging, emotional, and intellectual user experience. The Bias Mirror is not just informative; it actively involves users, making abstract bias and fairness concepts personal and tangible. Its use of machine learning in an artistic context bridges the gap between complex AI technology and public engagement.

Our project poses the question: How can interactive art reflect and challenge hidden biases in technological systems? This underscores the role of art in revealing and questioning prejudices in technology and its broader societal implications.

Process Reflection by Chelsi Goliath

Our project embraced interdisciplinarity, integrating fields like technology, psychology, socio-economics, and the social sciences. This multifaceted approach was crucial in comprehensively understanding and addressing biases in AI. By merging the technicalities of AI and algorithm development with human behavioral insights and societal implications, we crafted a nuanced and effective interactive installation. This interdisciplinary strategy enriched our perspective, allowing us to delve deeply into the intricate relationship between technology and societal dynamics.

In the project’s early stages, we grappled with an overload of information and diverse viewpoints. This led us to home in on personal experiences of bias and microaggressions, both in personal interactions and through technology. As the project progressed, guidance from mentors helped us navigate this emotionally charged topic in a healthier, more constructive manner, shifting from anger or frustration to a focus on open dialogue and deeper understanding of ongoing experiences of bias and unfair treatment.

Our exploration of various biases, coupled with practical applications in AI algorithms and user interactions, offered tangible evidence of AI’s deviation from neutral interpretations.

We started off trying to solve, or at least figure out, everything. Our earlier research question involved many ‘hows’, such as ‘how can we solve this problem?’, which is a long way from where we are now. We want to imagine a world where the technology we are exposed to on a daily basis, from artificial intelligence to biometric and facial recognition on our devices, is fair and unbiased.

Our question now is: can we begin to rethink how AI and algorithms are developed, so as to mitigate these biases, especially for people of color? At the core, we have always stayed on track with trying to demystify the nuances of algorithms and AI, just from a different perspective. There is the perspective of being critical of how opening up these discussions can take different turns; there is the perspective of how differently this affects different people; and there is the perspective of viewing this through a cultural lens, as a form of artistic expression.

Process Reflection by Christopher Theys

Working in the pioneering field of interdisciplinary and transcultural collaboration, particularly in the context of a progressive university model, has been a profoundly transformative experience for me. My journey in this collaboration was not just about contributing my skills in data science, creative art, and expression through code and technology, but also about learning from and with diverse individuals.

Interdisciplinarity was central to our project, integrating various fields such as technology, psychology, socio-economics, and social sciences. This approach was essential in understanding and addressing biases in AI. By combining technical aspects of AI and algorithm development with insights into human behavior and social implications, we were able to create a more comprehensive and effective interactive installation. This interdisciplinary methodology enriched our perspective and allowed for a deeper exploration of the complex interplay between technology and societal issues.

Initially, our project was overwhelmed with abundant information and diverse perspectives. This led us to focus on personal experiences related to bias and microaggressions, encountered both interpersonally and through technology. As the project developed, mentor discussions guided us to approach this emotionally charged topic from a healthier standpoint, rather than a position of anger or frustration. Our evolved research question aimed to foster open dialogues about the continuous experiences of bias and unfair treatment, seeking to explore and understand these issues more deeply and constructively.

Through our project, “Mirror Mirror”, we learned that our hypothesis about biases in AI algorithms held substantial truth. The development of “The Bias Mirror” installation revealed significant biases in AI, particularly in facial recognition. Our research on various biases, combined with the practical implementation in AI algorithms and user interactions, provided concrete insights into how AI deviates from unbiased interpretations. The project, evolving through mentorship and focused discussions, taught us about the complex nature of bias in technology and its interpersonal impacts, enhancing our understanding beyond the initial hypothesis.