Virtual environments seem to be the next level of visualization, communication and collaboration. Designed to create an immersive perceptual illusion, they stimulate the senses of an increasingly sophisticated audience – but what’s actually happening beneath their impressive surface? And how have language and expression adapted over time to meet the growing challenges of reality? Florian Berger, Lead Developer and Artist at the Ars Electronica Futurelab, talks about his approach to visual illusion and artificial reality in virtual space – and the crucial code behind it all.

How does the Ars Electronica Futurelab approach the development of virtual environments?

“Since the founding of the Ars Electronica Futurelab, a key aspect of our research and development agenda has been to develop hardware and software that give artists low-threshold access to flexible and powerful virtual reality systems.” – Florian Berger

Florian Berger: In 1996, the (re)construction of a CAVE was the Ars Electronica Futurelab’s first experiment in creating publicly accessible virtual illusions of reality for visitors to the Ars Electronica Center.

The CAVE (CAVE Automatic Virtual Environment) was a very new interface to virtual reality at the time and was originally developed for research purposes. The 3-by-3-by-3-meter cubic space surrounded participants with projections on the walls and the floor. The CAVE was conceived as a collaborative scientific visualization environment for fields such as medicine, neuroscience and engineering. It was based on the idea of a common scientific visualization model that could be applied in many different ways: interactive modeling and visualization of medical and biological data; three-dimensional shape capture, modeling and manipulation; and three-dimensional faxing and teleconferencing using PCs.

The CAVE-Lib software, a library used to run VR programs in the CAVE, controlled a network of at least four computers that synchronized the wall and floor projections according to the viewers' position, creating an immersive perceptual illusion. Magnetic tracking determined the position of the users, who wore 3D glasses.
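The interview does not describe how CAVE-Lib computes each wall's image. As a hedged illustration, a widely used technique for head-tracked projection walls is the generalized off-axis perspective projection: the tracked eye position and the corners of a wall together determine an asymmetric viewing frustum for that wall. The Python sketch below computes only the frustum bounds (the values one would pass to OpenGL's `glFrustum`). The function and parameter names are hypothetical, and a complete implementation would also rotate into the wall's coordinate frame and translate by the eye position.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(a: Vec3) -> Vec3:
    length = math.sqrt(dot(a, a))
    return (a[0] / length, a[1] / length, a[2] / length)


def off_axis_frustum(pa: Vec3, pb: Vec3, pc: Vec3, pe: Vec3,
                     near: float) -> Tuple[float, float, float, float]:
    """Frustum bounds (left, right, bottom, top) at the near plane.

    pa, pb, pc: lower-left, lower-right and upper-left corners of the wall.
    pe: tracked eye position. All points share the same room coordinates.
    (Hypothetical helper for illustration, not CAVE-Lib's API.)
    """
    vr = normalize(sub(pb, pa))      # wall's right axis
    vu = normalize(sub(pc, pa))      # wall's up axis
    vn = normalize(cross(vr, vu))    # wall normal, pointing towards the viewer
    va, vb, vc = sub(pa, pe), sub(pb, pe), sub(pc, pe)
    d = -dot(va, vn)                 # distance from the eye to the wall plane
    scale = near / d                 # project wall extents onto the near plane
    return (dot(vr, va) * scale, dot(vr, vb) * scale,
            dot(vu, va) * scale, dot(vu, vc) * scale)


# Front wall of a hypothetical 3 m cube, viewer standing in the middle.
wall = ((-1.5, 0.0, -1.5), (1.5, 0.0, -1.5), (-1.5, 3.0, -1.5))
print(off_axis_frustum(*wall, (0.0, 1.5, 0.0), 0.1))   # symmetric frustum
print(off_axis_frustum(*wall, (0.5, 1.5, 0.0), 0.1))   # viewer stepped right: asymmetric
```

With the viewer centered, the frustum is symmetric; as soon as the tracked viewer steps sideways it becomes asymmetric, which is what keeps the image on a fixed wall perspectively correct for that viewer.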

Since the CAVE was installed at the Ars Electronica Center, experience has shown that artists are very interested in working with it. But projects realized with the CAVE in its original form presupposed that the artists who wanted to use this environment already possessed the relevant programming skills, or that they collaborated with specialists to realize their ideas.

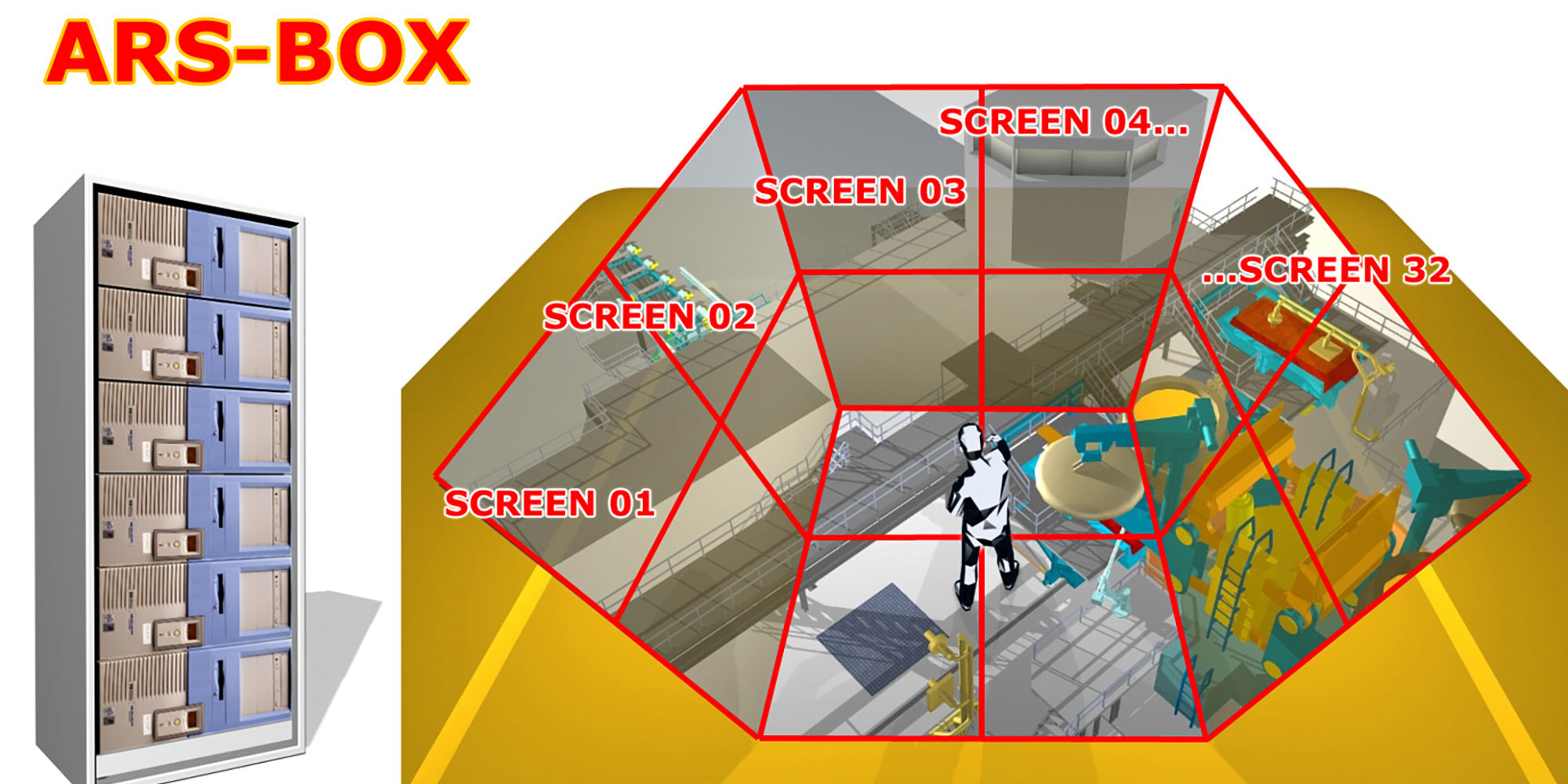

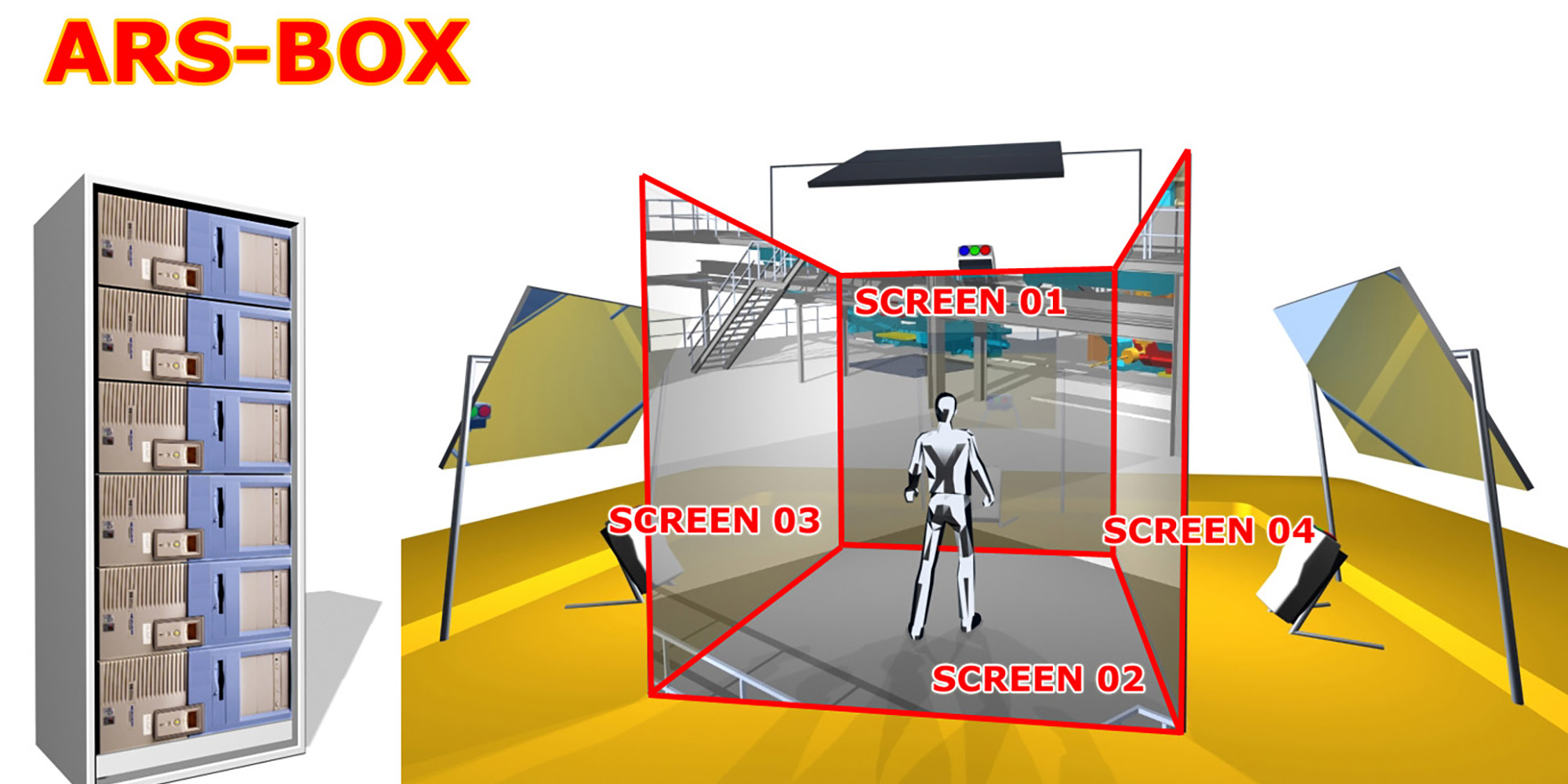

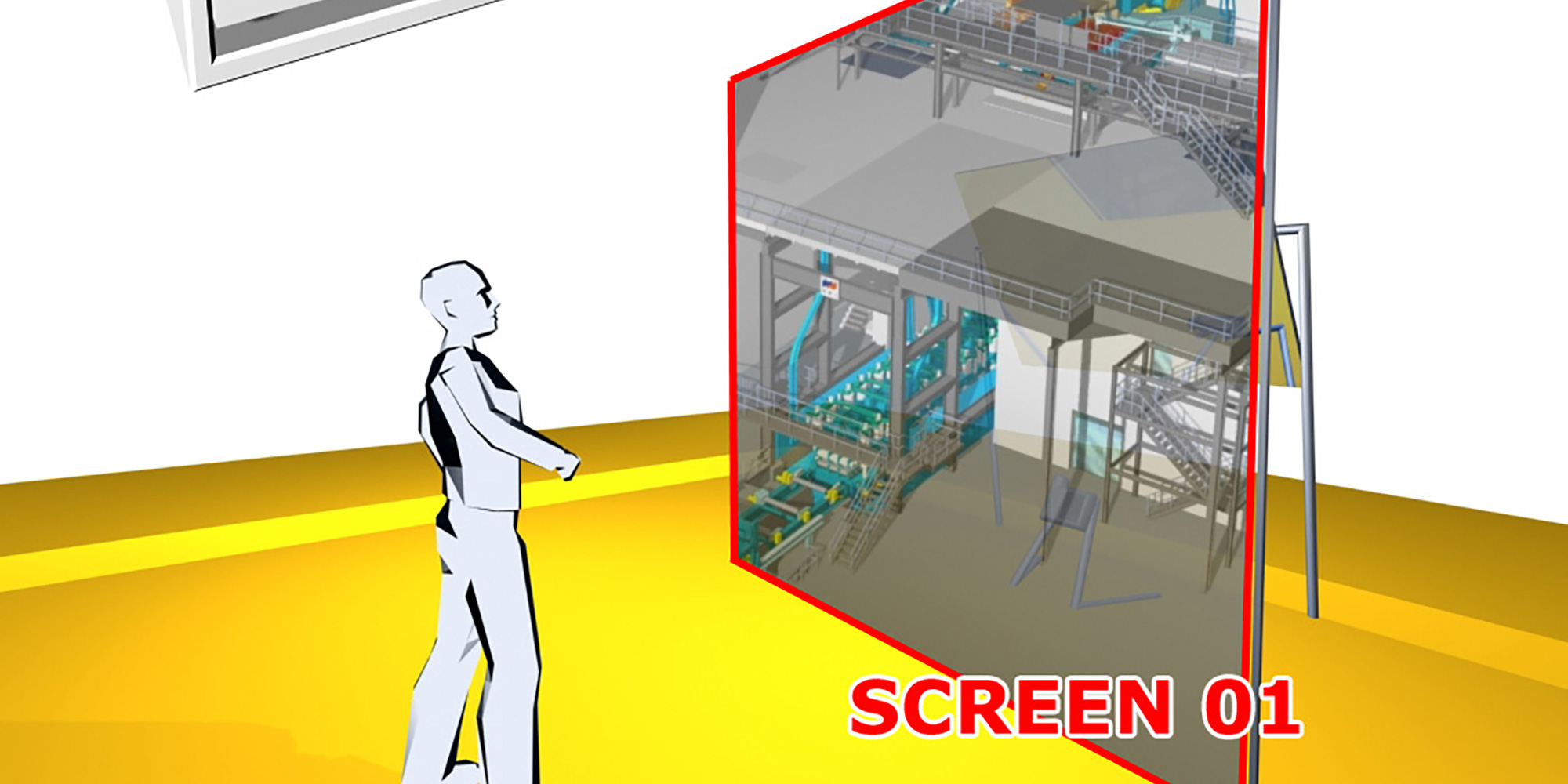

In addition, the applications themselves were necessarily tied to a costly hardware system, which significantly limited the opportunities to present such work. The switch from the mainframe computers originally required for immersive VR environments to PC-based systems was therefore an important milestone. The ARSBOX, a Linux PC cluster with GeForce FX graphics hardware developed at the Ars Electronica Futurelab, is a good example: at the time, it radically reduced the cost of implementing artistic projects.

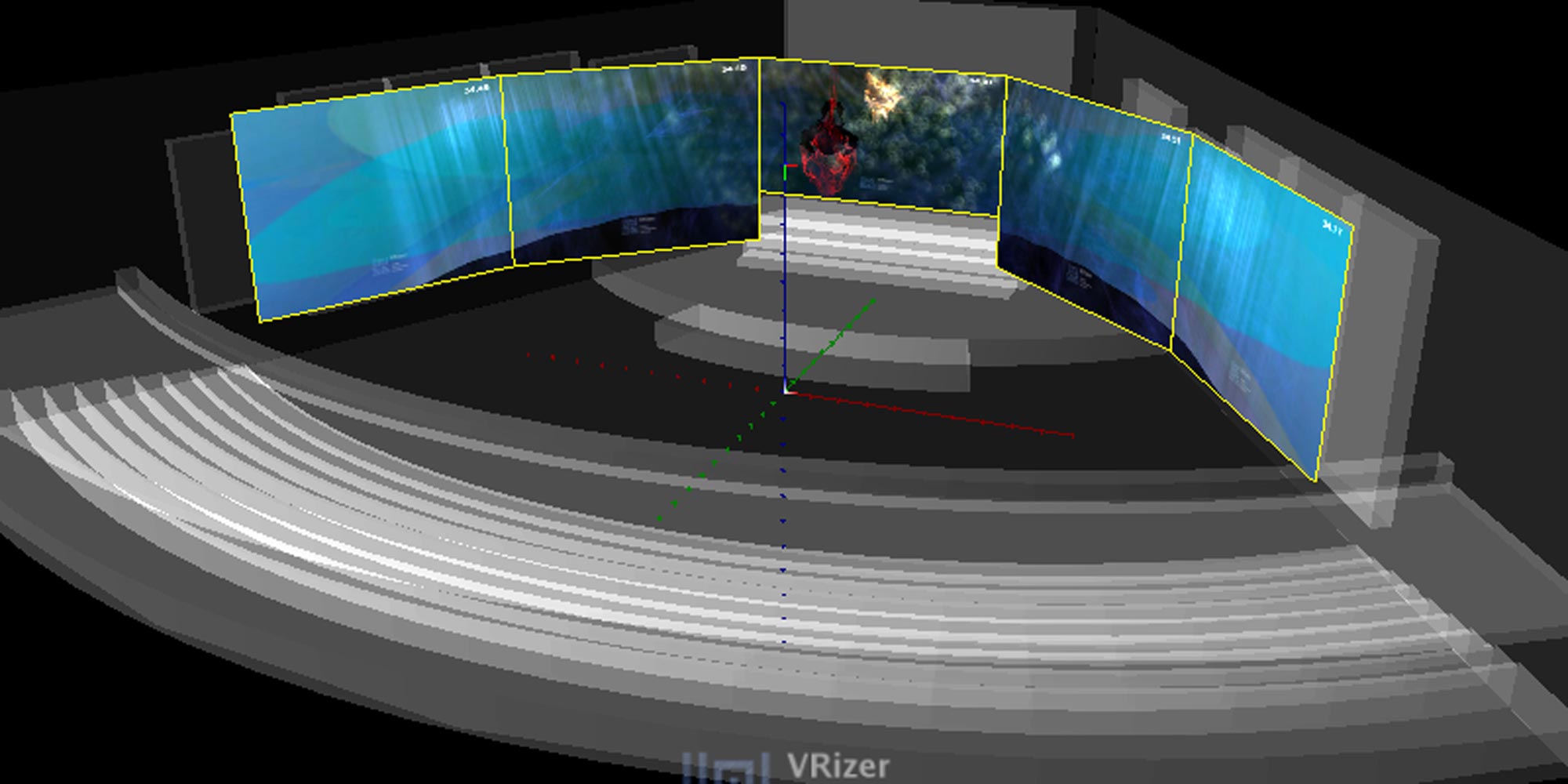

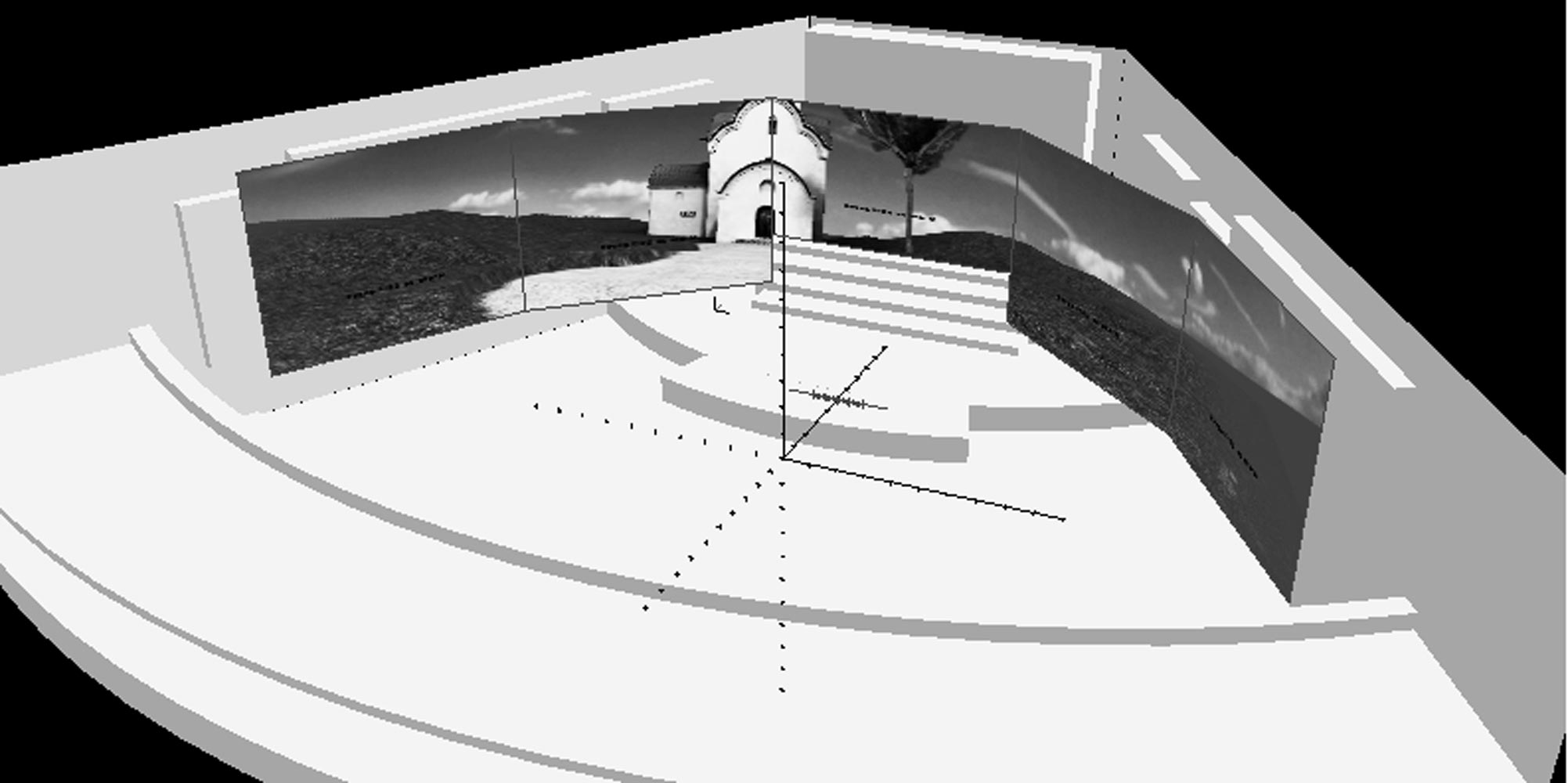

The 3D software VRizer, also developed here at the Lab, made it possible to achieve VR effects in the ARSBOX that were similar to those enabled by the corresponding application in the CAVE, with a decisive advantage: it ran on commercially available computers with a preinstalled Linux operating system and could be used with software that was not originally designed for virtual reality.

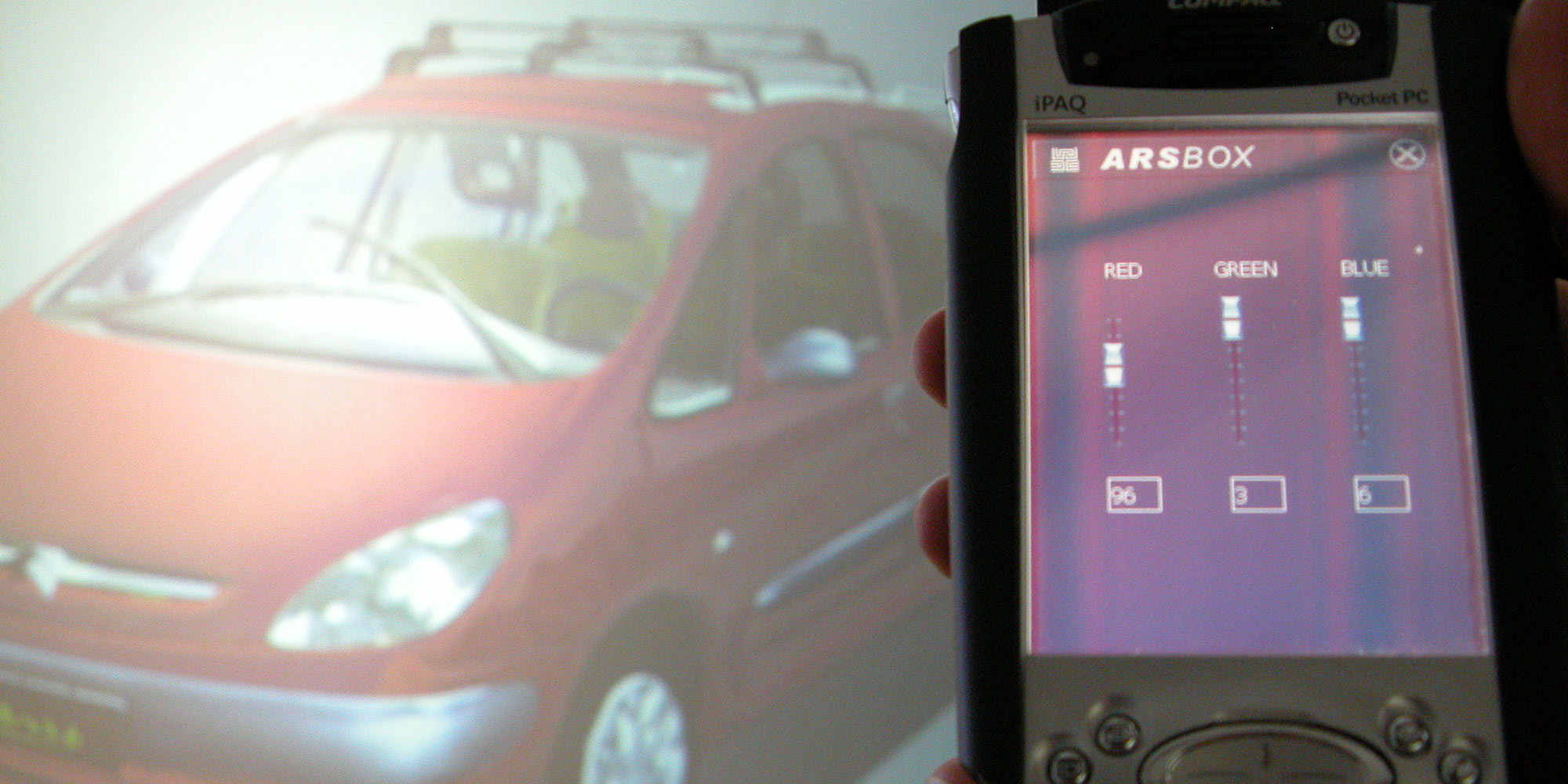

The ARSBOX made virtual prototyping possible in a simple, low-threshold way. The system’s interaction and control medium was a PALMIST – a pocket or tablet PC – equipped with WiFi and running the FATE software framework developed by the Ars Electronica Futurelab. The PALMIST provided all the basic functional features for controlling the projection surfaces: it could be used to change parameters of existing objects and create new ones, making it possible to visualize the results in real time.

The possibility of using stereoscopic visual environments to illustrate interactive product presentations and simulations also made the system highly relevant for the marketing sector. Visualizations of this kind had previously been reserved exclusively for large industrial companies. With ARSBOX and VRizer, smaller companies could now also use this state-of-the-art technology to create a stir.

ARSBOX and VRizer were also used successfully for cultural events at the Brucknerhaus in Linz (AT) and around the world.

What has changed? What sorts of new demands have arisen over the years?

Florian Berger: With Deep Space 8K, we now have a huge space in the Ars Electronica Center that, simply because of its 16-by-9-meter dimensions, can make visual illusions accessible to many people at once. That matters: for an institution like the Ars Electronica Center, a single-user installation is not enough, so the system has to be available to large groups of visitors.

You developed the 3D software VRizer. What projects has it been used for?

Florian Berger: ARSBOX is not the only setup the software has been great for. It was also used in many other stereo setups and, later, in a cutting-edge project in Deep Space 8K: a virtual staging of the Millionenzimmer in Schönbrunn Palace. For this collaborative research and development project, we staged a room from Schönbrunn Palace virtually as a high-end real-time visualization. It was a pilot project that set new standards for the digital preservation of irreplaceable cultural assets, i.e. cultural heritage.

The Ceremonial Hall and the Pink Room of the palace were also digitized as part of this project. As in the Millionenzimmer, the aim in these rooms was to reconstruct the ornate wall paneling, decorated with rare paintings, virtually and at the highest possible resolution, preserving it for posterity and making it accessible worldwide. The virtual production from Schönbrunn Palace was presented in Deep Space 8K and directly on site in Vienna with an ARSBOX.

Furthermore, for a 2004 production of Wagner's opera Das Rheingold, we used it to replace traditional stage scenery with a virtual backdrop. The stereoscopic projections that we were able to realize with the VRizer created a panorama on the walls of the stage area that extended the dimensions of the concert hall into virtual space. The computer-controlled backdrop responded to the music as it was performed and translated it into dynamic structures. To achieve this quite impressive result, the opera first had to be analyzed and interpreted by a digital system. The images were then modulated in real time in response to the music.

With SAP, a company whose software configures abstract business processes and gives them an organizational framework, we developed a digital guidance system called Source.Code in 2007. For this purpose, both everyday and less common processes that actually take place within the company were visualized digitally:

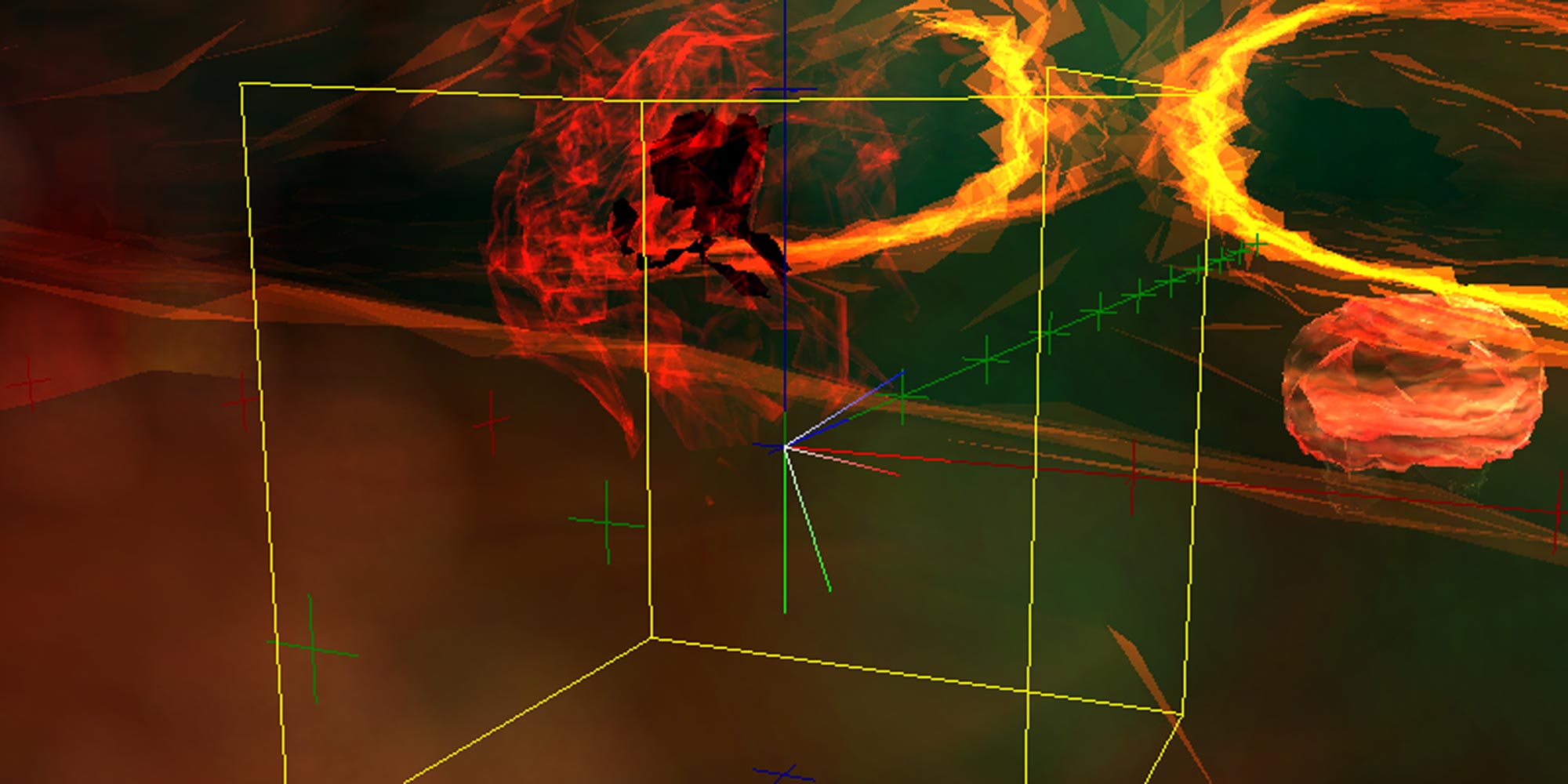

One hundred screens, linked to 30 computers and centrally fed with virtual content, presented visualizations of systemic processes within the company in a very playful way, forming an interactive visitor guidance system through the SAP headquarters in Walldorf. The visualization, process animals wandering in a virtual river, was intended to illustrate everyday processes within the corporate structure, such as writing emails. This gave people a way to perceive administrative processes through artistic expression. Here, the VRizer was used to calculate a convincing perspective for each of the screens.
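How VRizer computed the per-screen perspective for Source.Code is not described. Assuming, purely for illustration, that a group of screens forms a flat grid seen from one shared viewpoint, each screen can be given its own slice of a single common viewing frustum, so that objects crossing screen borders line up. The sketch below shows only that splitting step; `tile_frustums` and the grid layout are hypothetical, and screen bezels are ignored.

```python
from typing import List, Tuple

# (left, right, bottom, top) bounds at the near plane, as passed to glFrustum
Frustum = Tuple[float, float, float, float]


def tile_frustums(full: Frustum, cols: int, rows: int) -> List[List[Frustum]]:
    """Split one frustum into a rows x cols grid of sub-frustums.

    Indexing is tiles[row][col]; row 0 is the bottom row, col 0 the leftmost.
    Adjacent tiles share their edges, so the screens form one continuous view.
    """
    left, right, bottom, top = full
    width = (right - left) / cols
    height = (top - bottom) / rows
    tiles: List[List[Frustum]] = []
    for row in range(rows):
        row_tiles: List[Frustum] = []
        for col in range(cols):
            x0 = left + col * width       # left edge of this screen's slice
            y0 = bottom + row * height    # bottom edge of this screen's slice
            row_tiles.append((x0, x0 + width, y0, y0 + height))
        tiles.append(row_tiles)
    return tiles


# A hypothetical wall of 4 x 2 screens sharing one camera.
grid = tile_frustums((-2.0, 2.0, -1.0, 1.0), cols=4, rows=2)
for screen_row in grid:
    print(screen_row)
```

Each render node would then set up its projection from its own tile while using the same camera position and orientation as all the others.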

So what exactly is the VRizer?

Florian Berger: The VRizer, this 3D visualization software developed at the Ars Electronica Futurelab, used the latest technology for a graphical representation of data, setting new standards in the field of real-time computer graphics. But to really answer this question, I need to backtrack a bit:

In 2004, when we developed VRizer, game engines were the most widely used method for creating PC-based, real-time interactive applications. Many games were already equipped with powerful editors as standard, offering a wide range of users the opportunity to realize their own ideas of a three-dimensional environment. With the help of game editors, it was possible to create highly complex, effective and dramatic environments with a minimum of effort.

With the development of VRizer, however, the Ars Electronica Futurelab went a decisive step further and closed the gap between applications based on game engines and the ARSBOX PC cluster system. With this software, we provided a library that can use any OpenGL software as the basis for stereoscopic VR projections, without our code having to modify the original code of that software. From a single continuous sequence of images, it obtains multiple views of the same scene from different perspectives.
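The interview does not say how VRizer attaches to an unmodified OpenGL program. One common technique for this kind of non-invasive multi-view rendering is a wrapper library placed between the application and the graphics driver (on Linux, for example, loaded with `LD_PRELOAD`) that records the drawing commands of a frame and replays them once per view with a different camera. The Python sketch below models only this record-and-replay idea under that assumption; it issues no real OpenGL calls, and `DrawCommand`, `FrameRecorder` and the command names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class DrawCommand:
    name: str            # hypothetical command name, e.g. "draw_triangles"
    args: tuple = ()


@dataclass
class FrameRecorder:
    commands: List[DrawCommand] = field(default_factory=list)

    def record(self, name: str, *args) -> None:
        """Called by the intercepting layer instead of drawing immediately."""
        self.commands.append(DrawCommand(name, args))

    def replay(self, views: List[str],
               execute: Callable[[str, DrawCommand], None]) -> None:
        """Re-issue the whole recorded frame once per view.

        `execute` receives the view name with every command, so it can
        apply that view's camera before drawing.
        """
        for view in views:
            for cmd in self.commands:
                execute(view, cmd)

    def clear(self) -> None:
        """Discard the recorded frame before recording the next one."""
        self.commands.clear()


# The application draws its frame once, unaware of stereo...
recorder = FrameRecorder()
recorder.record("clear")
recorder.record("draw_triangles", 12)

# ...and the wrapper renders it for both eyes.
log = []
recorder.replay(["left", "right"], lambda view, cmd: log.append((view, cmd.name)))
print(log)
```

The key design point is that the application's own code path stays untouched: it issues each command once, and all view-specific work happens in the layer that replays the frame.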

In the art scene at that time, the most widely used engine was "Unreal Tournament 2003," which also made it the obvious component for the first "VRizer" applications. In addition to artistic works, the Ars Electronica Futurelab staff also modified game-editor-based architectural visualization software and computer games, two closely related types of programs, for the VRizer's immersive virtual environments. The first examples were presented to the public at the Ars Electronica Festival 2003: CODE – The Language of Our Time.

Since game engines generally have very long release cycles, new hardware features were often not (yet) accessible in them. So it was a logical step to develop our own engine that gave access to the latest hardware features, such as vertex and pixel shaders: for this purpose, we developed the FL engine on the basis of OpenGL.

Furthermore, developing the FL engine was a necessary step toward using modern hardware professionally; conventional game engines were not designed for this at the time. To implement the FL engine, we used the latest features of OpenGL and wrote new plug-ins, because we simply needed an adequate system for our contemporary approach to programming.

So with the VRizer, stereo projections can be created from a mono frame stream. How does that work?

Florian Berger: Of course it's not magic. The VRizer is built on OpenGL, and in OpenGL a scene exists as a three-dimensional representation, geometry made up of triangles, before it is rasterized into pixels and drawn on the screen.

So, in principle, we can change the viewpoint and other parameters frame by frame. In the simplest case, we display the same scene once from the perspective of the left eye and, in the next frame, from the perspective of the right eye. That of course requires shutter glasses synchronized with the frame switches.
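A minimal sketch of this frame-sequential approach, assuming a tracked head position, a unit vector pointing to the viewer's right, and a typical interpupillary distance of about 64 mm (an assumed default, not a value from the interview): even frames are rendered from the left eye, odd frames from the right eye. `eye_for_frame` is a hypothetical helper; each eye position would then feed the projection setup for that frame.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def eye_for_frame(frame: int, head: Vec3, right: Vec3,
                  ipd: float = 0.064) -> Tuple[str, Vec3]:
    """Return which eye a frame is for and that eye's position.

    head: tracked head (mid-eye) position in meters.
    right: unit vector pointing to the viewer's right.
    ipd: interpupillary distance in meters (assumed default).
    """
    eye = "left" if frame % 2 == 0 else "right"    # alternate every frame
    offset = (-ipd if eye == "left" else ipd) / 2.0
    position = (head[0] + right[0] * offset,
                head[1] + right[1] * offset,
                head[2] + right[2] * offset)
    return eye, position


for frame in range(4):
    print(frame, eye_for_frame(frame, (0.0, 1.6, 0.0), (1.0, 0.0, 0.0)))
```

Because the eyes alternate, each eye receives only half of the display's refresh rate (60 images per second on a 120 Hz projector, for example), which is why frame-sequential stereo needs high refresh rates.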

Another option is to render the left-eye frame in red only and the right-eye frame in green or blue (or both), and to combine them into a single frame. Wearing red-green glasses, we then get a stereoscopic view from the sequence of monoscopic frames in a very simple way.
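The channel combination described above can be sketched as follows, assuming both eye views are available as same-sized RGB images stored as nested lists of `(r, g, b)` tuples (a hypothetical representation chosen for readability): red comes from the left-eye image, green and blue from the right-eye image. In OpenGL, the same result is commonly obtained without copying images, by rendering the left view with `glColorMask(GL_TRUE, GL_FALSE, GL_FALSE, GL_TRUE)`, clearing the depth buffer, and then rendering the right view with `glColorMask(GL_FALSE, GL_TRUE, GL_TRUE, GL_TRUE)`.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def anaglyph(left: Image, right: Image) -> Image:
    """Combine two eye views into a single red / green-blue anaglyph frame."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same size")
    return [
        # red from the left eye, green and blue from the right eye
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(left_row, right_row)]
        for left_row, right_row in zip(left, right)
    ]


left_view = [[(200, 10, 20), (50, 60, 70)]]
right_view = [[(5, 100, 150), (80, 90, 30)]]
print(anaglyph(left_view, right_view))
```

Keeping green and blue together for the right eye matches red-cyan glasses; dropping blue would match pure red-green glasses.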

From today’s perspective, where is the future of virtual worlds headed?

Florian Berger: Development will move toward unifying the many existing virtual platforms. Many possibilities for high-end rendering are already easily available in the web browser today, for example through WebVR and WebXR. So the next logical step is to use these technologies on a large scale for VR applications. This will ultimately lead to unification, not only of VR software but also across hardware and operating systems.

With Deep Virtual, developed in tandem with the production of seven episodes for the 25th Anniversary Series on Ars Electronica Home Delivery, the Ars Electronica Futurelab recently created a completely new VR system that takes the illusion of a virtual space a decisive step further. Deep Virtual is the concept for a Deep Space format that will be developed step by step over the course of the seven episodes. It places the protagonists in Deep Space 8K in an immersive setting suited to their presentations, talks and discussions. Conceived as an immersive and hybrid media format, Deep Virtual will allow viewers to immerse themselves in virtual worlds together. It also represents a step into the future of Deep Space 8K.

Florian Berger studied Theoretical Physics at JKU Linz and has been working at the Ars Electronica Futurelab since 2001. At that time, his applied research focused on real-time computer graphics, simulation, signal processing and embedded systems. As part of the Ars Electronica Futurelab's core creative team, he was responsible for developing a flexible, modular high-end graphics engine that was used for several large-scale stereoscopic music visualizations of concerts. He is one of the foremost researchers in the field of virtual reality and real-time computer graphics, and has recently served as chief software engineer for the Spaxels research team, among others. As a respected VR engineer and creative thinker, Florian Berger has played a key role in shaping the Ars Electronica Futurelab. At the Salzburg University of Applied Sciences, he teaches real-time computer graphics and supervises master's students' diploma theses.

Learn more about Florian Berger on the Ars Electronica Futurelab website, or subscribe to the Ars Electronica Home Delivery Channel to get a look at Deep Virtual in the 25th Anniversary Program of the Ars Electronica Futurelab. Explore Virtual Worlds in Episode One, or the poetic moment in Systems in Episode Two.

Six more episodes will deal with exciting topics related to the past, present and future of the Ars Electronica Futurelab. While reevaluating the past 25 years, the series will also look ahead, examining Creative (Artificial) Intelligence, Robots & Robotinity, Computation & Beyond, Swarms & Bots and Art Thinking.