A collaboration between Japanese telecommunications giant NTT and the Ars Electronica Futurelab, Sky Compass is the first step towards a vision of employing drones (or UAVs, Unmanned Aerial Vehicles) in public signage, guidance and the facilitation of traffic.

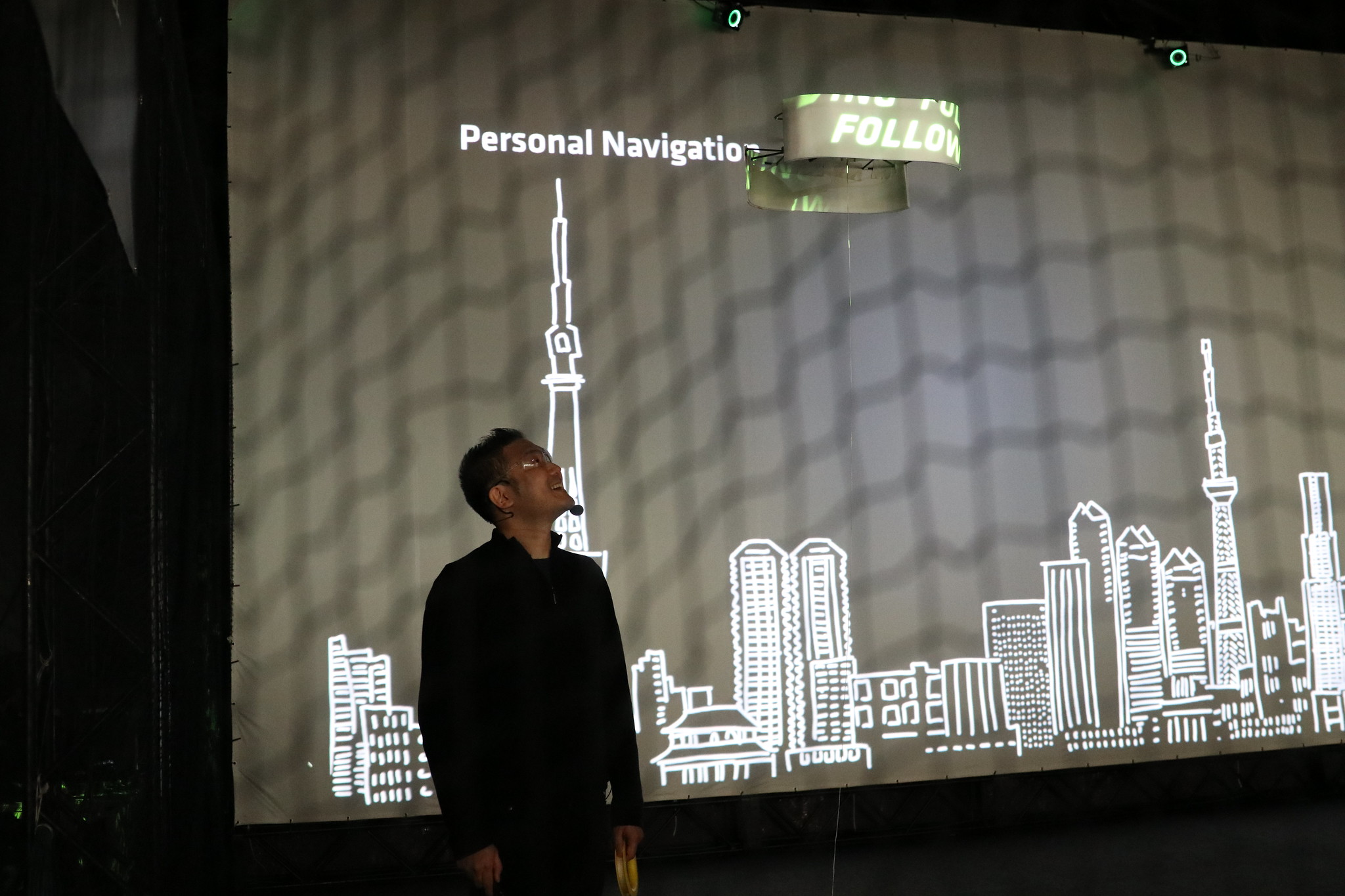

It took shape in a series of special demonstrations at the NTT R&D Forum 2017 in Musashino, Tokyo. The aim of this cooperation is an appealing, animated guidance aid for the world's largest metropolitan area, which is also a high-powered economy driven by technological innovation. Imagine yourself as a visitor to this densely populated urban expanse, unfamiliar with the local language and topography: personal drones guiding you to your desired destinations; intelligent airborne agents regulating the flow of traffic; swarms of brightly lit quadcopters forming glowing signs and symbols in the night sky …

The vision for animating Tokyo together in 2020: guiding people with signage that can be seen from great distances. Credit: Markus Scholl

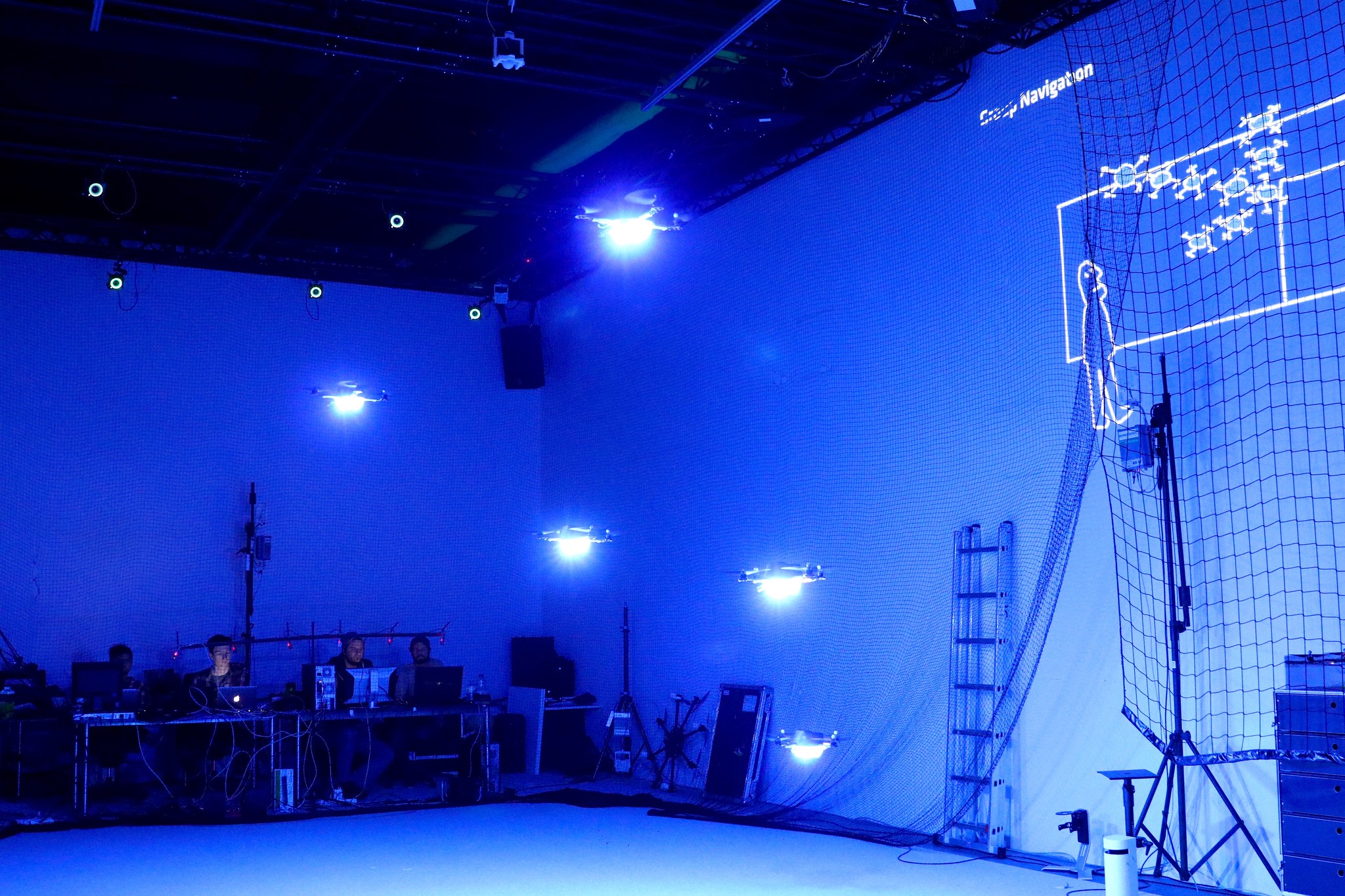

Realising this vision requires a number of technological advances and a commitment to intensive research and development. The aim of the Sky Compass prototype at the R&D Forum was to convey a feeling for the possibilities through a first physical demonstration: a number of "scenes" in which up to five drones at a time moved around a volume in the NTT building, performing synchronised dances and reacting to the movements of people in the space. Researchers from the Ars Electronica Futurelab and NTT used this display to talk about research that is less obvious than the purely technical kind, but equally necessary. How would we communicate with such robotic agents? What kind of language would we need to design so that they can make themselves understood and, an even bigger challenge, so that they can understand us?

Research Questions: “Sky Language” and “User Responsibility Design”

On a personal scale, the Guide Drone enters into a one-to-one relationship with its (possibly temporary) owner. As it leads the owner to a destination, it has to negotiate a complex urban landscape. The user, tethered to the drone by a physical or metaphorical leash, follows it as they would a guide dog and has some degree of control over it. As with a dog, the user is not free of responsibility: deciding how this responsibility is distributed between the drone (and its operating authority) on the one hand and the owner/user on the other will be a crucial design task.

On a group level, drones may form shapes that act as signage in public space. A widely understood symbolic language for traffic and public navigation is already well established worldwide, but how can it be transferred to the dynamic shapes (and extended capabilities) of small drone swarms?

Finally, on a city-wide scale, organising swarms of hundreds (or even thousands) of drones in the sky to display information recognisable from a number of vantage points opens up research questions in the field of spatio-temporal visual design under strong logistical constraints – an area in which the Ars Electronica Futurelab, with its successful Spaxels project, can draw on ample experience.

Demonstration: Technology

For the five days of demonstrations, an 8 × 8 × 5 metre volume was built as a tarp-covered enclosure on a terrace of the NTT building. Inside this volume, sixteen OptiTrack cameras worked in conjunction with the Ars Electronica Futurelab's custom Ground Control software, allowing up to five drones to navigate precisely at the same time. The presenter's movements were tracked by a custom solution based on laser rangefinders, and for a special experiment exploring a visual mode of communication, a mirror head was used to achieve real-time projection mapping onto the moving drone. Technologies under research at NTT, namely speech recognition and live density maps based on Wi-Fi signals, were also integrated to demonstrate further potential capabilities of the drone agents.

The faster miniaturisation and lightweight materials technology progress, the more easily we will be able to integrate all of these technologies into the UAVs themselves rather than relying on stationary peripherals – and in a few years it may well be possible to have a personal quadcopter take us to all the best spots in an unfamiliar city …

Credits

Ars Electronica Futurelab: Roland Haring, Peter Holzkorn, Michael Mayr, Martin Mörth, Otto Naderer, Nicolas Naveau, Hideaki Ogawa, Michael Platz

NTT: Hiroshi Chigira, Kyoko Hashiguchi, Shingo Kinoshita