Lecture-Performance

Three performers, two of them human.

A conversation between three intellects, one of them artificial.

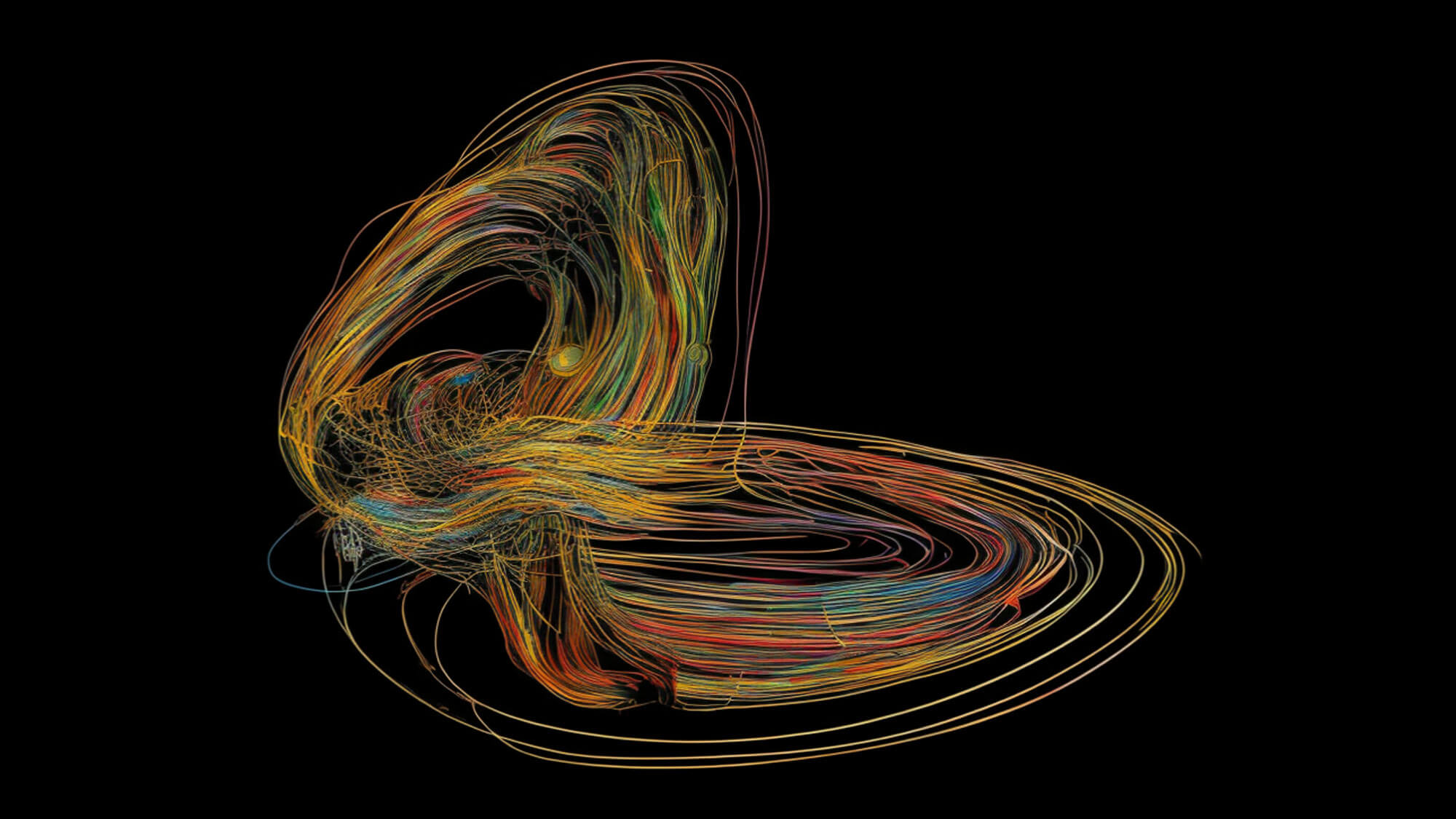

Vibrate ResonAIte connects human and artificial creativity in a dialogue of images and sound.

Mutual inspiration and provocation result in blending and diffusion of the participating minds until physical boundaries seem to disappear.

Generated images are transferred to video, where they are processed and edited into a seamless visual stream. The visuals are accompanied by live music created in real time, based on the evolving images. A fusion of electronic and experimental elements, the music acts as a dramaturgic counterpoint and connective layer. It deepens the immersion of the visuals and engages the audience through several senses at once.

As a platform and system, ResonAIte explores the infinite possibilities of AI algorithms in the image domain. In a continuous process of image creation, an image generated from a given prompt is converted into a text description, which is then fed back as the prompt for the next image. These feedback loops, acting as quasi-resonance frequencies of the cultural-historical space, question our understanding of source, truth, and the process of creation.
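The loop described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the project's actual implementation: `feedback_loop`, `toy_generate`, and `toy_describe` are hypothetical names, and the two toy functions stand in for a real text-to-image model and a real image-captioning model, neither of which the text names.

```python
from typing import Callable, List, Tuple


def feedback_loop(
    seed_prompt: str,
    generate_image: Callable[[str], str],
    describe_image: Callable[[str], str],
    steps: int,
) -> List[Tuple[str, str]]:
    """Run the prompt -> image -> description -> prompt cycle.

    Returns the (prompt, image) pair produced at every step, so the
    drift away from the seed prompt can be inspected afterwards.
    """
    history: List[Tuple[str, str]] = []
    prompt = seed_prompt
    for _ in range(steps):
        image = generate_image(prompt)    # text -> image
        history.append((prompt, image))
        prompt = describe_image(image)    # image -> text, reused as next prompt
    return history


# Hypothetical stand-ins so the sketch runs without any model.
# Images are represented as plain strings here.
def toy_generate(prompt: str) -> str:
    return f"<image of {prompt}>"


def toy_describe(image: str) -> str:
    # Strip the "<image of " prefix and the closing ">".
    return "a picture showing " + image[len("<image of "):-1]


if __name__ == "__main__":
    for prompt, image in feedback_loop(
        "a vibrating string", toy_generate, toy_describe, steps=3
    ):
        print(prompt, "->", image)
```

With real models in place of the toy functions, each pass would add its own interpretation, which is where the drift in source and meaning described above would come from.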

The project aims to create a continuous, ever-evolving artistic experience in which AI image algorithms interact with other artistic domains. It thereby seeks to contribute to the ongoing scholarly discourse on the intersection of art and technology and on the creative possibilities of integrating the two.